logo by @camiloferrua

Repository

https://github.com/to-the-sun/amanuensis

The Amanuensis is an automated songwriting and recording system aimed at ridding the process of anything left-brained, so one need never leave a creative, spontaneous and improvisational state of mind, from the inception of the song until its final master. The program will construct a cohesive song structure, using the best of what you give it, looping around you and growing in real-time as you play. All you have to do is jam and fully written songs will flow out behind you wherever you go.

If you're interested in trying it out, please get a hold of me! Playtesters wanted!

New Features

- What feature(s) did you add?

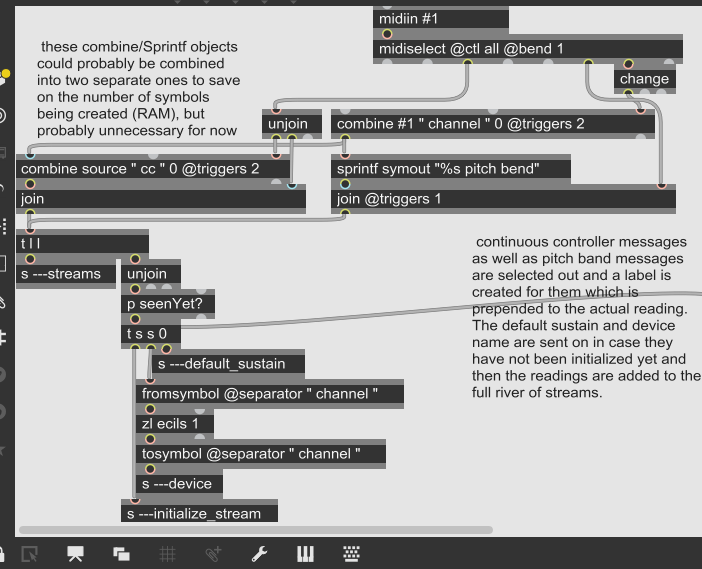

The Singing Stream has been fundamentally reworked to make it faster, more efficient, less obtrusive for users and cleaner in the code as well. This major overhaul was accomplished by abandoning the previous reliance on poly~ in favor of a new system that uses scripting to create abstractions dynamically. Each new stream (i.e. a source you would like to use as a MIDI instrument: audio streams, game controllers, the mouse, etc.) must be handled separately. Now, each time a new stream is introduced, a new abstraction is created for it, which loads silently in the background in a fraction of a second.

Previously, a new poly~ voice had to be loaded for each stream and, because of the way the object works, all of the previously established voices had to be reloaded as well (a process requiring many seconds). This made poly~ very slow, so I had implemented an entire loading screen, which disabled the UI, to be displayed while it was working. This was rather obtrusive and could be confusing for the user. In addition, I have continually found the poly~ object to be a confounding source of bugs, and the more I code with this language, the more I'm inclined to recommend avoiding it altogether. Dynamically creating abstractions with scripting makes the code much easier to understand, more streamlined and much better performing.
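To put the difference in rough terms, here is a small Python model (not Max code) that simply counts loads. It assumes, as described above, that adding a stream under poly~ reloaded every existing voice plus the new one, while the new approach loads one abstraction per stream. The function names are made up for illustration, and the model says nothing about how long each load takes.

```python
def poly_voice_loads(n_streams: int) -> int:
    """Total voice loads if adding stream k reloads all k voices (assumed model)."""
    return sum(range(1, n_streams + 1))


def abstraction_loads(n_streams: int) -> int:
    """Total loads if each new stream creates exactly one new abstraction."""
    return n_streams


if __name__ == "__main__":
    for n in (1, 4, 8):
        print(n, poly_voice_loads(n), abstraction_loads(n))
```

Under this assumption the poly~ total grows quadratically with the number of streams, while the abstraction total grows linearly.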

- How did you implement it/them?

If you're not familiar, Max is a visual language, and textual representations like those shown for each commit on GitHub aren't particularly comprehensible to humans. You won't find any of the commenting there either. Therefore, I will present the completed work using images instead. Read the comments in those to get an idea of how the code works. I'll keep my description here focused on the process of writing that code.

These are the primary commits involved:

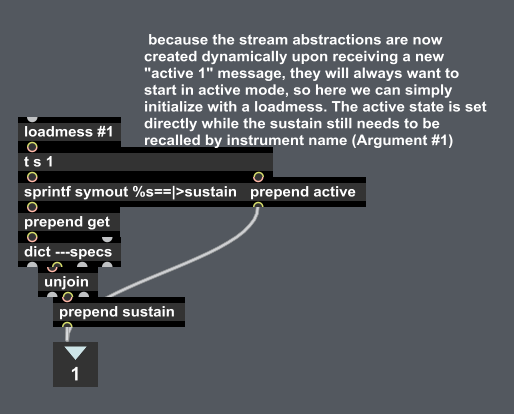

The new subpatcher seen below manages the creation of the stream.maxpat abstractions. It essentially replaces the previous p target. These new abstractions can be instantiated with unique arguments denoting the source names of the streams. This is one of many conveniences and simplifications over the previous setup: before, source names had to be stored and recalled with coll ---sources, whereas now they can be referenced directly with #1.

the new streams subpatcher in singingstream.maxpat, complete with commenting
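For readers who can't view the image, the general idea of creating an abstraction by scripting can be sketched in Python as building the message text that would be sent to a thispatcher object. This is only an illustration: the `script newdefault` form is standard thispatcher scripting, but the variable names, coordinates and helper functions here are hypothetical, and the actual messages used in the patch may differ.

```python
def new_abstraction_message(varname, x, y, abstraction, *args):
    """Build a thispatcher 'script newdefault' message as plain text."""
    parts = ["script", "newdefault", varname, str(x), str(y), abstraction]
    parts.extend(str(a) for a in args)
    return " ".join(parts)


def stream_message(source, index):
    """Message creating one 'stream' abstraction for a source (hypothetical layout)."""
    varname = f"stream_{index}"  # hypothetical scripting name
    return new_abstraction_message(varname, 20, 40 * index, "stream", source)


if __name__ == "__main__":
    print(stream_message("mouse", 1))
```

The source name is passed as the abstraction's first argument, which is what makes it available as #1 inside the abstraction. Source names containing spaces would need extra handling that this sketch leaves out.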

If you're not familiar with Max, an abstraction is a patch, or portion of code, saved as a .maxpat file that can be referenced by name anywhere in your code. As long as it can be found somewhere within the Max search path, it will be loaded and function just like any other subpatcher. The closest analogue in a more traditional language is probably a class.
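The way #1 stands in for the first argument of an abstraction can be pictured with a short Python sketch that performs the same kind of text substitution. The object text used in the example ("receive #1_pitch") is made up for illustration and is not taken from the patch, and the sketch does not model what Max does when an argument is missing.

```python
import re


def substitute_arguments(box_text: str, args: list[str]) -> str:
    """Replace #1..#9 in an object's text with the matching positional argument."""

    def replace(match):
        position = int(match.group(1))
        if 1 <= position <= len(args):
            return args[position - 1]
        # No argument supplied: this sketch leaves the placeholder untouched.
        return match.group(0)

    return re.sub(r"#([1-9])", replace, box_text)


if __name__ == "__main__":
    # Instantiating the abstraction with the argument "mouse":
    print(substitute_arguments("receive #1_pitch", ["mouse"]))
```

Each instance ends up with its own copy of the object text with the source name filled in, which is roughly why a separate lookup table of source names is no longer needed.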

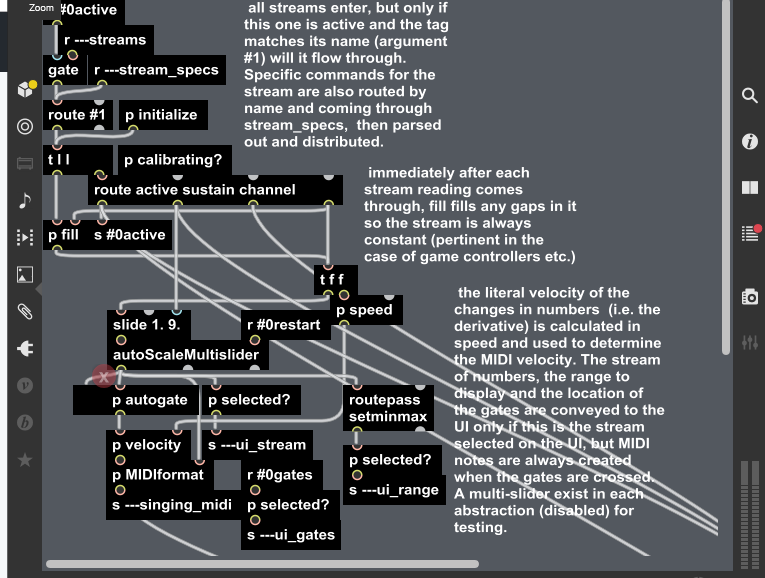

Until now, I had not realized that entire abstractions could be created dynamically using scripting. This opens up a whole new world of possibilities, using code to create batches of new code all at once. The main abstraction created in this update is stream.maxpat, seen below.

the new stream abstraction, complete with commenting

This patch functions much the same as the old polysingingstream.maxpat that it replaces, but it required adjustments throughout. One of the main ones is the reference to #1 instead of coll ---sources. Beyond that, however, the two subpatchers below were also completely reworked in this update.

p initialize inside the new stream abstraction, complete with commenting

p calibrating? inside the new stream abstraction, complete with commenting

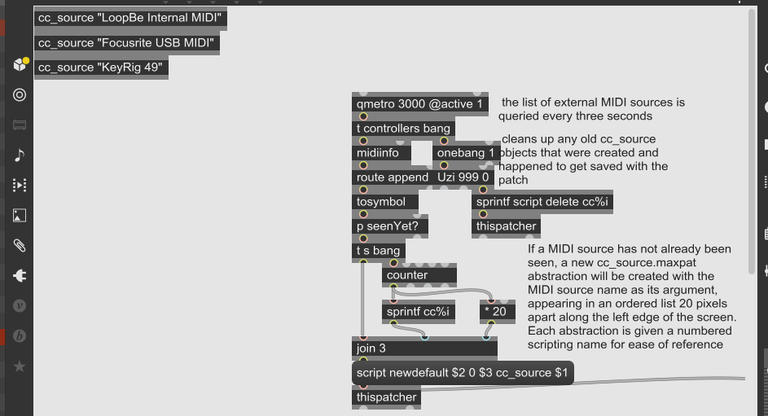

Because dynamically creating abstractions this way works so well, I decided to take it one step further and reimagine another subpatcher, cc, in the same light. It was already using scripting, but was doing so object by object, which requires you to connect the objects explicitly with patch cords and gets messy fast. Using abstractions is so much cleaner.
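To illustrate why object-by-object scripting gets messy, the following Python sketch compares the thispatcher messages needed in each case. The three-object chain, the `cc_1` name and the `midi_in` argument are hypothetical examples, not the objects actually used in cc; only the general `script newdefault` and `script connect` message forms are assumed.

```python
def object_by_object(chain):
    """Messages to create each object in a chain and wire outlet 0 to inlet 0."""
    messages = [
        f"script newdefault {name} 20 {40 * (i + 1)} {text}"
        for i, (name, text) in enumerate(chain)
    ]
    messages += [
        f"script connect {a[0]} 0 {b[0]} 0" for a, b in zip(chain, chain[1:])
    ]
    return messages


def as_abstraction(varname, abstraction, *args):
    """A single message: the wiring lives inside the abstraction file instead."""
    return [" ".join(["script", "newdefault", varname, "20", "40", abstraction, *args])]


if __name__ == "__main__":
    # Hypothetical chain of three objects.
    chain = [("in", "ctlin"), ("scale", "scale 0 127 0. 1."), ("out", "send cc_value")]
    print(len(object_by_object(chain)), "messages object by object")
    print(len(as_abstraction("cc_1", "cc_source", "midi_in")), "message as an abstraction")
```

A chain of n objects needs n creation messages plus n - 1 connections, whereas an abstraction needs one message regardless of how much it contains.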

the new cc subpatcher in singingstream.maxpat, complete with commenting

The code above identifies new MIDI sources and creates abstractions for them. The newly written abstraction below parses, tags and initializes the MIDI streams.

the new cc_source abstraction, complete with commenting
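The detection step described above (notice a MIDI source that has not been seen before and create an abstraction for it exactly once) can be sketched in Python as a small registry. The class, its method names and the generated scripting names are hypothetical, and the parsing, tagging and initializing done inside cc_source is not modelled.

```python
class SourceRegistry:
    """Tracks which sources already have an abstraction (illustrative model)."""

    def __init__(self):
        self._known = {}  # source name -> scripting name of its abstraction

    def observe(self, source):
        """Return a creation message for a new source, or None if already known."""
        if source in self._known:
            return None
        varname = f"cc_source_{len(self._known) + 1}"  # hypothetical naming scheme
        self._known[source] = varname
        y = 40 * len(self._known)
        return f"script newdefault {varname} 20 {y} cc_source {source}"


if __name__ == "__main__":
    registry = SourceRegistry()
    for name in ["keyboard", "pads", "keyboard"]:
        print(name, "->", registry.observe(name))
```

Repeated events from the same source produce no further messages, so each source gets a single abstraction no matter how often it sends data.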
