If this is your first time seeing this series, I recommend starting at the beginning.

Building A New Nas

You can find all the other posts below. This is the 10th post in the series.

Install the Operating System

I am going to be using Ubuntu 18.04 Server. The operating system will be installed on a pair of 256GB SSD drives using Raid 1. I will be using ZFS on Linux for the 15 data drives.

Installation was easy except for the initial problem with the switch firmware that I mentioned in the last post. I did not expect it, but neither of my network cards would pick up a link light.

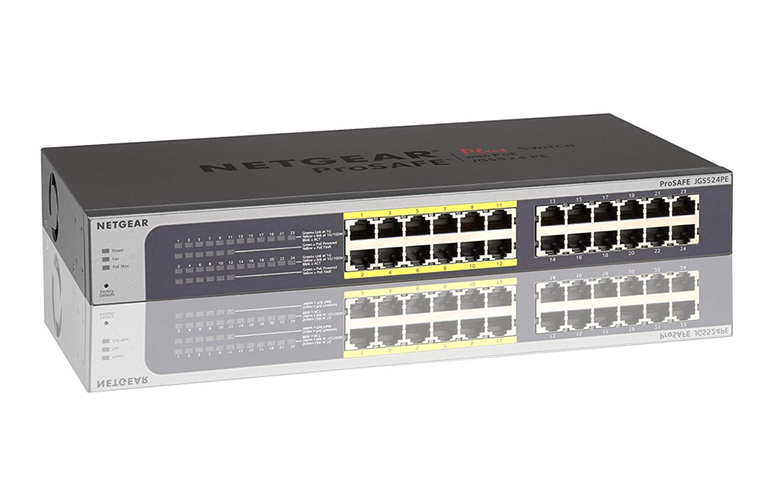

I thought it might have something to do with the fact that they were 10Gbit network cards, but out of curiosity I checked my 1Gbit switch to see how old the firmware was. A lot of SOHO (small office / home office) network equipment is very poorly maintained, and many issues are only resolved in later firmware releases.

I use a 1Gbit 24 port switch with 12 PoE (Power over Ethernet) ports. I use the PoE ports for Wi-Fi endpoints and cameras. I will switch to a full 10Gbit network once 24 port 10Gbit switches are reasonably priced. For now, I will only be using 10Gbit between my main workstation and my NAS.

Outside of the switch firmware issue, installation was really easy.

SAS Expander

Using the SAS expander was plug and play. I just installed the card, used the male to male 8087 SAS cables to connect the motherboard ports to the SAS expander and plugged all the drives into the SAS expander board. This allows me to use up to 24 drives instead of the 8 supported by the motherboard.

Testing

Connecting and wiring the 15 SAS drives was a big pain in the butt. Once done though, everything worked immediately. No hardware failures anywhere, and all 15 drives spun up immediately.

I initially tested Raid 0 with all 15 drives striping data. This gives the absolute best performance you are going to get, with zero data protection. If a single drive fails, you lose all data across all 15 drives. My final configuration will be either (3) Raidz Striped (Raid 5 x 3 Striped) or Raidz2 (Raid 6).

I haven't decided, but I am leaning towards (3) Raidz Striped. This will give me three smaller (5 drive) Raid 5 arrays with Raid 0 between the three arrays. In this configuration, I can lose up to three drives (one from each array) and still have a working array, but if two drives from any one of the 5 drive arrays fail, I will lose data on all 15 drives. Raidz2 would allow me to lose any two drives and remain running. (3) Raidz will be faster, but potentially riskier.
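To make the trade-off between the three layouts easier to compare, here is a rough Python sketch of the arithmetic. The `Layout` class and its field names are purely illustrative, the 4 TB drive size is an assumption based on the ST4000NM0023 model number, and the capacity figures ignore ZFS metadata and padding overhead, so treat them as upper bounds rather than what `zfs list` would report.

```python
from dataclasses import dataclass

DRIVE_TB = 4  # assumption: ST4000NM0023 is a 4 TB drive


@dataclass(frozen=True)
class Layout:
    """Hypothetical helper describing a pool of identical striped vdevs."""
    name: str
    vdevs: int            # top-level vdevs striped together
    drives_per_vdev: int  # drives in each vdev
    parity: int           # parity drives per vdev (0 = plain stripe)

    @property
    def data_drives(self) -> int:
        return self.vdevs * (self.drives_per_vdev - self.parity)

    @property
    def raw_capacity_tb(self) -> int:
        # Upper bound only: ignores ZFS metadata and padding overhead.
        return self.data_drives * DRIVE_TB

    @property
    def guaranteed_failures(self) -> int:
        # Worst case: every failed drive sits in the same vdev.
        return self.parity

    @property
    def best_case_failures(self) -> int:
        # Best case: failures are spread out, `parity` per vdev.
        return self.vdevs * self.parity


LAYOUTS = [
    Layout("Stripe (Raid 0)", vdevs=15, drives_per_vdev=1, parity=0),
    Layout("(3) Raidz striped (Raid 50)", vdevs=3, drives_per_vdev=5, parity=1),
    Layout("Raidz2 (Raid 6)", vdevs=1, drives_per_vdev=15, parity=2),
]

for layout in LAYOUTS:
    print(
        f"{layout.name}: {layout.raw_capacity_tb} TB raw, "
        f"survives any {layout.guaranteed_failures} failure(s), "
        f"up to {layout.best_case_failures} if they are spread out"
    )
```

Under these assumptions the striped Raidz layout gives up one more drive of capacity than Raidz2 and only guarantees surviving a single failure, which is the "potentially riskier" part.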

Striped Performance (aka Raid 0)

NAME STATE READ WRITE CKSUM

tank ONLINE 0 0 0

sda ONLINE 0 0 0

sdc ONLINE 0 0 0

sdd ONLINE 0 0 0

sde ONLINE 0 0 0

sdf ONLINE 0 0 0

sdg ONLINE 0 0 0

sdh ONLINE 0 0 0

sdi ONLINE 0 0 0

sdj ONLINE 0 0 0

sdk ONLINE 0 0 0

sdl ONLINE 0 0 0

sdm ONLINE 0 0 0

sdn ONLINE 0 0 0

sdo ONLINE 0 0 0

sdp ONLINE 0 0 0

WRITE: bw=1418MiB/s (1487MB/s)

READ: bw=2248MiB/s (2358MB/s)

Striped Raidz Performance (aka Raid 50)

NAME STATE READ WRITE CKSUM

tank ONLINE 0 0 0

raidz1-0 ONLINE 0 0 0

wwn-0x5000c500 ONLINE 0 0 0

wwn-0x5000c500 ONLINE 0 0 0

wwn-0x5000c500 ONLINE 0 0 0

wwn-0x5000c500 ONLINE 0 0 0

wwn-0x5000c500 ONLINE 0 0 0

raidz1-1 ONLINE 0 0 0

wwn-0x5000c500 ONLINE 0 0 0

wwn-0x5000c500 ONLINE 0 0 0

wwn-0x5000c500 ONLINE 0 0 0

wwn-0x5000c500 ONLINE 0 0 0

wwn-0x5000c500 ONLINE 0 0 0

raidz1-2 ONLINE 0 0 0

wwn-0x5000c500 ONLINE 0 0 0

wwn-0x5000c500 ONLINE 0 0 0

wwn-0x5000c500 ONLINE 0 0 0

wwn-0x5000c500 ONLINE 0 0 0

wwn-0x5000c500 ONLINE 0 0 0

WRITE: bw=805MiB/s (844MB/s)

READ: bw=2122MiB/s (2225MB/s)

Raidz2 (aka Raid 6)

NAME STATE READ WRITE CKSUM

tank ONLINE 0 0 0

raidz2-0 ONLINE 0 0 0

sda ONLINE 0 0 0

sdc ONLINE 0 0 0

sdd ONLINE 0 0 0

sde ONLINE 0 0 0

sdf ONLINE 0 0 0

sdg ONLINE 0 0 0

sdh ONLINE 0 0 0

sdi ONLINE 0 0 0

sdj ONLINE 0 0 0

sdk ONLINE 0 0 0

sdl ONLINE 0 0 0

sdm ONLINE 0 0 0

sdn ONLINE 0 0 0

sdo ONLINE 0 0 0

sdp ONLINE 0 0 0

WRITE: bw=315MiB/s (330MB/s)

READ: bw=2205MiB/s (2312MB/s)

Temperatures

/dev/sda: SPCC Solid State Disk: 33°C

/dev/sdb: SPCC Solid State Disk: 33°C

/dev/sdc: SEAGATE ST4000NM0023: 49°C

/dev/sdd: SEAGATE ST4000NM0023: 60°C

/dev/sde: SEAGATE ST4000NM0023: 58°C

/dev/sdf: SEAGATE ST4000NM0023: 60°C

/dev/sdg: SEAGATE ST4000NM0023: 56°C

/dev/sdh: SEAGATE ST4000NM0023: 55°C

/dev/sdi: SEAGATE ST4000NM0023: 55°C

/dev/sdj: SEAGATE ST4000NM0023: 51°C

/dev/sdk: SEAGATE ST4000NM0023: 56°C

/dev/sdl: SEAGATE ST4000NM0023: 55°C

/dev/sdm: SEAGATE ST4000NM0023: 55°C

/dev/sdn: SEAGATE ST4000NM0023: 48°C

/dev/sdo: SEAGATE ST4000NM0023: 53°C

/dev/sdp: SEAGATE ST4000NM0023: 51°C

Another thing I was concerned with is cooling. I want to make sure the drives are not overheating under use. When I was testing with the case open, I was seeing raised temperatures. After closing up the case and installing the fan wall, I am seeing workable temperatures.
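If you want to keep an eye on readings like the ones above, a small Python sketch along these lines could parse them and flag the warmest drives. It assumes the text is in the same `device: model: temperature` format as the list above. The function names and the 60°C limit are hypothetical choices for illustration, not a vendor specification.

```python
import re

# Matches lines such as "/dev/sdd: SEAGATE ST4000NM0023: 60°C"
LINE_RE = re.compile(r"^(?P<dev>/dev/\w+): (?P<model>.+): (?P<temp>\d+)°C$")


def parse_temps(text):
    """Return {device: (model, temperature_c)} for every line that matches."""
    readings = {}
    for line in text.splitlines():
        match = LINE_RE.match(line.strip())
        if match:
            readings[match["dev"]] = (match["model"], int(match["temp"]))
    return readings


def hot_drives(readings, limit_c):
    """Return the devices at or above limit_c, sorted by device name."""
    return sorted(dev for dev, (_, temp) in readings.items() if temp >= limit_c)


# A few of the readings from this post, used as sample input.
SAMPLE = """\
/dev/sda: SPCC Solid State Disk: 33°C
/dev/sdc: SEAGATE ST4000NM0023: 49°C
/dev/sdd: SEAGATE ST4000NM0023: 60°C
/dev/sdf: SEAGATE ST4000NM0023: 60°C
"""

if __name__ == "__main__":
    readings = parse_temps(SAMPLE)
    # 60 is a hypothetical alert limit chosen for this example.
    print(hot_drives(readings, limit_c=60))
```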

Compression

ZFS has fantastic compression support. Traditionally I would recommend not using compression on a file system, but the way ZFS does it can actually improve performance. By compressing data, you trade some CPU time for a reduction in the amount of data written to disk. Seeing as the CPU is far faster than spinning disks, this tradeoff works in our favor. ZFS takes this one step further and will give up on compressing data if it isn't getting a good ratio. This typically happens with data that has already been compressed or that simply does not compress well, and it avoids wasting CPU cycles on it.
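The "only keep it if it is worth it" idea can be sketched in a few lines of Python. This is not how ZFS is implemented: it uses `zlib` as a stand-in compressor, the 12.5% minimum saving is an assumption used for illustration, and `store_block` is a hypothetical name. Unlike the behavior described above, this sketch always runs the compressor and only then decides, so it shows the ratio check rather than the CPU saving.

```python
import os
import zlib
from typing import Tuple

# Assumption for this sketch: keep the compressed form only if it
# saves at least 12.5% of the original block size.
MIN_SAVING = 0.125
BLOCK_SIZE = 128 * 1024  # 128K, matching the block size used in the tests


def store_block(block: bytes) -> Tuple[str, bytes]:
    """Return ("compressed", data) or ("raw", data) for a single block."""
    compressed = zlib.compress(block, 1)  # level 1: fast, lighter compression
    if len(compressed) <= len(block) * (1 - MIN_SAVING):
        return "compressed", compressed
    # Not worth it: store the original bytes untouched.
    return "raw", block


if __name__ == "__main__":
    text_block = (b"the quick brown fox " * 7000)[:BLOCK_SIZE]
    random_block = os.urandom(BLOCK_SIZE)  # stands in for already-compressed data

    for label, block in (("text", text_block), ("random", random_block)):
        kind, stored = store_block(block)
        print(f"{label}: stored {kind}, {len(block)} -> {len(stored)} bytes")
```

Repetitive text shrinks dramatically and is kept compressed, while random bytes (a reasonable proxy for video, archives, or encrypted data) come out no smaller and are stored as-is.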

Conclusion

Performance on Raid 0 is pretty impressive: 1.4GB/s writes and 2.2GB/s reads on mechanical disks. I would never trust my data on this; I was merely testing what I can get out of the hardware.

The striped raidz performance was still very good and was around what I expected out of the hardware.

Raidz2 has fantastic read performance but the write performance is severely reduced due to the double parity.

These tests used sequential IO with a 128K block size and represent ideal conditions, not typical usage. I am mostly looking to see whether everything works well together and to identify any bottlenecks.
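To put the three results side by side, here is a small Python sketch that expresses each layout's throughput as a percentage of the Raid 0 baseline. The numbers are the MiB/s figures from the test output above; the dictionary layout and the `relative` helper are just one illustrative way to arrange them.

```python
# Sequential throughput in MiB/s, copied from the test results in this post.
RESULTS_MIB_S = {
    "Stripe (Raid 0)": {"write": 1418, "read": 2248},
    "Striped Raidz (Raid 50)": {"write": 805, "read": 2122},
    "Raidz2 (Raid 6)": {"write": 315, "read": 2205},
}
BASELINE = "Stripe (Raid 0)"


def relative(layout: str, op: str) -> int:
    """Throughput of `layout` for `op` as a whole percentage of the baseline."""
    return round(100 * RESULTS_MIB_S[layout][op] / RESULTS_MIB_S[BASELINE][op])


if __name__ == "__main__":
    for layout in RESULTS_MIB_S:
        print(
            f"{layout}: write {relative(layout, 'write')}%, "
            f"read {relative(layout, 'read')}% of Raid 0"
        )
```

Reads stay within a few percent of the stripe in every layout, while writes drop to roughly 57% for striped Raidz and 22% for Raidz2.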

I am going to do some more testing, and then do the final OS install on Raid 1. I am currently using a single SSD disk for testing. I will also need to install Samba to allow me to use Windows File Sharing. I then want to test performance using local Virtual Machines.

NAS 2019 Build Series

- Building A New Nas

- NAS Build 2019 Step 1 - Ordering Parts

- NAS Build 2019 Step 2 - First parts delivery

- NAS Build 2019 Step 3 - Fan Upgrades

- NAS Build 2019 Step 4 - Power Supply Installation

- NAS Build 2019 Step 5 - Final Parts Delivery

- NAS Build 2019 Step 6 - CPU Installation

- NAS Build 2019 Step 7 - Hard Disks!

- NAS Build 2019 Step 8 - Firmware Updates

- NAS Build 2019 Step 9 - OS Install & Initial Testing

You got any opinions on using partitions in a raid for like swap or the like? Always wondered. Could probably script it to recover from a drive failure...but probably would make the system slightly less stable, since it might crash when the swap went down.

I always wondered about what kinda use cases you might use that kinda speed for that you didn't necessarily need to worry about losing the data. Like a cache or something. Maybe using it like a ram disk or something.

I prefer to keep my swap on my root SSDs and not on an array.

For my witness nodes and my full node I use Raid 0 NVMe with swap files (I like them over partitions as they are more flexible), as everything is 100% replaceable and speed is far more important.

For these nodes, I am trying to get as close to ram performance as possible, so speed is critical.

Ehh, I've had a few flash drives fail on me in the past and cost me time at crucial moments, so I try to avoid using swap on them. It's a bit annoying, but I'd rather have a slightly slower system than have to restore data when on a deadline. Of course, if I mirrored them, it wouldn't be quite as critical, but then I'd still have to potentially buy a new drive suddenly, or risk losing data when one inevitably fails.

By flash you mean ssd? Under normal use SSD and NVMe can easily last 10-20 years. Especially newer ones and Pro series.

Yeah, mostly flash based SATA SSDs.

I've had a few normal consumer ones fail on me, from a few different major brands. Usually in less than a year. Literally every one I've owned has failed in less than 3 years. Well, not considering the ones I've bought recently that are less than a year or two old. They're supposedly getting better though, so I'm hoping I won't have as bad luck in the future.

Maybe a geek power user isn't considered normal use though. I probably use my computer more than double the time most people do. And I don't just use a browser. I'm constantly compiling and such.

uggg - when my coins moon im gonna build a nice server myself (raid 5 for me)

Wonderful but complex

PoE = Power Over Ethernet

definitely must be tired, fixed it thanks.
