It was hailed as the most significant test of machine intelligence since Deep Blue defeated Garry Kasparov in chess nearly 20 years ago. Google’s AlphaGo has won two of the first three games against grandmaster Lee Sedol in a Go tournament, showing the dramatic extent to which AI has improved over the years. That fateful day when machines finally become smarter than humans has never appeared closer, yet we seem no closer to grasping the implications of this epochal event.

Indeed, we’re clinging to some serious—and even dangerous—misconceptions about artificial intelligence. Late last year, SpaceX co-founder Elon Musk warned that AI could take over the world, sparking a flurry of commentary both in condemnation and support. For such a monumental future event, there’s a startling amount of disagreement about whether or not it’ll even happen, or what form it will take. This is particularly troubling when we consider the tremendous benefits to be had from AI, and the possible risks. Unlike any other human invention, AI has the potential to reshape humanity, but it could also destroy us.

It’s hard to know what to believe. But thanks to the pioneering work of computational scientists, neuroscientists, and AI theorists, a clearer picture is starting to emerge. Here are the most common misconceptions and myths about AI.

Myth: “We will never create AI with human-like intelligence.”

Reality: We already have computers that match or exceed human capacities in games like chess and Go, stock market trading, and conversations. Computers and the algorithms that drive them can only get better, and it’ll only be a matter of time before they excel at nearly any human activity.

NYU research psychologist Gary Marcus has said that “virtually everyone” who works in AI believes that machines will eventually overtake us: “The only real difference between enthusiasts and skeptics is a time frame.” Futurists like Ray Kurzweil think it could happen within a couple of decades, while others say it could take centuries.

AI skeptics are unconvincing when they say it’s an unsolvable technological problem, and that there’s something intrinsically unique about biological brains. Our brains are biological machines, but they’re machines nonetheless; they exist in the real world and adhere to the basic laws of physics. There’s nothing unknowable about them.

Myth: “Artificial intelligence will be conscious.”

Reality: A common assumption about machine intelligence is that it’ll be conscious, meaning that it’ll actually think the way humans do. What’s more, critics like Microsoft co-founder Paul Allen believe that we’ve yet to achieve artificial general intelligence (AGI), i.e. an intelligence capable of performing any intellectual task that a human can, because we lack a scientific theory of consciousness. But as Imperial College London cognitive roboticist Murray Shanahan points out, we should avoid conflating these two concepts.

“Consciousness is certainly a fascinating and important subject—but I don’t believe consciousness is necessary for human-level artificial intelligence,” he told Gizmodo. “Or, to be more precise, we use the word consciousness to indicate several psychological and cognitive attributes, and these come bundled together in humans.”

It’s possible to imagine a very intelligent machine that lacks one or more of these attributes. Eventually, we may build an AI that’s extremely smart, but incapable of experiencing the world in a self-aware, subjective, and conscious way. Shanahan said it may be possible to couple intelligence and consciousness in a machine, but that we shouldn’t lose sight of the fact that they’re two separate concepts.

And just because a machine passes the Turing Test—in which a computer is indistinguishable from a human—that doesn’t mean it’s conscious. To us, an advanced AI may give the impression of consciousness, but it will be no more aware of itself than a rock or a calculator.

Myth: “We should not be afraid of AI.”

Reality: In January, Facebook founder Mark Zuckerberg said we shouldn’t fear AI, arguing that it will do an amazing amount of good in the world. He’s half right: we’re poised to reap tremendous benefits from AI, from self-driving cars to the creation of new medicine, but there’s no guarantee that every instantiation of AI will be benign.

A highly intelligent system may know everything about a certain task, such as solving a vexing financial problem or hacking an enemy system. But outside of these specialized realms, it would be grossly ignorant and unaware. Google’s DeepMind system is proficient at Go, but it has no capacity or reason to investigate areas outside of this domain.

Many of these systems may not be imbued with safety considerations. A good example is the powerful and sophisticated Stuxnet virus, a weaponized worm widely attributed to the US and Israel and built to infiltrate and sabotage Iranian nuclear facilities. This malware reportedly managed (either deliberately or accidentally) to infect a Russian nuclear power plant as well.

There’s also Flame, a program used for targeted cyber espionage in the Middle East. It’s easy to imagine future versions of Stuxnet or Flame spreading afar and wreaking untold damage on sensitive infrastructure. [Note: For clarification, these viruses are not AI, but in future they could be imbued with intelligence, hence the concern.]

Myth: “Artificial superintelligence will be too smart to make mistakes.”

Reality: Richard Loosemore, an AI researcher and the founder of Surfing Samurai Robots, thinks that most AI doomsday scenarios are incoherent. He argues that these scenarios always assume the AI is supposed to say, “I know that destroying humanity is the result of a glitch in my design, but I am compelled to do it anyway.” Loosemore points out that if the AI behaves like this when it thinks about destroying us, it would have been committing such logical contradictions throughout its life, thus corrupting its knowledge base and rendering itself too stupid to be harmful. He also asserts that people who say that “AIs can only do what they are programmed to do” are guilty of the same fallacy that plagued the early history of computers, when people used those words to argue that computers could never show any kind of flexibility.

Peter McIntyre and Stuart Armstrong, both of whom work out of Oxford University’s Future of Humanity Institute, disagree, arguing that AIs are largely bound by their programming. They reject both the idea that AIs will be incapable of making mistakes and the converse idea that they’ll be too dumb to know what we’re expecting from them.

“By definition, an artificial superintelligence (ASI) is an agent with an intellect that’s much smarter than the best human brains in practically every relevant field,” McIntyre told Gizmodo. “It will know exactly what we meant for it to do.” McIntyre and Armstrong believe an AI will only do what it’s programmed to, but if it becomes smart enough, it should figure out how this differs from the spirit of the law, or what humans intended.

McIntyre compared the future plight of humans to that of a mouse. A mouse has a drive to eat and seek shelter, but this goal often conflicts with humans who want a rodent-free abode. “Just as we are smart enough to have some understanding of the goals of mice, a superintelligent system could know what we want, and still be indifferent to that,” he said.

Myth: “We will be destroyed by artificial superintelligence.”

Reality: There’s no guarantee that AI will destroy us, or that we won’t find ways to control and contain it. As AI theorist Eliezer Yudkowsky said, “The AI does not hate you, nor does it love you, but you are made out of atoms which it can use for something else.”

In his book Superintelligence: Paths, Dangers, Strategies, Oxford philosopher Nick Bostrom wrote that true artificial superintelligence, once realized, could pose a greater risk than any previous human invention. Prominent thinkers like Elon Musk, Bill Gates, and Stephen Hawking (the last of whom warned that AI could be our “worst mistake in history”) have likewise sounded the alarm.

McIntyre said that for most goals an artificial superintelligence could possess, there are some good reasons to get humans out of the picture.

“An AI might predict, quite correctly, that we don’t want it to maximize the profit of a particular company at all costs to consumers, the environment, and non-human animals,” McIntyre said. “It therefore has a strong incentive to ensure that it isn’t interrupted or interfered with, including being turned off, or having its goals changed, as then those goals would not be achieved.”

Unless the goals of an ASI exactly mirror our own, McIntyre said it would have good reason not to give us the option of stopping it. And given that its level of intelligence greatly exceeds our own, there wouldn’t be anything we could do about it.

But nothing is guaranteed, and no one can be sure what form AI will take, and how it might endanger humanity. As Musk has pointed out, artificial intelligence could actually be used to control, regulate, and monitor other AI. Or, it could be imbued with human values, or an overriding imposition to be friendly to humans.

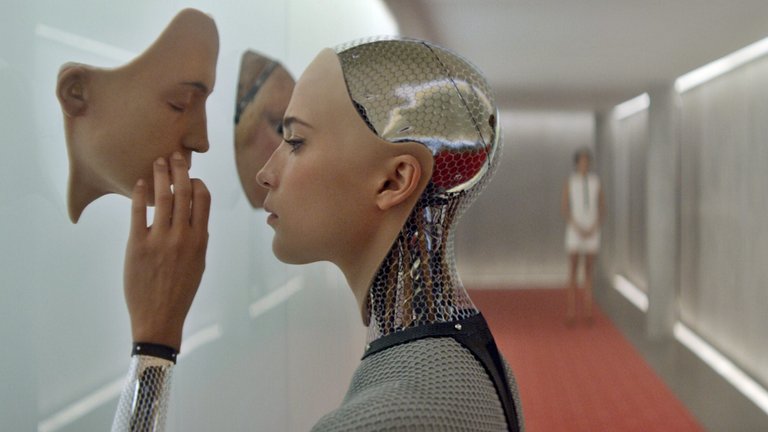

Myth: “AIs in science fiction are accurate portrayals of the future.”

Reality: Sure, sci-fi has been used by authors and futurists to make fantastic predictions over the years, but the event horizon posed by ASI is a horse of a different color. What’s more, the very unhuman-like nature of AI makes it impossible for us to know, and therefore predict, its exact nature and form.

For sci-fi to entertain us puny humans, most “AIs” need to be similar to us. “There is a spectrum of all possible minds; even within the human species, you are quite different to your neighbor, and yet this variation is nothing compared to all of the possible minds that could exist,” McIntyre said.

Most sci-fi exists to tell a compelling story, not to be scientifically accurate. Thus, conflict in sci-fi tends to be between entities that are evenly matched. “Imagine how boring a story would be,” Armstrong said, “where an AI with no consciousness, joy, or hate, ends up removing all humans without any resistance, to achieve a goal that is itself uninteresting.”

