Almost every new technology that makes you wonder "how do they know that?" or "how do they do this?" deploys deep learning algorithms, the secret sauce of artificial intelligence.

Among many, many other things, here is a short and sweet list of some impressive tasks that deep learning accomplishes or contributes to:

- translate between languages

- recognise emotions in speech and faces

- drive

- speak

- spot cancer in tissue slides better than human pathologists

- sort cucumbers

- predict social unrest 5 days before it happens (highly interesting; read more about it here)

- trade stocks

- predict outcomes of cases of European Court of Human Rights with ~80% accuracy (read)

- beat 75% of Americans in visual intelligence tests (read)

- beat the best human players in many games, including chess and Go

- paint a pretty good van Gogh (read)

- play soccer badly (read)

- write its own machine learning software (read)

Although artificial intelligence is already very advanced at many tasks, it is still in its early days, so it remains an understandable technology that it is not too late to learn.

If you would like a basic understanding of how deep learning works in general, I recommend reading this blog post series. This post is the second part of the series.

To read the first introductory part, click here.

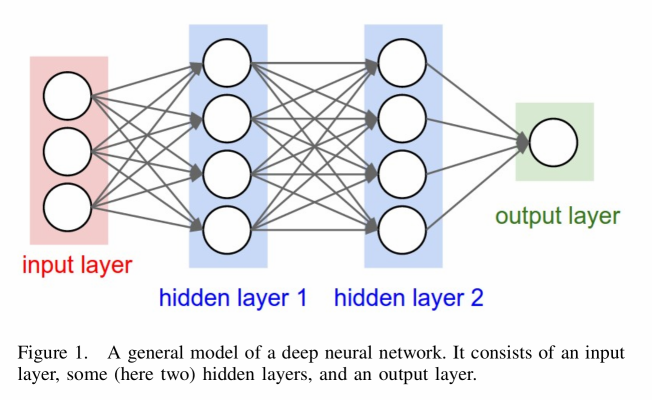

In general, deep learning (DL) is a field of artificial intelligence (AI) that uses several processing steps, also known as layers, to learn and subsequently recognize patterns in data. These methods have dramatically improved the state of the art in speech recognition, visual object recognition, and many other domains.

3. Deep Learning Convolutional Neural Networks

DL architectures called convolutional neural networks (CNNs) are especially interesting because they have brought about breakthroughs in processing video and images. At the same time, they are relatively straightforward to understand. Their quick off-the-shelf use has been enabled by open source libraries such as TensorFlow and Theano.

The founding father of CNNs is Yann LeCun, who first published them in 1989 but had been working on them since 1982. Like every deep neural network, CNNs consist of multiple different layers, which are the processing steps.

The specialty here is the convolutional layer, in which the processing units respond to overlapping regions of the visual field. In simple terms, a convolutional layer processes many small regions of, say, an image in parallel, and because neighboring regions overlap, the layer can piece together an understanding of the full image.
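To make the idea of overlapping regions concrete, here is a minimal sketch in plain Python. It is not how TensorFlow implements convolution; the function name `convolve2d`, the tiny 4x4 image, and the 2x2 kernel are all assumptions made up for illustration. Each output value is computed from one small patch of the image, and neighboring patches share pixels.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.

    As in most deep learning libraries, the kernel is not flipped, so strictly
    speaking this is a cross-correlation.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # One processing unit looks at one kh x kw patch.
            # The patch at (i, j) overlaps with the patch at (i, j + 1).
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map


# Hypothetical 4x4 image: black left half (+1), white right half (-1).
image = [
    [1, 1, -1, -1],
    [1, 1, -1, -1],
    [1, 1, -1, -1],
    [1, 1, -1, -1],
]

# Hypothetical 2x2 kernel that responds to a black-to-white change from left to right.
kernel = [
    [1, -1],
    [1, -1],
]

feature_map = convolve2d(image, kernel)
for row in feature_map:
    print(row)
```

The middle column of the feature map lights up because that is where the patches straddle the black-to-white edge. Patches that see only black or only white produce zero.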

The processing units are also known as neurons to further nurture the analogy to a human brain.

To understand the big picture of a DL architecture, I will explain not only how a convolutional layer works, but also the input layer, a rectified linear unit (ReLU) layer, a pooling layer, and the output layer.

Later in the blog post series, I go beyond theory and build a CNN using the open source software TensorFlow to run the CIFAR-10 benchmark. It consists of 60,000 small color images in 10 classes, and the task is to assign each image to the correct class.
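To get a feeling for the size of the problem, here is a small back-of-the-envelope calculation. The figures are the ones published with the CIFAR-10 dataset (32x32 pixel RGB images, 10 classes, 50,000 training and 10,000 test images). The variable names are my own.

```python
# Published CIFAR-10 figures; variable names are illustrative.
IMAGE_HEIGHT, IMAGE_WIDTH, CHANNELS = 32, 32, 3
NUM_CLASSES = 10
NUM_TRAIN, NUM_TEST = 50_000, 10_000

# Every image enters the network as this many numbers.
values_per_image = IMAGE_HEIGHT * IMAGE_WIDTH * CHANNELS

total_images = NUM_TRAIN + NUM_TEST
images_per_class = total_images // NUM_CLASSES

# A network that guesses at random is right this often on average.
chance_accuracy = 1 / NUM_CLASSES

print(values_per_image, total_images, images_per_class, chance_accuracy)
```

So each image is a list of 3,072 numbers, and any network worth its salt has to do clearly better than the 10% you would get from guessing.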

Some examples of what the CIFAR-10 dataset looks like:

We will see later how the deep learning network learns by using a simple method called gradient descent. In AI it is possible to measure how well or how badly a network has learned, and we will also see how the loss gradually decreases with every bit the network learns. The loss tells us how wrong the network currently is.
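As a small preview, here is a minimal sketch of gradient descent in plain Python. It does not train a CNN. It fits a single weight `w` so that `w * x` matches some toy data, and the data, the learning rate, and the number of steps are all assumptions chosen for illustration. The point is only to show the loss shrinking step by step.

```python
def loss(w, data):
    """Mean squared error: how wrong the model w * x currently is."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def gradient(w, data):
    """Derivative of the loss with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in data) / len(data)


# Toy data following y = 2 * x, so the ideal weight is 2.
data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]

w = 0.0               # start with a bad guess
learning_rate = 0.05  # assumed step size
losses = []

for step in range(20):
    losses.append(loss(w, data))
    # Move w a small step in the direction that reduces the loss.
    w -= learning_rate * gradient(w, data)

print("first loss:", losses[0])
print("last loss: ", losses[-1])
print("learned w: ", w)
```

The loss starts high, drops with every step, and `w` ends up very close to 2. A real network does the same thing, just with millions of weights instead of one.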

A. Input Layer

The nodes of the input layer are passive, meaning they do not modify the data. Each node receives a single value on its input and duplicates that value to its multiple outputs, which are the inputs of the following layer.

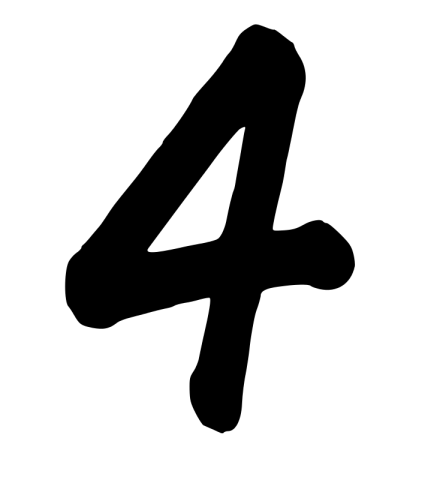

To better describe what values the input layer propagates, let's take a closer look at an image input. For example, if we take an image of a black four on a white background, then one possible way to interpret its values is to translate the input values into the range from −1 to +1. White pixels are then denoted by −1, black pixels by +1, and all other values, like transitions between black and white regions, lie between −1 and +1.
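Here is a minimal sketch of such a mapping in plain Python. It assumes the image is stored as 8-bit grayscale values where 0 is black and 255 is white, which is a common convention but not something this post depends on. The function name `normalize_pixel` is made up for illustration.

```python
def normalize_pixel(value):
    """Map an 8-bit grayscale value to [-1, +1].

    Assumed convention: 0 is black and 255 is white,
    so white becomes -1 and black becomes +1.
    """
    return 1.0 - 2.0 * value / 255.0


# One row of pixels: white, mid-gray (a transition), black.
row = [255, 128, 0]
normalized = [normalize_pixel(p) for p in row]
print(normalized)
```

White maps to −1, black to +1, and the gray transition pixel lands close to 0.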

Since the object's shape carries the most crucial information, that is to say which number it is, the transitional values between black and white are the most interesting in this case. A CNN would deduce a model from the training data that tries to understand the object's shape.

It would be very complex to develop an algorithm that takes all observable pixel values deterministically into account. Numbers, especially when hand-written, can be tilted, stretched and compressed.

NEXT PART: CNNs and their remaining layers - simplified explanation.

I strive to write quality blog posts here on Steemit to really add value to this amazing platform (and to further push the paradigm shift brought about by the blockchain). I hope this format was good for you. If not, leave me a comment and I will work on it.

Further questions? Ask!

I am working on follow-up blog posts, so, stay tuned!

If you liked it so far, then like, resteem and follow me: @martinmusiol !

Thank you!

Wow dude, this is an amazingly detailed post. You did a pretty good job! Keep it up!

Thank you I appreciate it. :)

@martinmusiol nice post. I came here after you commented on my post (https://steemit.com/technology/@braveheart22/the-history-of-microsoft-technology). I upvoted and followed. Please be kind and do the same.

I have a nice post about Sophia too! It is amazing as well: https://steemit.com/technology/@braveheart22/meet-the-sophia-robot-the-humanoid

See you soon !

@braveheart22

Thank you .. cool, I will check it out!

Nice article, but it's very high level. It might be beneficial to add a few words to explain what the weights do, how they are initialized, the different types of activation functions, training with backpropagation, and gradient descent.

For big picture ideas, it might be useful to explain how the early layers "discover" features on their own, what a convolution does to the data, what max-pooling does, and how softmax can be used. Looking forward to your future blog posts. :)

Thanks @terenceplizga for your feedback. In my further posts I will actually talk about it. :)

Regarding the high-level language, I agree. I decided to write like this because the Steemit community for this topic area is not very large (unlike the one for cryptocurrencies). I will introduce the jargon gradually.

Thank you and kind regards,

@martinmusiol

Sounds good, @martinmusiol. I'll have to start following you; I look forward to your posts. I also hope you like my posts on this topic as well. I suspect you're right about other topics (crypto) having bigger followings on Steemit. But maybe we can both get others excited about AI and machine learning, and give the crypto crowd some competition! :)

Congratulations @martinmusiol, this post is the fourth most rewarded post (based on pending payouts) in the last 12 hours written by a Newbie account holder (accounts that hold between 0.01 and 0.1 Mega Vests). The total number of posts by newbie account holders during this period was 1265 and the total pending payments to posts in this category was $1218.43. To see the full list of highest paid posts across all account categories, click here.

If you do not wish to receive these messages in future, please reply stop to this comment.

I just found this article through a comment. I can say this is a good, detailed post, and it is worth reading the follow-up posts to understand more about artificial intelligence.

Thank you @turpsy. I appreciate your words.

I am happy to read your informative posts as well.

:)

kr, @martinmusiol

Great post! Looking forward to the next part. Following.

Any thoughts on how AI is and could be used to mitigate cybersecurity risks?

@mrosenquist, one way would be to implement a machine learning algorithm for anomaly detection. It would learn normal usage on your system/network via metrics of various resources (e.g., percentage of CPU used, number of processes/threads, number of file sockets open, number of database connections open). Then, if malware does make it past your firewall, your AI would figure out that there's "unusual behavior" (e.g., burst activity like sending out an unusually large number of emails--typical when the malware turns your system into a bot). For more on the subject, please see https://en.wikipedia.org/wiki/Anomaly_detection.

@terenceplizga I definitely follow you now. Do you write about this topic area as well?

Thanks, Martin. Yes ... just started blogging on machine learning recently. I've been doing research in the area for a while now, but I'm new to the whole blogging thing.

I know several companies working to apply AI for anomaly detection in this way. But such detection techniques have been attempted for over a decade with little success. I have not seen any indication that the application of AI will push the success rate much further. The problem lies in overcoming the crossover rate of false positives and false negatives. Then there is the longer-term risk of attackers figuring out how the AI makes its decisions and then maneuvering to poison the learning or simply working beyond the learned bounds.

Yes, I do. There are lots of aspects to this topic. Do you have something concrete in mind?

Areas where the application of AI could improve the practical management of cyber risks. For example, in the Prediction, Prevention, Detection, and Response to threats.

Just interested in your thoughts, what you are hearing in the industry, from latest ideas from luminaries, etc.