It's Science Fair time again. Today we experimented with variations of a very simple reinforcement learning algorithm (Q learning), changing the available actions and the trade-off between exploration (taking a random action) and exploitation (taking the best learned action). Our bearded dragon was happy to help, but was a bit leery of the whirring Lego Mindstorms EV3 robot.

The robot currently uses one touch sensor (just behind the pivoting bumper) to sense when it hits something and an infrared sensor to detect distance. The infrared sensor is not very precise. Sometimes it reports that it is close to an object when it is actually far away and vice versa, even when the sensor and the obstacle are not moving. To correct for this we ordered an ultrasonic sensor that is supposed to be more precise and more accurate.

The robot learns by constantly updating a table of expected long-term reward values indexed by its sensor data and the possible actions it can take. If it moves forward it gets 1 point, a forward turn to the right or left gets 0.5 points, and hitting an obstacle gets -5 points. Everything else scores 0.
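For readers who want to see the idea in code, here is a minimal sketch of the scoring rule and the standard one-step Q learning update. It is not the project's actual code: the action names, the learning rate `alpha`, the discount `gamma`, and the choice to let the -5 penalty override the movement reward are all assumptions made for illustration.

```python
from collections import defaultdict

# Hypothetical action names; the robot's real action set may differ.
ACTIONS = ["forward", "forward_left", "forward_right", "reverse"]


def reward(action, bumped):
    """Score one step: -5 for a hit, 1 for forward, 0.5 for a forward turn, else 0.

    Assumption: a collision overrides whatever the movement would have earned.
    """
    if bumped:
        return -5.0
    if action == "forward":
        return 1.0
    if action in ("forward_left", "forward_right"):
        return 0.5
    return 0.0


def make_q_table():
    """Q table keyed by state; every state starts with 0.0 for each action."""
    return defaultdict(lambda: {a: 0.0 for a in ACTIONS})


def q_update(q, state, action, r, next_state, alpha=0.1, gamma=0.9):
    """Standard Q learning update; alpha and gamma are assumed values."""
    best_next = max(q[next_state].values())
    q[state][action] += alpha * (r + gamma * best_next - q[state][action])


q = make_q_table()
state, next_state = (False, 3), (False, 2)  # (touch sensor on?, distance bin)
q_update(q, state, "forward", reward("forward", bumped=False), next_state)
```

Each state is a `(touch, distance)` pair, so the table stays small enough to learn from a modest number of steps.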

Within 1000 trials it can learn to avoid obstacles, but it clearly isn't approaching optimal behavior. My daughter's experiment will likely be to explore a variety of parameters to see how they affect the quality of the learned behavior, measured as the total reward over a number of action steps (probably 1500 to 2000) and the average reward over the last 100 steps. We are not sure of the exact experiment yet. She keeps coming up with different ideas to try.
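The two quality measures mentioned above are easy to compute from a log of per-step rewards. This is only a sketch; the function name `summarize` and the idea of keeping the rewards in a plain list are assumptions, not part of the project's code.

```python
def summarize(rewards, window=100):
    """Return (total reward, average reward over the last `window` steps)."""
    total = sum(rewards)
    tail = rewards[-window:]
    average_last = sum(tail) / len(tail) if tail else 0.0
    return total, average_last


# Made-up log: 100 steps scoring 0 followed by 100 steps scoring 1.
total, average_last = summarize([0.0] * 100 + [1.0] * 100)
```

Comparing the total with the late-run average helps separate "learned quickly" from "ended up with good behavior".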

The learning algorithm is designed to run in one of two ways: directly on the robot or on a laptop using remote procedure calls. Instead of using Lego's built-in operating system on the robot, we installed a version of Linux designed for the Lego EV3 robot (ev3dev) and wrote the code in Python. ev3dev is really easy to install, because instead of replacing the Lego OS, it runs off a micro SD card that you pop into the EV3. With the card inserted, the EV3 will automatically boot up into Debian Linux, ready to run Python with a package that makes it easy to use all of the motors and sensors in the EV3.

Of course, like all things Linux, it took dad (me!) hours to get this all set up, what with all the different versions of each package, the need to use a remote shell over Bluetooth to install packages on the robot, etc. But now that it is all done it works quite well.

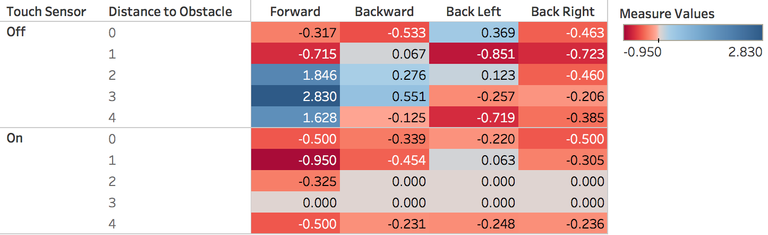

Here is an example of the lookup table (called the Q table) that the robot learned after 1000 steps with exploratory behavior slowly decreasing from a 100% chance of making a random action in the first step to just under a 10% chance by step 1000.
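One way to get an exploration rate that starts at 100% and falls to just under 10% by step 1000 is a simple exponential decay. The sketch below assumes that shape and a decay constant of 0.9977 chosen to hit those endpoints; the schedule actually used on the robot may be different, and `pick` is a hypothetical helper.

```python
import random

DECAY = 0.9977  # assumed constant: 0.9977 ** 1000 is just under 0.10


def epsilon(step):
    """Probability of taking a random action at a given step (step 0 is the first)."""
    return DECAY ** step


def pick(step, actions, greedy_action, rng=random):
    """Explore with probability epsilon(step), otherwise take the best known action."""
    if rng.random() < epsilon(step):
        return rng.choice(actions)
    return greedy_action
```

A slow decay like this lets the robot try many actions early on, then lean more and more on what it has learned.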

Recall that the robot's view of the world is just the state of its sensors: whether the touch sensor is on (hitting something) or off, and the distance reported by the infrared (IR) sensor. The IR sensor reports distance from 0 to 100, with 0 being closest to an object and 100 at around 70 cm away. I rescaled this to 5 distances (0 to 4), because otherwise the robot would have to learn a much bigger table, which would require many, many more trials.
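The rescaling could look something like the sketch below, which assumes five equal-width bins of 20 IR units each; the exact bin boundaries used on the robot are not stated here, and `distance_bin` is a hypothetical name.

```python
NUM_BINS = 5
MAX_READING = 100


def distance_bin(ir_reading, num_bins=NUM_BINS, max_reading=MAX_READING):
    """Map an IR reading in 0..100 to a coarse distance 0..num_bins-1 (0 is closest)."""
    clamped = max(0, min(int(ir_reading), max_reading))
    bin_width = max_reading // num_bins  # 20 units per bin, assuming equal widths
    return min(clamped // bin_width, num_bins - 1)


# With 2 touch values and 5 distance bins there are only 10 states to learn.
states = [(touch, d) for touch in (False, True) for d in range(NUM_BINS)]
```

Without the rescaling there would be 2 x 101 = 202 states instead of 10, each needing many visits before its values mean anything.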

The table tells the robot its expected long-term reward of taking each action in each possible state. The robot wants to maximize its reward, so actions with bigger numbers (shown in blue) are better. To find the best action, the robot finds the row that matches its current sensor readings and then finds the action in that row with the highest value. For example, if the touch sensor is off, and the distance to an obstacle is 3, the table tells the robot that moving Forward is the best choice with an expected reward of 2.83. All other actions in that state have lower expected values.
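The lookup described above amounts to "find the row, take the biggest number". Here is a small sketch of it. Only the Forward value of 2.83 comes from the table; the other numbers in the row and the action names are made-up placeholders for illustration.

```python
# One row of a Q table, keyed by (touch sensor, distance bin).
# Only Forward = 2.83 is from the post; the other values are placeholders.
q_table = {
    ("off", 3): {"Forward": 2.83, "Left": 1.90, "Right": 2.10, "Reverse": 0.75},
}


def best_action(q_table, touch, distance):
    """Return the highest-valued action for the current sensor state, with its value."""
    row = q_table[(touch, distance)]
    action = max(row, key=row.get)
    return action, row[action]


action, value = best_action(q_table, "off", 3)
```

If two actions tie, `max` returns whichever comes first in the row, which is one simple (but not the only) way to break ties.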

The table also shows limitations with the robot's sensors. If you look at the touch sensor On part of the table, you'll see that there are values where the IR sensor indicated it was 4 units from an obstacle; however, if it was that far, the touch sensor shouldn't be on. This is a limitation of the robot's ability to sense its environment. If the robot is moving along a wall, but is slightly pointed toward the wall, its front bumper can catch the wall and trigger the touch sensor, while the IR sensor is still pointing much further down the wall and measuring a much larger distance. Soon we plan to test replacing the IR sensor with the more precise and accurate Lego Ultrasonic Sensor.

You can also see several cells with 0.000, meaning the robot never took that action in that state. In fact, since the row for Touch = On and Distance = 3 is all 0's, the robot was never in that state.

Overall, it's very exciting to see such a simple robot learn by doing. Although the Q learning algorithm is fairly old, it is still surprisingly useful. DeepMind's Atari-playing system used a much more advanced version of this same algorithm (called Deep Q Network) to learn to play a large number of different Atari 2600 games given only the screen pixels and score as input.

This is a semester-long project, so I'll be posting more updates as we move through it.

Todd

That's a nifty project and using the Q learning to work at the obstacle avoidance for a robot. I haven't delved too much into AI but I would love to build a small AI robot similar to this, or to do more.

I've put all the code up on GitHub, but it is not yet ready for others to use. I've got to finish up a few things and clean up the code, then V1 will be ready and I will announce it. ev3dev runs on a number of inexpensive robots, so you are not just limited to the EV3, though the code would likely need some changes to work with others.

That's an interesting project. I never would've come up with that!

Oh man, I would love to do something similar to this with my daughter when she is older... I recently bought a kiddies Arduino kit, I'm hoping that that will help spark some interest!

ev3dev runs on a few other platforms, but it doesn't look like Arduino is supported. However, I believe there are Python packages for Arduino, along with the hardware to make the simple kind of robot needed for something like this.

Ha, my daughter isn't up to that level of skill yet (she is only 6!), more that the Arduino board is for playing around and building with circuit boards and bits and pieces! She did manage to solder a little electronic thing when she visited an open lab day... So I think it is the sort of thing that would intrigue her!

A good excuse for me to learn python though...

howdy sir toddrjohnson! well sir..this post was way too technical for me but I saw your name on steemitbloggers and picked you out for a visit.

good post, I just didn't understand it!

It's pretty geeky, but my degree (from way back) is in Artificial Intelligence, so I love these sorts of things. I am going to try to write a very simple demonstration of how this learning works using a much simpler example.

haha! well sir it was very impressive but a "redneck" version is probably a good idea as well! lol. do you work full time outside the home and if you do, is it in some type of technical work?

Never had the patience, or inclined parents, to do this sort of thing when I was a kid but I always wanted to.

I wanted to do this kind of thing, but where I grew up we didn't have science fair. I heard about one when I was in 5th or 6th grade and asked my teacher if I could do it. She said, "Oh, we don't do those things here." I was truly disappointed; however, this was after I asked my teacher how copies were made. She promised to show me and one day she took me to the copier and said "You put the original here and press this button and copies come out." At that point I realized that she didn't have a clue as to how the copies were made, so I just said "Oh, I see!" Honestly, I expected more from my teacher, but it was clear that she didn't know and I didn't want to embarrass her.

Oh man that is an awesome project! I wish I had engaged in more stuff like that when growing up. I suppose it's never too late to start tho right lol. Wicked cool. <333 :)

I'm impressed that this is a science fair project. Great job dad! Way to put your computer skills to a good use. :-) Seriously though, it's awesome to see you going to such a great effort to help make a meaningful experiment. The only science fair projects I remember involved volcanoes...I think... Or maybe I just saw that in a movie once.

Boy, has school/science fair projects changed since my boys were in school. This would have been a way more fun and perhaps even an old dog like their mom could have learned a thing or two! LOL

Wow, this is very impressive! Obstacle avoidance by a robot! Who knew?

Cute photo of your daughter and bearded dragon too!

Way above my head, I will look for redneck version when it comes out, but good looking lizard for sure.

Kudos to you for making science interesting for younger generations...

Wow! This is all a bit over my head, but super cool and impressive!

I used to play around with mindstorms when I was in high school! You're an awesome dad for helping out your daughter with this. I'm sure the father/daughter bonding time will be something she remembers for a while :)

Very interesting @toddrjohnson. So different from my childhood a million years ago...

Found your post on #steemitbloggers

That was really really interesting Todd. I even read it twice #steemitbloggers. Was the new sensor measuring distance better?

I have to admit I don't understand any of the technical speak, but this sounds like something my son would do/build! Looks like a wonderful father-daughter project!