Finally! I finished my Master's dissertation… and now, with nothing to do (well, except find a job… anyone have any leads??), I can have fun again.

And what’s the first thing I do on my “day off” from my project? Welp, START A NEW PROJECT!...

My wife is soooo excited!!... Just kidding, honey. I promise you'll see your husband much more than you did over the past few months.

So towards the end of my project, I got curious about reinforcement learning (specifically Q-learning). Put simply, it's a subfield of machine learning in which a computer learns to accomplish a task through trial and error, receiving “rewards” along the way and trying to maximize its total reward.
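For the curious, here is the standard textbook Q-learning update rule (not anything I've implemented for the drone yet). After taking action $a_t$ in state $s_t$ and receiving reward $r_{t+1}$, the estimate $Q(s_t, a_t)$ of the total future reward is nudged toward that reward plus the discounted value of the best action available from the next state. Here $\alpha$ is the learning rate and $\gamma$ (between 0 and 1) is the discount factor:

$$
Q(s_t, a_t) \leftarrow Q(s_t, a_t) + \alpha \left[ r_{t+1} + \gamma \max_{a'} Q(s_{t+1}, a') - Q(s_t, a_t) \right]
$$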

Just think of it like training a dog. If you're teaching a dog to sit, you only give it a treat when it sits, until it realizes, “Oh, when I sit, I get a treat!” If you wanted the dog to sit, lie down, play dead, then roll over, all in that order, the dog learns to do the tricks in that specific sequence to get its treat. Maybe it gets little treats for each trick along the way, but at the end of the whole sequence it gets a BIG reward. Each trick is an “action”, and after each one the dog is in a new situation, or “state”, as it's called in machine learning; from there, it evaluates which action to take next to get the BIG reward at the end.
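To make the dog analogy a bit more concrete, here's a tiny tabular Q-learning sketch. The “environment” is entirely made up: the state is how many tricks the dog has done in order, doing the right next trick earns a small treat (or the BIG one after rolling over), and a wrong trick ends the session with no treat. The reward values, learning rate, discount factor, and exploration rate are arbitrary assumptions, and the helper names are hypothetical.

```python
import random

# Made-up "trick chain": state i means the dog has done the first i tricks in order.
TRICKS = ["sit", "lie down", "play dead", "roll over"]
N_ACTIONS = len(TRICKS)
N_STATES = len(TRICKS) + 1  # the last state (all tricks done) is terminal


def step(state, action):
    """Return (next_state, reward, done) for doing trick `action` in `state`."""
    if action != state:
        return state, 0.0, True  # wrong trick: no treat, session over
    next_state = state + 1
    done = next_state == len(TRICKS)
    reward = 10.0 if done else 1.0  # little treats along the way, BIG one at the end
    return next_state, reward, done


def train(episodes=1000, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning with an epsilon-greedy policy."""
    rng = random.Random(seed)
    q = [[0.0] * N_ACTIONS for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                action = rng.randrange(N_ACTIONS)  # explore: try a random trick
            else:
                action = max(range(N_ACTIONS), key=lambda a: q[state][a])  # exploit
            next_state, reward, done = step(state, action)
            # Q-learning target: reward now plus discounted best value of the next state
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


def greedy_sequence(q):
    """Follow the learned Q-table greedily and list the tricks performed."""
    state, done, tricks = 0, False, []
    while not done:
        action = max(range(N_ACTIONS), key=lambda a: q[state][a])
        tricks.append(TRICKS[action])
        state, _, done = step(state, action)
    return tricks


print(greedy_sequence(train()))  # → ['sit', 'lie down', 'play dead', 'roll over']
```

Because a wrong trick ends the session with nothing, those Q-values stay at zero, while the BIG final reward gets propagated backward through the discount factor γ into the earlier states. That's the “evaluate which action to take next” part of the analogy.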

To relate this to what I'm trying to do: I want to program a drone to automatically take off from point A and land on point B, but point B won't always be in the exact same spot. Sounds very basic, but the drone needs to locate point B while also not crashing into walls, ceilings, floors, etc. I got the idea from this project here.

I’m imagining one part of this would be giving the drone a way to “see” its environment and identify “point B”, which requires some kind of object detection.

So that’s where I’ve started!

I started playing around with TensorFlow's Object Detection API. It comes with some pre-trained models, and after a day of installing and working through some tutorials, I got it to work. It does basic object classification across 90 different types of objects, like ‘person’, ‘car’, ‘couch’, ‘phone’, etc. Below are some photos I took with my webcam and out my window with my phone, with the object detection applied to them.
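For each image, the detector hands back arrays of boxes, scores, and class IDs, and in practice you only keep the detections above some confidence cutoff. Here's a minimal pure-Python sketch of that filtering step, assuming one image's output dict with the batch dimension already stripped. The 0.5 threshold, the helper names, and the three-entry label map (IDs assumed to match the COCO label map the pre-trained models use) are my own illustrative choices, not the API's code.

```python
# Hypothetical label map subset; IDs assumed to follow the COCO label map the
# pre-trained models use (check the model's label map file before relying on them).
LABELS = {1: "person", 3: "car", 27: "backpack"}


def filter_detections(output, min_score=0.5, labels=LABELS):
    """Keep detections scoring at least `min_score` and attach readable names.

    `output` is assumed to hold one image's results (batch dimension removed):
    'num_detections', 'detection_boxes' (normalized [ymin, xmin, ymax, xmax]),
    'detection_scores', and 'detection_classes'.
    """
    n = int(output["num_detections"])
    kept = []
    for box, score, cls in zip(
        output["detection_boxes"][:n],
        output["detection_scores"][:n],
        output["detection_classes"][:n],
    ):
        if score < min_score:
            continue  # too uncertain to report
        cls = int(cls)  # class IDs may come back as floats
        kept.append({"label": labels.get(cls, f"id {cls}"), "score": float(score), "box": tuple(box)})
    return kept


def to_pixels(box, width, height):
    """Convert a normalized [ymin, xmin, ymax, xmax] box to (left, top, right, bottom) pixels."""
    ymin, xmin, ymax, xmax = box
    return (round(xmin * width), round(ymin * height), round(xmax * width), round(ymax * height))


# Made-up output for illustration only, not from a real run.
fake_output = {
    "num_detections": 3,
    "detection_boxes": [[0.25, 0.5, 0.75, 1.0], [0.1, 0.2, 0.9, 0.4], [0.0, 0.0, 0.1, 0.1]],
    "detection_scores": [0.92, 0.71, 0.20],
    "detection_classes": [3.0, 1.0, 27.0],
}
for det in filter_detections(fake_output):
    print(det["label"], round(det["score"], 2), to_pixels(det["box"], 640, 480))
```

Lowering `min_score` shows more (and shakier) detections; raising it trades missed objects for fewer false alarms.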

I kept this pic a little bigger so you can hopefully see that it identified the cars, person, and backpack.

This is all fine and dandy, except I'm limited to detecting the predefined objects from the pre-trained model. So the next step was to see if I could train a model to identify my own objects.

So I tried to see if it could tell the Chicago Bears logo apart from the Green Bay Packers logo. Why those two? Well, first off, I'm a Bears fan, and second, the two logos are similar in shape (the 'C' versus the 'G') but differ in color. I thought that would be a good test for the machine.

The results were not too bad. It was slightly better at identifying the Green Bay logo, but I think that's because a) I had more Green Bay examples than Chicago ones in the training data, and b) since I was just playing around and wanted to save time, I trained on only 100 images in total. With more images, I think its accuracy would improve.
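One quick way to check hunch a) is to count the examples per class and look at accuracy per class rather than one overall number. Here's a small sketch; the function names are hypothetical, the labels are made up (they are NOT my actual results), and it simplifies things by treating each test image as getting a single predicted logo, whereas the detector really outputs boxes with scores.

```python
from collections import Counter


def class_balance(labels):
    """Count examples per class, to spot imbalanced training data."""
    return Counter(labels)


def per_class_accuracy(y_true, y_pred):
    """Fraction of each true class that the model labeled correctly."""
    totals = Counter(y_true)
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {cls: correct[cls] / totals[cls] for cls in totals}


# Made-up labels for illustration; these are NOT my actual test results.
y_true = ["packers", "packers", "packers", "bears", "bears"]
y_pred = ["packers", "packers", "bears", "bears", "packers"]

print(class_balance(y_true))
print(per_class_accuracy(y_true, y_pred))
```

If one class has noticeably more examples and also scores higher, that's at least consistent with the imbalance explanation, though with this few images it's hard to say for sure.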

Here are a few examples that turned out pretty well...

That's the end of today's post. Next step is to implement this on live/streaming video feeds...


References:

https://pythonprogramming.net/introduction-use-tensorflow-object-detection-api-tutorial/

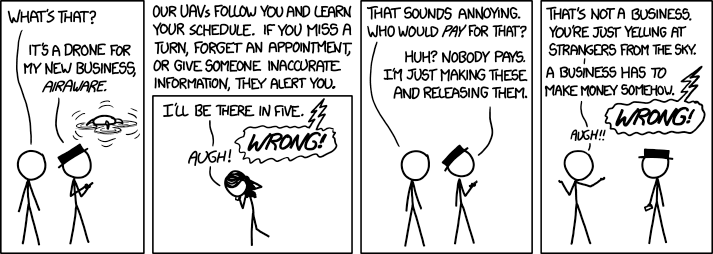

I love that comic.

Congrats on being done with your Masters! It is a lovely feeling of freedom :)

It sure is! Now it's on to writing cover letters and job hunting...