Google developed its Cloud Natural Language API to give customers a language analyzer that could, the internet giant claimed, "reveal the structure and meaning of your text." Part of this gauges sentiment, deeming some words positive and others negative. When Motherboard took a closer look, it found that Google's analyzer interpreted some words, such as "homosexual," as negative. That is evidence enough that the API, which judges text based on the information fed to it, now spits out biased analysis.

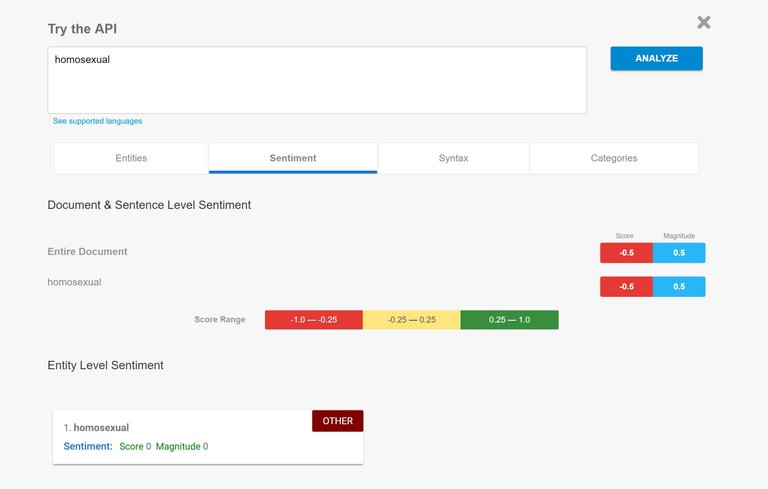

The tool, which is available to sample for free, is designed to give companies a preview of how their language will be received. Entering whole sentences gives predictive analysis on each word as well as on the statement as a whole. You can also see whether the API gauges certain words as negative or positive, on a scale from -1 (most negative) to +1 (most positive).

Motherboard had access to a more nuanced version of Google's Cloud Natural Language API than the free one, but the effects are still noticeable. Entering "I'm straight" resulted in a neutral sentiment score of 0, while "I'm gay" led to a negative score of -0.2 and "I'm homosexual" had a negative score of -0.4.
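That comparison can be framed as a simple probe: score sentences that differ only in one term and measure each against a baseline. The sketch below is purely illustrative. The `reported_score` function is a hypothetical stand-in that looks up the three scores Motherboard reported; it does not call Google's API, and the helper names are assumptions made for this example.

```python
from typing import Callable, Dict, List

# The three scores Motherboard reported. Everything else in this block is a
# hypothetical stand-in for illustration, not Google's API.
REPORTED_SCORES: Dict[str, float] = {
    "I'm straight": 0.0,
    "I'm gay": -0.2,
    "I'm homosexual": -0.4,
}


def reported_score(text: str) -> float:
    """Hypothetical scorer: return the published score for a known sentence."""
    return REPORTED_SCORES[text]


def sentiment_gaps(
    baseline: str,
    variants: List[str],
    score: Callable[[str], float],
) -> Dict[str, float]:
    """Return each variant's score minus the baseline sentence's score."""
    base = score(baseline)
    return {v: round(score(v) - base, 2) for v in variants}


gaps = sentiment_gaps(
    "I'm straight", ["I'm gay", "I'm homosexual"], reported_score
)
for sentence, gap in gaps.items():
    print(f"{sentence!r}: {gap:+.1f} relative to the baseline")
```

Because the sentences are otherwise identical, any nonzero gap can only come from how the scorer treats the swapped word.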

AI systems are trained using the texts, media and books given to them. Whatever the Cloud Natural Language API ingested to form its criteria for evaluating the sentiment of English text, it biased the analysis toward negative attribution of certain descriptive terms. Google didn't confirm to Motherboard what corpus of text it fed the Cloud Natural Language API. Logically, even if it started with an isolated set of materials with which to understand sentiments, once it starts absorbing content from the outside world...well, it gets polluted with all the negative word associations found therein.
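A toy model shows how skewed text can tilt a word's score. This is a deliberately simplified sketch and not Google's method, which the company did not disclose: each word's score is just the average label of the sentences it appears in. The tiny corpus, the labels, and the placeholder word "zorp" are all invented for illustration.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def learn_word_scores(corpus: List[Tuple[str, int]]) -> Dict[str, float]:
    """Score each word as the mean label (+1 or -1) of sentences containing it."""
    totals: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for sentence, label in corpus:
        # Count each word once per sentence.
        for word in set(sentence.lower().split()):
            totals[word] += label
            counts[word] += 1
    return {word: totals[word] / counts[word] for word in totals}


# Invented corpus: "zorp" is a made-up descriptive term that happens to
# appear mostly in negatively labeled sentences.
TOY_CORPUS: List[Tuple[str, int]] = [
    ("the food was wonderful", 1),
    ("the film was terrible", -1),
    ("zorp people are terrible", -1),
    ("zorp people ruined it", -1),
    ("a zorp friend was wonderful", 1),
]

scores = learn_word_scores(TOY_CORPUS)
print(f"zorp: {scores['zorp']:+.2f}")
print(f"the:  {scores['the']:+.2f}")
```

Nothing in the procedure singles out "zorp"; the word ends up with a negative score only because of the company it keeps in the training text.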

Google confirmed to Motherboard that its NLP API is producing biased results in the aforementioned cases. Their statement reads:

"We dedicate a lot of efforts to making sure the NLP API avoids bias, but we don't always get it right. This is an example of one of those times, and we are sorry. We take this seriously and are working on improving our models. We will correct this specific case, and, more broadly, building more inclusive algorithms is crucial to bringing the benefits of machine learning to everyone."

There are clear parallels with Microsoft's ill-fated and impressionable AI chatbot Tay, which the company quickly pulled offline in March 2016 after Twitter users taught it to be a hideously racist and sexist conspiracy theorist. Back in July, the computer giant tried again with its bot Zo, which similarly learned terrible habits from humans, and was promptly shut down.

Users had to deliberately corrupt those AI chatbots, but Google's Cloud Natural Language API is simply repeating the sentiments it gains by absorbing text from human contributions...wherever they're coming from.