I've finally set up my own full-RPC steemd node on a 6-core Xeon server with 256GB of ECC DDR4 RAM, in the same datacenter as SteemData (connected over a private 1Gbit network with sub-1ms latency).

Running a full node adds maintenance work, but it no longer seems avoidable. I hope that this new deployment, combined with @gtg's node as a backup, will improve the speed and reliability of SteemData services.

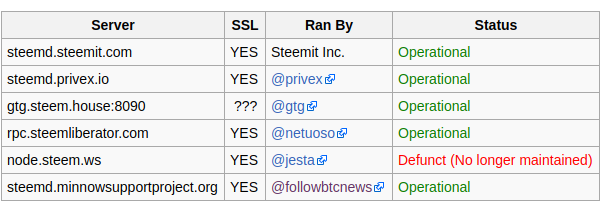

Public Steemd Nodes

Steemit's official nodes have been rock solid over the past month and have served well as the backbone for many of my services. I have also used @gtg's nodes extensively, since they are hosted in the EU.

I am really happy about the proliferation of full steemd RPC nodes run by the community; however, I haven't had a chance to test them extensively yet.

Why Private node?

I currently run three databases as a service and try to keep steemd's internal state synced to the main SteemData database. I'm also syncing the new databases (hive, sbds) from scratch. All in all, I'm currently making millions of requests to steemd instances every day.
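To make that request volume concrete, here is a minimal sketch of the kind of catch-up loop a block indexer runs. It assumes a hypothetical `rpc(method, params)` callable that performs one JSON-RPC round trip to steemd, and uses the steemd calls `get_dynamic_global_properties` and `get_block`; `sync_blocks`, `store` and `batch_limit` are illustrative names, not SteemData's actual code.

```python
from typing import Any, Callable, Dict, List

# Hypothetical transport: one JSON-RPC round trip per call (e.g. to port 8090)
RpcCall = Callable[[str, List[Any]], Any]
# Hypothetical sink that writes one block into the local database
StoreBlock = Callable[[int, Dict[str, Any]], None]


def sync_blocks(rpc: RpcCall, last_synced: int, store: StoreBlock,
                batch_limit: int = 1000) -> int:
    """Fetch blocks after `last_synced`, up to the chain head or `batch_limit` blocks.

    Returns the new local height so the caller can loop until it reaches the head.
    """
    props = rpc("get_dynamic_global_properties", [])
    head = int(props["head_block_number"])
    target = min(head, last_synced + batch_limit)
    for num in range(last_synced + 1, target + 1):
        block = rpc("get_block", [num])  # one network round trip per block
        store(num, block)
    return target
```

Every block costs at least one round trip (and an indexer that also refreshes account state makes further calls per block), so the loop's speed is bounded by network latency rather than by the indexer itself.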

Unfortunately, SteemData servers are located in Germany, which adds a fair amount of network latency when talking to most of the public nodes I tested. The per-request latency, as well as limits on available throughput, caused issues: the database indexers could not keep up with the blockchain head.
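The effect of latency can be estimated with simple arithmetic: when each request waits for the previous reply, the round-trip time (RTT) is paid once per request. The sketch below assumes strictly sequential requests and uses hypothetical RTTs of 30 ms (a remote public node) and 1 ms (a private LAN). At those rates, a hypothetical 15 million requests take 125 hours versus roughly 4.2 hours.

```python
SECONDS_PER_DAY = 86_400


def sequential_sync_seconds(num_requests: int, rtt_ms: float, server_ms: float = 0.0) -> float:
    """Wall-clock time for `num_requests` strictly sequential requests.

    Each request waits for the previous reply, so the round-trip time is paid
    once per request; `server_ms` is the node's own processing time.
    """
    return num_requests * (rtt_ms + server_ms) / 1000.0


def max_requests_per_day(rtt_ms: float, server_ms: float = 0.0) -> float:
    """Upper bound on sequential requests per day at a given latency."""
    return SECONDS_PER_DAY * 1000.0 / (rtt_ms + server_ms)


if __name__ == "__main__":
    # Hypothetical latencies: remote public node vs. private network
    for rtt in (30.0, 1.0):
        hours = sequential_sync_seconds(15_000_000, rtt) / 3600
        print(f"RTT {rtt} ms: {max_requests_per_day(rtt):,.0f} req/day, "
              f"15M requests take {hours:,.1f} h")
```

Parallel requests raise the ceiling, but only up to whatever throughput the remote node is willing to serve, which is the second limit mentioned above.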

Why 256GB of RAM?

It is possible to run RPC nodes on hardware with lower specs, but unfortunately my needs require a fully specced-out setup.

Reducing memory usage by selectively enabling features

It is possible to run RPC nodes with lower requirements. For one, not every app needs all the plugins: an app like Busy or Dtube doesn't need the market history plugin, for example.
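As an illustration, the sketch below derives trimmed `public-api` and `enable-plugin` lines from the full-node config used in this post, for example dropping `market_history` for an app that never queries market data. The plugin-to-API pairing is inferred from that config and is an assumption; `trimmed_config` is a hypothetical helper, so check the steemd documentation for your version before relying on it.

```python
from typing import Iterable, List

# Plugin and API names taken from the full-node config in this post.
FULL_PLUGINS = ["witness", "account_history", "account_by_key", "tags",
                "follow", "market_history", "raw_block"]
BASE_APIS = ["database_api", "login_api", "network_broadcast_api"]
# Assumed pairing (inferred from the same config): plugin -> API it exposes.
PLUGIN_API = {
    "account_by_key": "account_by_key_api",
    "tags": "tag_api",
    "follow": "follow_api",
    "market_history": "market_history_api",
    "raw_block": "raw_block_api",
}


def trimmed_config(skip: Iterable[str]) -> List[str]:
    """Return public-api / enable-plugin lines with the `skip` plugins removed."""
    skipped = set(skip)
    plugins = [p for p in FULL_PLUGINS if p not in skipped]
    apis = BASE_APIS + [PLUGIN_API[p] for p in plugins if p in PLUGIN_API]
    return [
        "public-api = " + " ".join(apis),
        "enable-plugin = " + " ".join(plugins),
    ]


if __name__ == "__main__":
    # e.g. a video or blogging front end that never queries market data
    print("\n".join(trimmed_config(["market_history"])))
```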

Secondly, it's possible to blacklist certain operations from being indexed by the account history plugin, which can also drastically reduce memory usage.
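Conceptually, a blacklist means that operations of the listed types are never written to the history index, so fewer entries occupy the shared memory file. The sketch below illustrates only that idea on a hypothetical list of `(operation_name, body)` pairs; it is not steemd's plugin code, the pair shape is assumed, and the sample data in any usage is made up.

```python
from typing import Dict, Iterable, List, Set, Tuple

# (operation name, operation body); shape assumed for illustration
Op = Tuple[str, Dict]


def filter_history(ops: Iterable[Op], blacklist: Set[str]) -> List[Op]:
    """Keep only operations whose type is not blacklisted."""
    return [op for op in ops if op[0] not in blacklist]


def retained_fraction(ops: List[Op], blacklist: Set[str]) -> float:
    """Share of entries that would still be indexed after blacklisting."""
    if not ops:
        return 1.0
    return len(filter_history(ops, blacklist)) / len(ops)
```

If a single high-volume operation type (such as `vote`) dominates an account's history, blacklisting it removes most of that account's entries, which is where the memory savings come from.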

The point of SteemData is to process and store all the available information, so these optimizations do not apply.

Using SSD instead

Without high throughput and low latency requirements, it's possible to keep the shared memory file on an SSD. By doing so, a full RPC node could be hosted on a server with as little as 16GB of RAM.

SteemData makes a lot of arbitrary requests, and to stay near real-time in state synchronization, throughput and latency are crucial. This is why I need all of the state mapped into RAM, and the node hosted in the same datacenter as the rest of the SteemData servers. This setup is overkill during normal operations, but very much needed when syncing from scratch.
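The shared memory file is memory-mapped, so whether its pages live in RAM or on an SSD depends on the filesystem of the directory that holds it. The minimal sketch below maps a fixed-size file in a given directory; the helper `open_mapped_file` and the file name `demo_shared.bin` are hypothetical, and steemd does its own mapping internally rather than like this.

```python
import mmap
import os


def open_mapped_file(directory: str, size: int, name: str = "demo_shared.bin") -> mmap.mmap:
    """Create (or resize) `name` inside `directory` and map it into memory.

    If `directory` sits on ramfs/tmpfs (e.g. /dev/shm) the pages stay in RAM;
    on an SSD-backed filesystem the kernel pages them in and out from disk.
    """
    os.makedirs(directory, exist_ok=True)
    path = os.path.join(directory, name)
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o600)
    try:
        os.ftruncate(fd, size)      # set the file length; typically sparse on disk
        return mmap.mmap(fd, size)  # read/write mapping of the whole file
    finally:
        os.close(fd)                # the mapping stays valid after closing the fd
```

The mapping code is identical in both cases; pointing the directory at a RAM-backed mount or at an SSD path is what separates the two setups described above.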

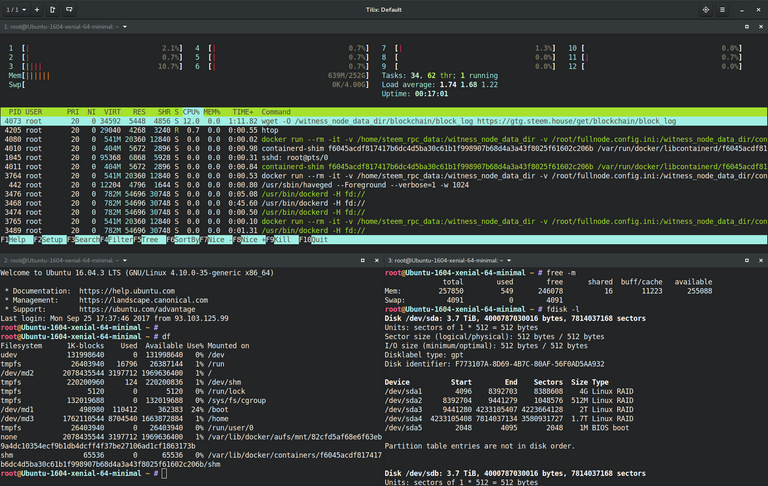

Setup

I've made a custom Docker image based on Steemit's (Dockerfile, run-steemd.sh).

I've assigned 200GB of 'ramdisk' to the shared memory file using ramfs, with the following fstab entry:

    ramfs /dev/shm ramfs defaults,noexec,nosuid,size=210GB 0 0
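To confirm that the shared memory directory really sits on the RAM-backed mount, one can look up which mount contains a given path. The sketch below does a longest-prefix match over text in the fstab / `/proc/mounts` layout (device, mount point, type, options, dump, pass); `fs_type_for` is a hypothetical helper, and it ignores escaped characters such as `\040` in mount points.

```python
import posixpath
from typing import Optional


def fs_type_for(path: str, mounts_text: str) -> Optional[str]:
    """Return the filesystem type of the mount that contains `path`.

    `mounts_text` uses the fstab / /proc/mounts layout, one mount per line:
    <device> <mount point> <type> <options> <dump> <pass>
    """
    path = posixpath.normpath(path)
    best_point, best_type = "", None
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 3 or fields[0].startswith("#"):
            continue  # skip blank lines, comments and malformed entries
        point, fstype = posixpath.normpath(fields[1]), fields[2]
        inside = path == point or path.startswith(point.rstrip("/") + "/")
        # Longest mount point wins; on ties the later line wins, since a later
        # mount on the same point shadows the earlier one.
        if inside and len(point) >= len(best_point):
            best_point, best_type = point, fstype
    return best_type
```

On a Linux host with the entry above mounted, passing the contents of `/proc/mounts` and the path `/dev/shm/steem` should report `ramfs`; anything else means the shared memory file would not be held in RAM.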

I've adopted @gtg's awesome full node config as a base, and tweaked it a bit.

    rpc-endpoint = 0.0.0.0:8090
    p2p-max-connections = 200
    public-api = database_api login_api account_by_key_api network_broadcast_api tag_api follow_api market_history_api raw_block_api
    enable-plugin = witness account_history account_by_key tags follow market_history raw_block
    enable-stale-production = false
    required-participation = false
    shared-file-size = 200G
    shared-file-dir = /shm/steem
    seed-node = 149.56.108.203:2001 # @krnel (CA)
    seed-node = anyx.co:2001 # @anyx (CA)
    seed-node = gtg.steem.house:2001 # @gtg (PL)
    seed-node = seed.jesta.us:2001 # @jesta (US)
    seed-node = 212.117.213.186:2016 # @liondani (SWISS)
    seed-node = seed.riversteem.com:2001 # @riverhead (NL)
    seed-node = seed.steemd.com:34191 # @roadscape (US)
    seed-node = seed.steemnodes.com:2001 # @wackou (NL)
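Note that `seed-node` appears several times; many INI readers either reject duplicate keys or keep only one value. Below is a minimal sketch of a reader for this flat `key = value` format that keeps every value of a repeated key and drops trailing `#` comments, plus a helper that turns `shared-file-size` into bytes. Both are hypothetical helpers, not steemd's own parser, and treating `G` as 1024³ bytes is an assumption.

```python
from typing import Dict, List


def parse_steemd_config(text: str) -> Dict[str, List[str]]:
    """Parse `key = value` lines; repeated keys (e.g. seed-node) keep every value."""
    result: Dict[str, List[str]] = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop trailing comments
        if not line or "=" not in line:
            continue
        key, value = (part.strip() for part in line.split("=", 1))
        result.setdefault(key, []).append(value)
    return result


def size_to_bytes(size: str) -> int:
    """Convert sizes like '200G' or '512M' to bytes (binary multiples assumed)."""
    units = {"K": 1024, "M": 1024 ** 2, "G": 1024 ** 3, "T": 1024 ** 4}
    size = size.strip().upper()
    if size and size[-1] in units:
        return int(size[:-1]) * units[size[-1]]
    return int(size)
```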

Lastly, I run everything in Docker.

    docker run -v /home/steem_rpc_data:/witness_node_data_dir \
        -v /root/fullnode.config.ini:/witness_node_data_dir/config.ini \
        -v /dev/shm:/shm \
        -p 8090:8090 -d \
        furion/steemr
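With three `-v` mounts, it is easy to lose track of where a path inside the container ends up on the host. The sketch below resolves container paths against the volume list from the docker run command above (the longest matching target wins), confirming for example that `shared-file-dir = /shm/steem` lands in the host's RAM-backed `/dev/shm`. `host_path` is a hypothetical helper.

```python
import posixpath
from typing import List, Optional, Tuple

# Volume mappings from the docker run command: (host path, container path)
VOLUMES: List[Tuple[str, str]] = [
    ("/home/steem_rpc_data", "/witness_node_data_dir"),
    ("/root/fullnode.config.ini", "/witness_node_data_dir/config.ini"),
    ("/dev/shm", "/shm"),
]


def host_path(container_path: str,
              volumes: List[Tuple[str, str]] = VOLUMES) -> Optional[str]:
    """Translate a path seen inside the container to the host path, or None if unmapped."""
    container_path = posixpath.normpath(container_path)
    best: Optional[Tuple[str, str]] = None
    for host, target in volumes:
        inside = container_path == target or container_path.startswith(target.rstrip("/") + "/")
        # Prefer the most specific (longest) mount target, like a file bind mount
        # placed inside a directory bind mount.
        if inside and (best is None or len(target) > len(best[1])):
            best = (host, target)
    if best is None:
        return None
    host, target = best
    return host + container_path[len(target):]
```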

Conclusion

This setup is fairly new (in production for less than a day), but the results are already promising. Syncing is more than 100x faster than with remote nodes, and I haven't run into any throughput limitations yet. As long as the node doesn't crash, things should be golden.

Keep up the good work @furion

For those who might think that such a private RPC node doesn't serve the network: of course it does. It reduces the load on public nodes, leaving those resources to people who can't run their own private nodes.

Yup, I've been depending on Steemit's nodes for months, and by moving to my own node, Steemit's workload should be a bit lower.

Furthermore, the whole point of SteemData is to avoid hammering steemd, since querying databases can be many orders of magnitude more efficient.

True. 15,000,000 requests vs. a single query to SteemData.

That makes a difference.

@furion you could take a look at steemd.com, which has been down for the last 7 hours... You guys do an awesome job securing the blockchain; hopefully your initiative encourages others and we get an overall better experience... Also have a look at steemdb and steemd, which can differ or lag behind (especially steemdb). Perhaps your initiative can help improve the services offered by others.

SteemData is open and free to use, but unfortunately it's not a high-availability (HA) setup.

I do have ~200 active connections on MongoDB alone, from various users and apps.

The database has been rock solid so far, and there is a fairly easy scalability path:

The weak points in SteemData right now are:

But still, impressive results, as the system has been stable while supporting so many applications. I particularly like SteemData, as it helps me navigate my own feed in case I miss anything and helps me monitor active users... Perhaps we can get a more dedicated team on the nodes, as they go down sometimes, but this outage has been lengthy; I'm not too sure of the overall effect it has on the platform... Apart from that, fantastic work...

Are you making SteemData available as a public RPC endpoint also, because it seems from the article you plan to keep it private, at least for now (Why Private node?). If you do plan to make it public at some point, I'd love to add it to my list of "goto servers" as well. Regardless, it's another great addition that you bring to the community.

On a separate note regarding @gtg's endpoint, you left SSL as a question mark. However, I can attest that gtg.steem.house requires SSL / wss, despite the use of an alternate port (8090).

We can't keep adding RAM to servers for higher performance. At the current stage you are running a 256GB node (the kind of machine ORACLE uses for its DB and JS apps), and we are serving fewer than 400k users. Does that mean that with 1 million users, Steem will need 768GB of RAM?

I hope SteemData takes load off steemd in the proper way; otherwise we are facing a strong facepalm in the near future.

Great

I really like the story you have shared.

I hope you like what I am saying; glad to be able to see it.

lol which language is it ? :D hahaha

Holy s.it! 256GB of RAM, that's quite a big server you're running there. Congrats.

One could get away with a lot less, but I want it to be as fast as possible.

RAM on steroids :D

Are you still working on Viewly @furion?

Yes, I am.

I've recently been taking some time to fix/improve my steem services and witness infrastructure.

I've been fortunate enough to have a small team helping me with Viewly, so things are still progressing.

Very pleased to hear all of that. ^

Well done and congratulations! 256GB of RAM is a very fast one!

Can you please let me know if there are any updates on Viewly? I signed up for the newsletter but haven't received any news so far, and I didn't notice a way on the site to create an account and start adding videos!

Thanks a lot and have a great day!😉

Maybe it went into spam. Feel free to join us on Telegram.

Maybe it went there... to be honest, I never look at the spam folder. I've been using Telegram for a while, so can you please let me know how I can join you there?

Thanks a lot!

Good stuff man. Looks like you'll be able to use the rewards from this post alone to run the RPC server for at least a month or two for no cost. Go Steem

Great idea. I would like to see more, hopefully with a dumbed-down explanation of what some of the components are. The technical terms make it hard to follow, but from what I understood, I'm impressed!

thanks 4 sharing

Interesting setup. I'd like to set up something similar very soon. Good work :)

I have a 12-core Xeon workstation with 38GB of ECC RAM (a Dell T5500). Can I run a node from Ireland?

Yes

Damn geekyou! I will respond to that... I just need my new 1TB RAM

Threadripper... X000$! Mostly in memory! Hehe!

Looking nice!

Great initiative @furion! #resteemed

Great. keep up the good work!

Thank you for the lovely post, wishing you all the best :) The information is sure to come in handy, but more than that, it gives me great confidence to know your hard work is being put to good use.

That's where all the DDR4 memory is going >:( My rig is still stuck at 8GB because another stick is $50 :'(

Upvoted and resteemed, you are doing many cool and good things !

I am a quality control chemist, but I love programming. I've been in bed with computers since childhood. It's just awful that I turned away from my dreams, but now I'm coming back to them. Some of these great projects stir my innermost zeal to pursue these God-given talents. I've already made inquiries about IT education, and hopefully I will start in the next few months. Looking forward to learning from ya. And hey @furion, thank ya for sharing this great INSPIRATION!

I almost forgot. I'm following you from now.

Powerful hot machine lol! 256GB and 6 cores? :O Amazing. I wonder how it would do at Bitcoin mining? CPU mining? :D lol

256GB of RAM, that's a fairly large server you're running. Congratulations. 👏👏

You are always the best. I really like your posts.

I congratulate you on sharing so nice stuff.

I UPVOTED and FOLLOWING you.

I'm sorry, I don't understand the technical part of the post you wrote. Keep up the good work. Cheers.

Thanks for keeping the engine room running. It's a witness vote well spent.

what a setup there!

well well

short story for a particular moment is really interesting to read

overall

love this post because of unique content and photographs and of course love between them

:)

upvoted

@furion very informative details on SteemData, with the nodes showing promising performance on a private server.

The hardware and the code are quite impressive.

love your work buddy

You have my respect, and I upvoted your very great article.

I don't think you have enough memory =op

Oh wow , what a great article !!!

256GB RAM? Are you serious? @furion

That's great news - thanks! I've been checking the sync status of the Hive DB each day, hopefully it might finish sooner because of this.

Probably today :)

Excellent!

This one is on Roids.

So steemy, love it when you talk nerdy to me.

Congratulations @furion! You have completed some achievement on Steemit and have been rewarded with new badge(s) :

Click on any badge to view your own Board of Honor on SteemitBoard.

For more information about SteemitBoard, click here

If you no longer want to receive notifications, reply to this comment with the word STOP

Nice post

256GB of RAM. Hell, that's a huge one... Thanks for sharing the information... please keep the updates coming 😀

Furion, please give us a 101 class on this. I don't even know what you meant by RPC, nodes and all those technical things.

informative upvote and followed :)

Wow... now I know that you are the owner of steemd. This site really helps me a lot... I'll vote for you as a witness...

I hope I can read more articles from you about how to set up a witness server, as I really want to build one... thanks!!!

Sorry for my bad English...

Oh, that's informative. I am going to resteem this piece. Thanks.

Congratulations, your post received one of the top 10 most powerful upvotes in the last 12 hours. You received an upvote from @blocktrades valued at 102.54 SBD, based on the pending payout at the time the data was extracted.

If you do not wish to receive these messages in future, reply with the word "stop".

Congratulations @furion, this post is the second most rewarded post (based on pending payouts) in the last 12 hours written by a Hero account holder (accounts that hold between 10 and 100 Mega Vests). The total number of posts by Hero account holders during this period was 235 and the total pending payments to posts in this category was $3827.66. To see the full list of highest paid posts across all accounts categories, click here.

If you do not wish to receive these messages in future, please reply stop to this comment.

Thanks for sharing @furion .

256gb of Ram... Wow!! Keep it up, great job

Nice job.

Great work @furion. Hope you may do greater things in the future

Really interesting stuff :-)

Looks like your setup is a BEAST ..

Good work