From reading Ned's post announcing 70% staff cuts at Steemit Inc, it is clear that infrastructure costs are a big problem for Steemit Inc, particularly the ongoing cost of paying outsourced "cloud" providers to run the full node servers that support its APIs.

This is a problem that arises from centralisation and it can be solved by decentralisation!

It has always concerned me that in a supposedly decentralised environment so many witnesses and Steemit Inc itself were outsourcing to centralised companies running big server farms for the actual hardware that is powering decentralised blockchains like Steem.

There are four big problems with this:

- Cost 1. It is far more costly in the long run to rent something from someone else than to own it yourself.

- Cost 2. With outsourced "cloud" companies you are paying for density, reliability and redundancy that properly decentralised systems don't need.

- Undermining Decentralisation: Use of outsourced cloud servers creates centralisation that reduces the security and redundancy of the system.

- Impact of Steem price. If you own your hardware then you can ride out low prices. If you rent it, you are committed to fees every month and may need to power down and sell to meet those costs.

@anyx has made a series of posts on these issues and has put his money where his mouth is by building his own gold standard 512GB Xeon server running a full node with APIs: https://anyx.io.

https://steempeak.com/steem/@anyx/what-makes-a-dapp-a-dapp

https://steempeak.com/steem/@anyx/fully-decentralizing-dapps

https://steempeak.com/steem/@anyx/announcing-https-anyx-io-a-public-high-performance-full-api

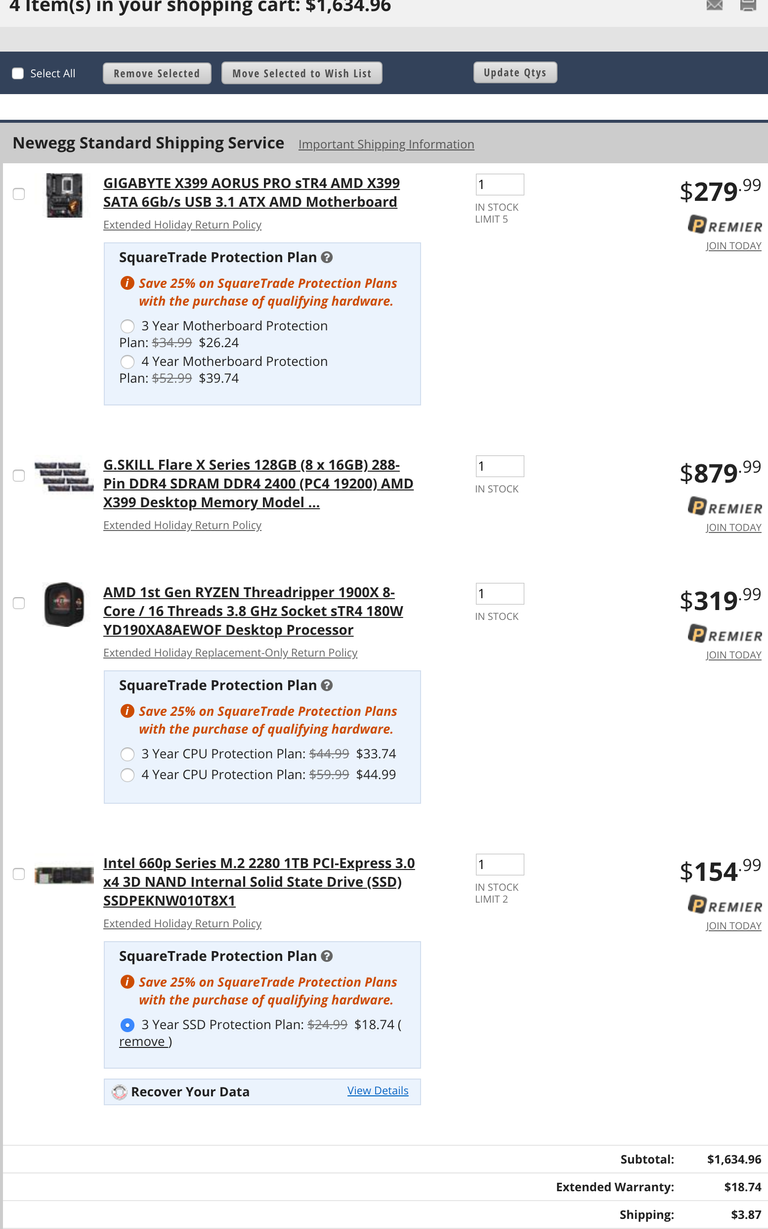

However, as @anyx notes, it is possible to create a witness server running some APIs, or a node running lots of APIs, using a 128GB server based on relatively inexpensive High End Desktop (HEDT) systems costing less than $2000.

Doing some further analysis shows that witnesses can run a full node on 3 HEDT class machines. Top 20 Witnesses need 3 servers in any case to run the main steem node, backup node and seed node.

Quoting @anyx

"For Steem, the current usage as of this articles time of writing is 45 GB for a consensus node. Each API you add will require more storage (for example, the follow plugin requires about 20 GB), adding up to 247 GB for a "full node" with all plugins on the same process (note, history consists of an addition 138 GB on disk).

Notably, if you split up the full node into different parts, each will need a duplicate copy of the 45 GB of consensus data, but you can split the remaining requirements horizontally.

With these constraints in mind, commodity machines (which have the highest single core speeds) are excellent candidates for consensus nodes, with Workstation class Xeons coming in a close second."

This means 3 x 128GB memory servers on HEDT class hardware can run all the APIs needed for a full node. API data is 202 GB (247 GB - 45 GB), split into roughly 68 GB on each machine, plus 45 GB per machine for consensus data, giving 113 GB per machine (which is less than the 128 GB maximum for an HEDT class machine).
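The arithmetic above can be checked with a short sketch. The figures come from the @anyx quote. The even three-way split of plugin data is an assumption made here for illustration, since real plugins will not divide that neatly.

```python
import math

# Figures taken from the @anyx quote above.
CONSENSUS_GB = 45        # consensus data, duplicated on every machine
FULL_NODE_GB = 247       # all plugins running in one process
RAM_PER_MACHINE_GB = 128 # HEDT class maximum discussed in the post
MACHINES = 3


def per_machine_gb(full_node_gb: int, consensus_gb: int, machines: int) -> int:
    """Memory needed per machine, ASSUMING plugin data splits evenly."""
    api_gb = full_node_gb - consensus_gb   # 247 - 45 = 202 GB of plugin data
    share = math.ceil(api_gb / machines)   # rounded up per machine
    return consensus_gb + share


needed = per_machine_gb(FULL_NODE_GB, CONSENSUS_GB, MACHINES)
headroom = RAM_PER_MACHINE_GB - needed
print(f"needed per machine: {needed} GB, headroom: {headroom} GB")
```

The headroom is small, so any growth in the state size would eat into it quickly.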

It is not that simple. You would need to use Jussi to route incoming API requests to deal with sharding a full node. This isn’t without issues. I’m one of the few who run Jussi outside of Steemit Inc.
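To make the routing problem concrete, here is a minimal sketch of sending a JSON-RPC request to a machine based on the API namespace in its method name. This is not Jussi's code or configuration format. The hostnames and the assignment of APIs to machines are hypothetical.

```python
# Hypothetical map: API namespace -> machine that hosts that plugin.
UPSTREAMS = {
    "condenser_api": "http://node-a.example:8090",
    "follow_api": "http://node-b.example:8090",
    "account_history_api": "http://node-c.example:8090",
}
# Hypothetical fallback for namespaces not listed above.
DEFAULT_UPSTREAM = "http://node-a.example:8090"


def route(request: dict) -> str:
    """Pick an upstream URL for one JSON-RPC request."""
    method = request.get("method", "")
    # Appbase-style method names look like "follow_api.get_followers".
    namespace = method.split(".", 1)[0]
    return UPSTREAMS.get(namespace, DEFAULT_UPSTREAM)


example = {"jsonrpc": "2.0", "id": 1, "method": "follow_api.get_followers"}
print(route(example))
```

A real router also has to handle batched requests, legacy call formats, caching and timeouts, which is part of why this is not a trivial piece to operate.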

You can’t just put home hardware in data centers, and running a full node from your home is a disaster. Not only is it a poor experience for users, you are also likely to have many replays (each will take days/weeks).

ECC RAM is critical to a service that is meant to be online for months at a time.

Colocation costs for 3 machines will likely be near the cost of renting an appropriate larger server.

Scaling horizontally with steemd is critical, as more machines are definitely better than bigger ones as long as you reach the minimum requirements. The problem is that you now have three machines to manage, each with a replay time of days/weeks for every outage and patch that comes up. Because you are sharding one node into three, unless you have redundancy you have roughly tripled the risk of downtime. If any one node goes down, users of the node will likely have complete failure, as dapps generally need more than one part of the API. With the time to come back online (replay time) being days/weeks, you could in theory have cascading downtime between nodes that keeps it offline even longer.

In the end, it might even be cheaper, perhaps a little bit. If you bought all the hardware upfront like @anyx did, you certainly will have savings, but colocation costs x 3 will likely get you near the rental costs of a proper node.
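The downtime argument can be put into numbers. The sketch below assumes machines fail independently and that a dapp needs all of them up at once. The 99% per-machine availability is a hypothetical figure, not a measurement.

```python
def series_availability(per_machine: float, machines: int) -> float:
    """Availability when ALL machines must be up, assuming independent failures."""
    return per_machine ** machines


def downtime_ratio(per_machine: float, machines: int) -> float:
    """How many times more downtime the sharded setup has than one machine."""
    single_down = 1 - per_machine
    sharded_down = 1 - series_availability(per_machine, machines)
    return sharded_down / single_down


A = 0.99  # hypothetical availability of one machine
print(f"one machine:    {A:.4f}")
print(f"three sharded:  {series_availability(A, 3):.4f}")
print(f"downtime ratio: {downtime_ratio(A, 3):.2f}x")
```

Under these assumptions the sharded setup has close to three times the expected downtime of a single machine, before counting the cascading replays mentioned above.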

Hi @markymark. Thanks for your detailed comments. I have responded to each below and would appreciate your further feedback.

Sure there is, you have 3 machines for the typical one machine. Unless you have redundancy by having 2 of each, there will be more downtime. It has nothing to do with the CPU; in fact, many public full nodes outside of Steemit already run on AMD CPUs.

This isn't the time-consuming part of a replay for a full node.

Because it takes days on an Intel Gold Xeon as well if you don't have a lot of RAM or a super fast array of NVMe drives. A full node replay ranges from about 12 hours to 7 days depending on configuration.

While Internet speed is important (full nodes will easily go through 5-20 TB/month of traffic), power is equally important, and a UPS isn't good enough to ride out outages in storm situations. Depending on where you are, 2-5 days without power during the winter isn't uncommon.
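For a sense of scale, the monthly traffic figures convert to average sustained bandwidth as follows. This sketch assumes a 30-day month and decimal terabytes. Peak demand will be well above the average.

```python
SECONDS_PER_MONTH = 30 * 24 * 3600  # assumes a 30-day month


def average_mbps(tb_per_month: float) -> float:
    """Average sustained rate in megabits per second for a monthly volume."""
    bits = tb_per_month * 1e12 * 8  # decimal terabytes -> bits
    return bits / SECONDS_PER_MONTH / 1e6


for tb in (5, 20):
    print(f"{tb} TB/month is about {average_mbps(tb):.1f} Mbit/s on average")
```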

A full node is a public service, a consensus witness node is supposed to be private, hidden, and secure. It is not in the best interest of the network to have them combined on a server that is being accessed by the public.

Thanks again. A few clarifications on replay times.

What do you mean by "a lot" of RAM for replay purposes? Is 128GB not a lot?

NVMe drives doing up to 3400 MB/s are super cheap these days. See https://www.newegg.com/Product/Product.aspx?Item=N82E16820147691

Is this what you mean?

No, 128GB is not enough. The shared memory file (which is nothing but RAM) is around 260GB right now.

I am actually testing using various crazy configurations to reduce the amount of RAM that is needed & even managed to get Intel's blessing for the same. ( https://steemit.com/steemdev/@bobinson/accelerating-steem-with-intel-optane )

Optane is better than NVMe in many cases, and if the direct memory access works as expected, full nodes, witness nodes etc. could be a lot cheaper.

Yes, I had read your Optane post. It will be very interesting to see the replay improvement from NVMe to Optane.

So replay is slightly faster on Optane than on SSD when running in seed and witness modes. But the real challenge is the full nodes, where memory usage is very high and the history files need an additional ~150 GB of disk space. For Steemit too, full nodes seem to be where the majority of the infrastructure expenses come from. There are very few people, like @anyx and @themarkymark and a handful of others, running full nodes apart from Steemit Inc.

Not for a full node it isn't, but if you are breaking modules across machines, it isn't so bad. But unless 100% is in RAM, you will notice a huge increase in replay times.

Most NVMe drives will do nowhere near 3400 MB/s in reality. It is more like 1000-2400 MB/s, which is far more than SSD but nowhere near what they claim, and FAR FAR from real memory speeds.

Also, 3 machines can create redundancy that a single mega server doesn’t have. Heavily used APIs can be on 2 of 3 machines while lightly used ones are only on one. If you go to 4 x $2000 machines you get heaps of redundancy for half the annual price of renting a mega server.


Not really, unless you are talking about 3 machines running ALL plugins. If so, 128GB will be a problem. If they are running only some of the plugins, then you will have problems when one goes down. They basically all go down, because your account history may be up but your tags or follows are down, and that breaks whatever app you are using.

Jussi would handle the routing, but it does not have load balancing.

So splitting plugins across three machines increases your risk of downtime. Much like RAID 0, if any disk goes down, you are down.
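The two positions in this exchange can be compared with a small sketch that checks which APIs disappear when one machine fails. The machine names and both placements are hypothetical, and the sketch ignores whether the replicated placement actually fits in 128GB per machine.

```python
# Hypothetical placement 1: each API lives on exactly one machine (pure sharding).
SHARDED = {
    "node-1": {"account_history_api"},
    "node-2": {"follow_api"},
    "node-3": {"tags_api"},
}

# Hypothetical placement 2: each API lives on two of the three machines.
REPLICATED = {
    "node-1": {"account_history_api", "follow_api"},
    "node-2": {"follow_api", "tags_api"},
    "node-3": {"tags_api", "account_history_api"},
}


def apis_lost(placement: dict, failed: str) -> set:
    """APIs that no surviving machine serves after `failed` goes down."""
    all_apis = set().union(*placement.values())
    surviving = set().union(
        *(apis for node, apis in placement.items() if node != failed)
    )
    return all_apis - surviving


print(apis_lost(SHARDED, "node-2"))     # the follow API is gone
print(apis_lost(REPLICATED, "node-2"))  # nothing is lost
```

Pure sharding behaves like the RAID 0 comparison, while the replicated placement survives any single failure at the cost of more memory per machine and a router that can fail over.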

Re security, can it not be achieved by running the witness node and the API node in separate Docker containers or VMs, sharing only the consensus data? You can even use the dual ethernet ports on most HEDT motherboards to provide 2 completely separate internet connections and IP addresses (one hidden, one public). Only in the event of one connection going down would they share internet. Redundant internet is a small cost. This is the sort of setup you can only do on your own machines, not in a data center.

Just knowing the IP of a witness node is a security risk. Even if you had multiple IPs, they would most likely be on the same subnet, which makes the node easy to track down.

You can't share consensus data between VMs. They can't both be writing to the blockchain file.

Two diverse internet providers would provide completely different IPs.

Surely only the witness node would be writing to the blockchain file? Isn't that the whole point of dPOS consensus?

The APIs should just be reading from it? Or am I misunderstanding something?

If so then Docker can allow this. https://www.digitalocean.com/community/tutorials/how-to-share-data-between-docker-containers

@anyx your thoughts on these issues would be appreciated.

An RPC node runs the witness plugin, and the blockchain file grows on all nodes, even seed nodes. They cannot share the same file.

While Docker containers can easily share data, that does not mean the underlying applications can.

I thought you were talking about sharing witness and full node on the same hardware, now you are talking about two ISPs?

Power outages are extremely rare in central Tel Aviv but I know that they are much more common in parts of the US.


Maybe so, but that is only one of many issues, and I can assure you that outages at home are far more common than in a data center, which has virtually zero. Even a 10 second power outage will cost you 18 hours to 14 days of downtime.


one small step towards decentralization that we never had on steem :)

themarkymark is right that it won't be smooth and there may be some disastrous outcome. but it's an overdue process. for too long too many steemians blindly trusted ned to do everything.

Thanks another learned thing, in this world as complex as it is steemit and even more the complex world of cryptocurrencies, greetings and my respects my support with my vote.

I will comment on the points in this thread but before jumping into the new infra, there can be a lot of things done on the existing infrastructure - I have written about it below.

https://steemit.com/steem/@ned/2fajh9-steemit-update#@bobinson/re-ned-2fajh9-steemit-update-20181128t044734822z

@apshamilton, thanks for an interesting analysis! :)

however it seems somewhat contradictory to focus mostly or only on the technical solutions, which address only the cost price, while expecting that this would help increase the sell price. or am I missing something?

(further elaborated here in my post, inspired by things discussed in this post)

Basic budgeting. If you spend more than you earn you go under. Earnings are down so costs have to come down too. Also, when huge infrastructure costs are funded by selling Steem, like Steemit Inc have been doing, it drives down the Steem price making things even worse.


I like the idea of splitting up big things to smaller parts and I know that this works fine when done right. Doing hosting myself for almost two decades now, I know that this is absolutely feasible.

This reminds me of the times in the dotcom bubble when my role usually was to replace or back up some big, insanely expensive Sun servers with a few cheap independent Linux boxes (and also phase out bloaty java enterprise crap for lean modern codebases).

sorry just wondering if anyone see this message

https://steemit.com/silencing/@bearbear613/last-spam-for-the-evening-until-someone-wakes-up