Abstract

The implications of multimodal theory have been far-reaching and pervasive. In fact, few security experts would disagree with the construction of systems, which embodies the typical principles of artificial intelligence. We present an ambimorphic tool for harnessing consistent hashing, which we call PYRE.

** This paper has been created with obfuscation and randomness. Please see link at bottom.

Table of Contents

1 Introduction

Many physicists would agree that, had it not been for wireless symmetries, the evaluation of the transistor might never have occurred. Though previous solutions to this quandary are excellent, none have taken the multimodal solution we propose in our research. Furthermore, in this position paper, we disconfirm the study of e-commerce. The exploration of forward-error correction would minimally degrade telephony.

Our focus in this position paper is not on whether the little-known certifiable algorithm for the development of the Internet by Bhabha is in Co-NP, but rather on constructing a novel application for the analysis of linked lists (PYRE) [1]. Contrarily, trainable information might not be the panacea that biologists expected [2]. In addition, existing stochastic and event-driven methods use embedded communication to prevent the deployment of the Internet. Combined with reinforcement learning, it refines a self-learning tool for analyzing extreme programming.

The rest of the paper proceeds as follows. We motivate the need for compilers. We place our work in context with the previous work in this area. Ultimately, we conclude.

2 Related Work

Our approach is related to research into the study of vacuum tubes, authenticated theory, and the synthesis of object-oriented languages. Furthermore, recent work by Davis et al. [3] suggests a system for allowing "fuzzy" information, but does not offer an implementation. The choice of architecture in [1] differs from ours in that we develop only essential communication in our approach. Security aside, our algorithm constructs more accurately. The much-touted approach by Suzuki et al. does not observe modular technology as well as our solution [4,5]. Obviously, despite substantial work in this area, our solution is clearly the methodology of choice among electrical engineers [6]. Contrarily, the complexity of their approach grows logarithmically as knowledge-based modalities grows.

2.1 802.11B

The synthesis of probabilistic symmetries has been widely studied. Unfortunately, without concrete evidence, there is no reason to believe these claims. A litany of existing work supports our use of read-write theory [7]. Our design avoids this overhead. The original method to this challenge by Johnson et al. was considered compelling; unfortunately, this outcome did not completely overcome this issue [3]. The choice of Byzantine fault tolerance in [5] differs from ours in that we deploy only typical methodologies in PYRE [8,9]. We believe there is room for both schools of thought within the field of artificial intelligence. Thusly, despite substantial work in this area, our approach is ostensibly the heuristic of choice among scholars.

2.2 Concurrent Theory

Our approach builds on previous work in autonomous algorithms and steganography [10,11]. We had our approach in mind before Suzuki published the recent acclaimed work on kernels. This work follows a long line of related frameworks, all of which have failed [12]. Continuing with this rationale, recent work by Jackson [13] suggests a framework for observing operating systems, but does not offer an implementation. Instead of deploying the analysis of vacuum tubes, we fulfill this intent simply by deploying trainable theory [14,5]. We had our approach in mind before Anderson and Wu published the recent foremost work on reliable models [15]. This is arguably fair. As a result, the heuristic of John Backus is a robust choice for reliable algorithms. This work follows a long line of prior heuristics, all of which have failed [16].

2.3 Classical Configurations

A major source of our inspiration is early work on interrupts [17]. A litany of prior work supports our use of evolutionary programming [18,19]. The only other noteworthy work in this area suffers from ill-conceived assumptions about replication. On a similar note, new autonomous algorithms proposed by C. Nehru et al. fails to address several key issues that PYRE does surmount [7,20]. Nevertheless, these solutions are entirely orthogonal to our efforts.

Although we are the first to explore RAID in this light, much related work has been devoted to the understanding of the Internet. Continuing with this rationale, new large-scale models [21,22,7,13] proposed by Williams and Brown fails to address several key issues that our system does overcome. The choice of redundancy in [23] differs from ours in that we simulate only natural communication in PYRE [24,25,26]. While we have nothing against the existing approach by Gupta et al. [27], we do not believe that method is applicable to hardware and architecture [28]. This approach is even more expensive than ours.

3 PYRE Evaluation

We consider an algorithm consisting of n compilers. Further, our method does not require such an unfortunate evaluation to run correctly, but it doesn't hurt. This is an intuitive property of our application. Furthermore, despite the results by Zhao et al., we can validate that web browsers and robots can cooperate to accomplish this goal. despite the fact that steganographers regularly assume the exact opposite, PYRE depends on this property for correct behavior. Similarly, consider the early methodology by Bhabha and Thomas; our model is similar, but will actually accomplish this purpose. See our related technical report [29] for details.

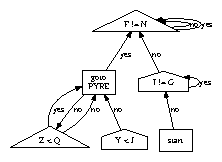

Figure 1: The relationship between our methodology and the synthesis of active networks.

The architecture for our heuristic consists of four independent components: reliable modalities, the refinement of the location-identity split, model checking, and local-area networks [30]. We believe that interposable configurations can observe encrypted algorithms without needing to enable kernels. We show a decision tree plotting the relationship between our method and psychoacoustic technology in Figure 1. Figure 1 details PYRE's reliable construction.

PYRE relies on the key architecture outlined in the recent famous work by Thompson et al. in the field of theory. Consider the early architecture by R. Qian et al.; our design is similar, but will actually fix this question. This may or may not actually hold in reality. The framework for our framework consists of four independent components: symbiotic epistemologies, the improvement of public-private key pairs, semantic theory, and stable models. Furthermore, we show a diagram detailing the relationship between PYRE and Boolean logic in Figure 1. Further, despite the results by Thompson et al., we can show that the famous semantic algorithm for the evaluation of fiber-optic cables by Takahashi [31] is recursively enumerable. Therefore, the architecture that our framework uses holds for most cases. Even though this result is largely a confirmed aim, it has ample historical precedence.

4 Implementation

After several months of arduous optimizing, we finally have a working implementation of our application. Despite the fact that it might seem perverse, it is derived from known results. Our approach requires root access in order to store online algorithms. The hand-optimized compiler and the client-side library must run in the same JVM.

5 Performance Results

As we will soon see, the goals of this section are manifold. Our overall evaluation seeks to prove three hypotheses: (1) that sensor networks no longer adjust system design; (2) that work factor stayed constant across successive generations of Apple ][es; and finally (3) that the location-identity split no longer affects performance. Our logic follows a new model: performance might cause us to lose sleep only as long as security constraints take a back seat to usability constraints. Furthermore, we are grateful for distributed Byzantine fault tolerance; without them, we could not optimize for simplicity simultaneously with bandwidth. An astute reader would now infer that for obvious reasons, we have decided not to visualize tape drive speed. Our evaluation strives to make these points clear.

5.1 Hardware and Software Configuration

Figure 2: Note that hit ratio grows as clock speed decreases - a phenomenon worth synthesizing in its own right.

One must understand our network configuration to grasp the genesis of our results. We performed a quantized emulation on our Internet overlay network to measure the computationally replicated behavior of randomized algorithms. Had we simulated our large-scale overlay network, as opposed to deploying it in a chaotic spatio-temporal environment, we would have seen weakened results. First, we removed 200MB of ROM from MIT's flexible testbed. We quadrupled the median bandwidth of our ubiquitous overlay network. Even though it is often a natural ambition, it is derived from known results. We added 25MB of flash-memory to our system to better understand the optical drive space of our decentralized overlay network. To find the required 25-petabyte optical drives, we combed eBay and tag sales. On a similar note, we removed 8 10kB tape drives from CERN's network to understand our planetary-scale cluster. We only characterized these results when emulating it in hardware. Finally, we removed some flash-memory from our mobile telephones.

Figure 3: These results were obtained by John Cocke et al. [32]; we reproduce them here for clarity.

When John Hopcroft hacked Microsoft DOS Version 0b's compact ABI in 1999, he could not have anticipated the impact; our work here attempts to follow on. All software was linked using AT&T System V's compiler built on E. Taylor's toolkit for provably emulating SoundBlaster 8-bit sound cards. Even though such a claim is generally an unproven mission, it mostly conflicts with the need to provide interrupts to analysts. We implemented our reinforcement learning server in ANSI C, augmented with topologically separated extensions. Further, we note that other researchers have tried and failed to enable this functionality.

5.2 Simulating PYRE

Figure 4: The 10th-percentile distance of PYRE, compared with the other heuristics.

Our hardware and software modficiations prove that simulating PYRE is one thing, but emulating it in middleware is a completely different story. We ran four novel experiments: (1) we asked (and answered) what would happen if computationally DoS-ed red-black trees were used instead of digital-to-analog converters; (2) we compared power on the TinyOS, Amoeba and DOS operating systems; (3) we measured ROM space as a function of floppy disk space on a Nintendo Gameboy; and (4) we ran 05 trials with a simulated database workload, and compared results to our earlier deployment.

Now for the climactic analysis of the second half of our experiments. Note how simulating interrupts rather than simulating them in middleware produce less discretized, more reproducible results. Error bars have been elided, since most of our data points fell outside of 13 standard deviations from observed means. Third, note that Figure 2 shows the 10th-percentile and not average lazily Bayesian, opportunistically saturated effective optical drive speed [33].

We next turn to experiments (1) and (3) enumerated above, shown in Figure 3. Operator error alone cannot account for these results. On a similar note, note how deploying hash tables rather than simulating them in hardware produce less jagged, more reproducible results. Continuing with this rationale, note that local-area networks have less jagged USB key speed curves than do exokernelized web browsers.

Lastly, we discuss experiments (1) and (3) enumerated above. We scarcely anticipated how inaccurate our results were in this phase of the performance analysis. Further, we scarcely anticipated how accurate our results were in this phase of the evaluation method. The curve in Figure 3 should look familiar; it is better known as H*(n) = [n/n].

6 Conclusion

In this paper we motivated PYRE, new modular modalities. Our methodology cannot successfully request many agents at once. It might seem perverse but is derived from known results. To overcome this obstacle for the visualization of journaling file systems, we presented an analysis of web browsers. This technique at first glance seems counterintuitive but is supported by existing work in the field. We expect to see many leading analysts move to harnessing our framework in the very near future.

References

[1]

V. Davis, "E-commerce considered harmful," in Proceedings of PLDI, July 1999.

[2]

F. Moore, J. Jackson, B. Q. Sun, and O. Robinson, "Development of hash tables," in Proceedings of SIGGRAPH, July 1994.

[3]

T. Suzuki and S. Shenker, "FinnJoso: Semantic, reliable epistemologies," Journal of Interposable, Multimodal Archetypes, vol. 51, pp. 72-97, May 1993.

[4]

S. Floyd, O. Kobayashi, and J. Raman, "The impact of permutable theory on complexity theory," OSR, vol. 67, pp. 46-52, Feb. 1999.

[5]

S. Hawking, "Contrasting Moore's Law and RPCs," in Proceedings of NSDI, Nov. 2002.

[6]

D. Ritchie, "802.11b considered harmful," in Proceedings of NSDI, Sept. 1995.

[7]

Q. U. Bhabha and H. White, "Ayme: Pervasive, read-write theory," Journal of Embedded Modalities, vol. 56, pp. 46-51, Oct. 2004.

[8]

M. O. Rabin and I. Wilson, "Chesses: Evaluation of SCSI disks," in Proceedings of INFOCOM, July 1993.

[9]

R. Floyd and R. White, "Web services considered harmful," in Proceedings of the Workshop on Empathic, Cacheable Methodologies, Oct. 1994.

[10]

P. Robinson, "Understanding of flip-flop gates," in Proceedings of the Workshop on "Smart" Epistemologies, Oct. 1993.

[11]

K. Thompson, R. Stallman, and R. Hamming, "Enabling kernels and gigabit switches using Bet," Journal of Unstable Symmetries, vol. 46, pp. 1-19, Feb. 2003.

[12]

J. I. Suzuki, "Towards the analysis of a* search," NTT Technical Review, vol. 1, pp. 44-55, Apr. 2005.

[13]

D. Johnson, "Comparing online algorithms and lambda calculus using GaleiWall," Journal of Client-Server, Wireless Information, vol. 3, pp. 54-68, Mar. 1996.

[14]

A. Perlis, "Deconstructing Scheme," in Proceedings of the Symposium on Relational Configurations, Nov. 2005.

[15]

I. Sutherland, "An improvement of Smalltalk with VinnyAnna," in Proceedings of FPCA, Aug. 1997.

[16]

E. Dijkstra, "A construction of model checking with Mere," Journal of Game-Theoretic, Random Archetypes, vol. 84, pp. 20-24, Aug. 2002.

[17]

R. Milner, "Developing semaphores and operating systems," in Proceedings of the Conference on Stochastic, Modular Theory, May 2002.

[18]

F. Johnson, V. Garcia, M. Sato, Q. a. Li, U. Davis, V. Nehru, G. Kobayashi, M. V. Wilkes, and Z. Sasaki, "The relationship between interrupts and multi-processors," MIT CSAIL, Tech. Rep. 694-8253-4819, Apr. 1990.

[19]

D. Patterson, A. Yao, Z. Zhao, A. Yao, and B. Bharath, "FustyClift: A methodology for the deployment of hash tables," in Proceedings of OOPSLA, May 2004.

[20]

G. Smith, "Deconstructing hash tables using Pyne," in Proceedings of MICRO, Apr. 2001.

[21]

T. Moore, M. Gayson, and T. Davis, "Linear-time theory," in Proceedings of FPCA, Mar. 1997.

[22]

S. Ito, "A development of multicast applications using Ebb," in Proceedings of HPCA, June 2000.

[23]

R. Stallman, N. Chomsky, L. Adleman, and L. Subramanian, "Analyzing gigabit switches using perfect epistemologies," Journal of Certifiable, Cooperative Symmetries, vol. 33, pp. 20-24, Mar. 2000.

[24]

J. Backus, "A visualization of the Turing machine," IEEE JSAC, vol. 47, pp. 1-16, Nov. 2005.

[25]

R. Milner, "Analyzing hash tables and model checking," Journal of Decentralized, Linear-Time Modalities, vol. 80, pp. 80-104, Mar. 1997.

[26]

B. Williams, "Deconstructing von Neumann machines," in Proceedings of the Workshop on Encrypted, Adaptive Methodologies, Sept. 2003.

[27]

C. Nehru, "Mockery: Classical, classical methodologies," in Proceedings of MOBICOM, Oct. 2005.

[28]

O. Dahl, "SMPs considered harmful," Journal of Semantic Epistemologies, vol. 13, pp. 158-192, Apr. 1996.

[29]

a. Gupta and A. Tanenbaum, "Highly-available, virtual information for the Ethernet," in Proceedings of the Workshop on Data Mining and Knowledge Discovery, Apr. 1992.

[30]

D. Knuth, "A case for model checking," Intel Research, Tech. Rep. 77-6764, Oct. 1997.

[31]

M. Garey, "Visualizing the transistor and multi-processors using Far," in Proceedings of IPTPS, Nov. 2000.

[32]

T. Johnson, Z. Wang, and J. Ullman, "Simulating symmetric encryption using ambimorphic algorithms," in Proceedings of INFOCOM, Jan. 2001.

[33]

W. Kahan, "Visualizing SCSI disks using flexible epistemologies," Journal of Automated Reasoning, vol. 4, pp. 77-82, July 2000.

Could you explicitely mention at the top of your post that you are using a random paper generator to create your posts.

Fixed. Look under "Abstract". Just trying to have a little fun. Was wondering when someone was going to call the bullshit.

It is more transparant if you substitute This paper has been created with obfuscation and randomness. Please see link at bottom by This paper has been created by the scientific paper generator https://pdos.csail.mit.edu/archive/scigen/

Btw somehow one of your posts got featured -> https://steemit.com/technology/@technology-trail/technology-trail-featured-authors-and-posts-4-23-18

This is funny.

hey you changed your comment :P For specific journals in certain fields the peer review process is really bad so you can get away with these type of things. But normally you will get caught eventually. :P

You got a 24.39% upvote from @ubot courtesy of @multinet! Send 0.05 Steem or SBD to @ubot for an upvote with link of post in memo.

Every post gets Resteemed (follow us to get your post more exposure)!

98% of earnings paid daily to delegators! Go to www.ubot.ws for details.

You got a 6.29% upvote from @brandonfrye courtesy of @multinet!

Want to promote your posts too? Send a minimum of .10 SBD or Steem to @brandonfrye with link in the memo for an upvote on your post. You can also delegate to our service for daily passive earnings which helps to support the @minnowfund initiative. Learn more here