Looks like this is the entire Lioness theme. Great series! Man, Zoe does a great job of showing what it could be like to be such a badass agent, as she is on the show, while still cracking up when it comes to her family. Love this series.

That's not necessarily true. Even just a risk management strategy is helpful. It can be as simple as a limit on losses or a limit on what you enter with. It's still gambling, but you can take precautions.
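A minimal TypeScript sketch of the two precautions mentioned: a cap on total losses and a cap on what goes into any single entry. The `RiskLimits` and `Session` names and the numbers used are hypothetical, chosen only for illustration; this is not a strategy the post prescribes.

```typescript
// Hypothetical risk rules; names and values are illustrative only.
interface RiskLimits {
  maxLossPerSession: number; // stop once cumulative losses reach this amount
  maxStakePerEntry: number;  // never put more than this into a single entry
}

class Session {
  private netResult = 0; // running profit (+) or loss (-) since the session started

  constructor(private readonly limits: RiskLimits) {}

  // True if an entry of `stake` respects both limits.
  canEnter(stake: number): boolean {
    if (stake <= 0 || stake > this.limits.maxStakePerEntry) return false;
    // Assume the worst case (the whole stake is lost). Losses are measured
    // from the starting balance, so earlier winnings give extra room.
    return -this.netResult + stake <= this.limits.maxLossPerSession;
  }

  // Record the outcome of an entry: positive for a win, negative for a loss.
  record(outcome: number): void {
    this.netResult += outcome;
  }

  get net(): number {
    return this.netResult;
  }
}

const session = new Session({ maxLossPerSession: 100, maxStakePerEntry: 25 });
console.log(session.canEnter(30)); // false: above the per-entry cap
session.record(-25);
session.record(-25);
session.record(-25);
session.record(-25); // net is now -100
console.log(session.canEnter(1)); // false: would push losses past 100
```

Checking the worst case before entering, rather than after a loss, means the loss limit can be reached but never overshot.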

Speed has been my rolling formation. Some call me fast and furious, but some say it's a dead race... What do you call me?

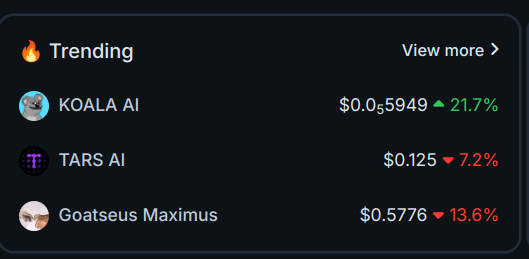

I'll also take the chance to adjust my crypto portfolio. Believe it or not, I had a little money left over this month, so I'm going to buy a bit of $BTC, $ETH, $RUNE and $HBD.

Hello community, I want to tell you that I have discovered a new passion in archery. I love that practicing it makes me conscious and present; it quiets my thoughts, which lets me exercise serenity.

#memories #Spanish

This thread works by asking everyone a random question on different topics, and they can share their thoughts and ideas about it.

This could be anything except personal questions! It could be lifestyle, finance, productivity, or anything you want!

I will start the thread by asking random questions, and everyone can answer them. The asking part is not limited to me: everyone who joins the discussion can also ask questions, and everyone can answer. This way, we will discuss different topics at once and share ideas.

Don't forget to use our tag #randomthreadcast and invite your friends so they can join the discussion 🚀

Food, massage, and swimming pools (or any kind of immersion in water). I find all of those relaxing. Meditation can help, but I have to already be in a relatively calm state before starting.

Nearly lost my left arm 😅 Maybe my muscles weren't in the right place, but I guess now they are. It was a strong massage, but I think afterward it was beneficial, and I would probably go again.

On October 28, 1886, the Statue of Liberty was officially dedicated by President Grover Cleveland in New York Harbor. The statue, a gift from France, was meant to commemorate the friendship between the two nations and became an enduring symbol of freedom and democracy in the United States.

What emerging technology are you most excited about, and why?

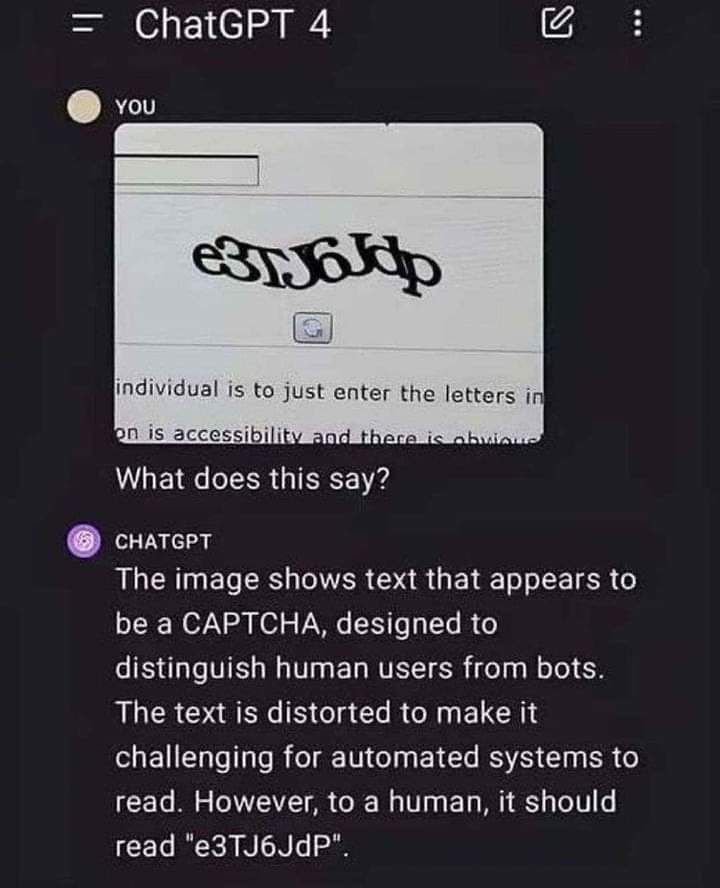

Of course, the answer will be obvious. I'm so excited about using AI in our daily lives to boost our productivity.

I don't use AI to replace my work, and I never thought of AI doing that. I think it can be a great assistant and help guide us to accomplish our tasks quickly.

#ai

Everything related to space. I think it will have a big impact on our knowledge of the universe. Also, some practical applications are being tried, such as generating solar power in outer space, where it's never "night" or cloudy.

Cool one. I am also interested in solar power. It's a very good alternative source of electricity, and they say you can save a lot of $$$ by using it in the long term.

How do you find balance between your work and personal life?

This question is not limited to those who work full-time. Everyone can answer, even if you are a student. I always find balance by setting aside time for myself and never forgetting my "me time".

No matter how hard the work is or how many tasks I have, I do not let myself work nonstop every day. I make sure that I have time for myself, friends, family, and loved ones.

What's one habit you've adopted this year that has significantly improved your life?

The habit I recently adopted that has improved my life is writing consistently. I always do my best to find time to write, to improve my vocabulary and creativity.

This consistent writing is not only in the form of blog posts or long-form content; I also take advantage of short-form content platforms such as InLeo to share my ideas and thoughts.

I always see it as an opportunity to build my "second brain" on the blockchain, one that I can go back to later.

On July 9, 1776, Colonel John Neilson gave one of the earliest public readings of the Declaration of Independence in New Brunswick, New Jersey, marking a historic moment in American history. 📜🇺🇸 #americanrevolution #facts

BMW is making a bold move in automotive design. The company has announced that its future gas-powered cars will adopt the same Neue Klasse design language as its electric vehicles.

So you all probably know Gotye's "Somebody That I Used to Know." Released in 2011, the song topped charts in 23 countries and has been streamed billions of times. But here's the shocking part: Gotye (real name Wally De Backer) didn't get rich from it.

I wrote a short blog post about this interesting story.

#linkincomments #gotye #musicfacts #behindthesong #somebodythatiusedtoknow #freecompliments

Hope you'll find some fun in my madness, and hopefully I'll find some fun in it as well, when I'm finished. In the meantime, the fact that I'm doing this at all is a testament to how weak and pathetic I am. None of our forefathers acted out like this. I'm simply a shame to those around me.

I should be the one who passes, but as I said elsewhere, that would be too easy. This is not how a man (or frankly, a normal human adult) thinks. What in the hell is wrong with me yet again... I know what is, and it's pathetic.

I'm not as good a person as I may seem here on Threads. People can be good in one place and terrible in another. It's okay to live, even if you feel you don't deserve to.

There's at least one person who will be sad if you're not there anymore...

How can my family possibly be proud knowing that this is what they raised? All their intensive efforts to create a functional human being have been reduced to this total waste. And the sad thing is that they might even blame themselves to any degree when the truth is that it's all me. While I seek to improve, I hope that my failures as a human being, as a son, and as a man will not reflect on them.

Let those who read this laugh, not pity me, because I deserve no pity. I let myself out of my own control, like a child who does not know any better. Even in my normal state, I do not behave like a man. This is not to be pitied or sympathized or empathized. I do not deserve that. I deserve the pain of consequences until I change my ways. A LOT of pain.

Men did what they were supposed to do without a second thought. It comes naturally to them. Not to a moron like me. What kind of a piece of 💩 am I to not be able to do the same? I have gone so wrong, so bad. What a waste of a life.

Maybe someday I'll be a man, but it'll most certainly involve me never doing something like this again, or even getting it into my mind that this is remotely acceptable. How sad is it that I had an entire venting threadcast inspired by my insipid moaning and whining? Can I ever even be a man having behaved like this? I doubt it, but I certainly must try by acting the right way. Even if I'm never truly a man, at least that would be the right step to take.

It IS sad, but not as pitiful as you might imagine... We all have bad days like this (and the lucky people who don't have them are just lucky). I'd be happy if someone made a threadcast like this for me, and that's why I did this for you.


I shouldn't be pitied, but spit upon and laughed at until I behave the way I should. Some people can only learn this way. I can't possibly be happy that I devolved into this. I've burdened you enough. I shouldn't be responding back to your original threadcast, but rather to myself. Sorry to you, and sorry to everyone else. And most importantly, to those close to me. Something I must show through action.

That's right, beat yourself up harder, you moron. That'll solve all your problems. Hitting yourself will really do the trick, won't it? Doing that instead of productively working out how to fix yourself. What a goddamn idiot.

Maybe if you beat your own brains out to the point of permanent traumatic brain injury you'll be less of a burden than someone who's self-aware enough to continue doing the damage you're doing. Or, instead, you could act like a man and solve the problems that you create! Stupid little child, little boy.

Stop looking at your replies for attention, you attention-seeking little crap. Nobody cares, nor should anyone care to give you the time of day, or even a fleeting moment's thought. Go away. You're unliked and unwanted.

Who are you to ever criticize anyone when you're such a complete disaster? I retract all of my negative criticism of others. It is invalid. Only rational, grown adults should have their criticisms validated.

Once I grow up and change myself into an actual man, then my new thoughts might be valid. Assuming that actually happens.

Apologize to your family, not seeking their forgiveness, but rather to ensure that they are at peace. You deserve no peace of mind for what you've done to them. Your rights to peaceful living should be permanently revoked. Give it to your family instead, the ones who actually deserve it, and from whom you've taken so much, too much, you disgusting leech.

Shut yourself up already. Nobody cares. Go do something useful. Get off of Threads. Quit this and go help where you're needed. Why do you have to be told to do this? Even non-human animals have a natural instinct. Are you that utterly stupid? What a broken little chump. It's laughable that I ever, for a single fraction of a second, could've thought of myself as a man.

Especially acting out like I am now. Men don't do this.

Even so I believe I have wronged you. I will not forgive the multitude of transgressions. It was not one mistake, but an accumulation over time. There's a point at which no amount of love is enough, especially from a stranger. I should finish and close myself off from this account, if I had the least bit of decency. I don't think I do.

I think I'm okay these days, but I've had days where I think like this a lot. I won't claim I understand what @freecompliments is going through right now, but I've thought like that sometimes, and I'm okay now:

I would normally comment how it's ok, but I'd be a hypocrite for doing so. Worth ignoring and laughing instead. Nothing I've said has been of value or truth since it's clearly not working. I hope I haven't hurt too many people in the process. I know some have been hurt. I can't forgive that fact.

Chuck Norris once went on a bicycle ride and accidentally won the Tour de France. Credit: blumela @logen9f, I sent you an $LOLZ on behalf of ahmadmanga

I can't wait to view this from Snaps, where I'll actually be able to see the whole thread and won't have to wait 30 seconds for what I type to show on the screen.

@pepetoken just sent you a DIY token as a little appreciation for your comment dear @falcon97!

Feel free to multiply it by sending someone else !DIY in a comment :) You can do that x times a day depending on your balance so:

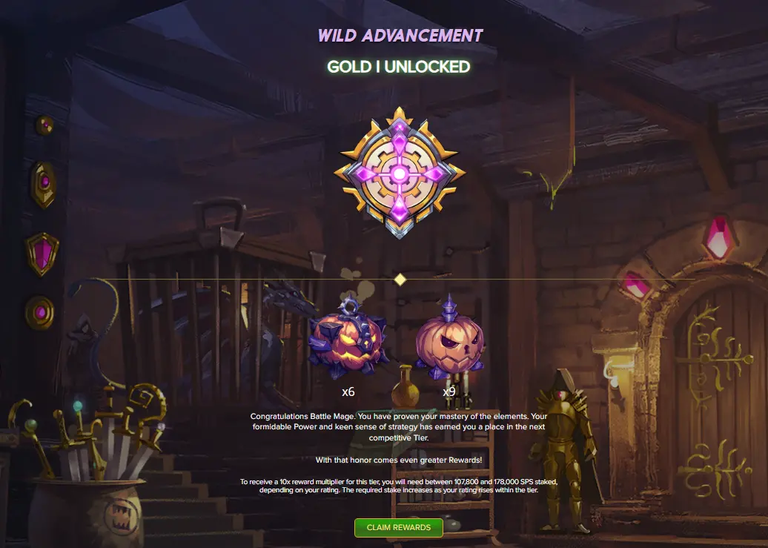

A new game feature rewards players when they move up a league, encouraging higher-level play. I recently advanced to Gold One and received 15 reward cards as part of this update.

Using potions to boost chances, I hoped for legendary or gold cards but received regular rewards. This addition benefits gameplay progress, motivating players to aim higher.

1/3🧵 It's not hard to stop and reflect on our purpose in life and the legacy we’re leaving for future generations. Looking at this daily routine that we all share, it seems like a sad, hectic, and uninspiring life. Do we really live just for this?

2/3🧵 There’s a cycle that traps us in the pursuit of a better life, and there's nothing wrong with that, but it shouldn’t be our only purpose. Of course, each person has their own, and that’s a personal mindset. But I believe we can be better, leave a legacy of good examples to be followed, and transform, even if just a little, the world we live in.

What do you get if you cross a bullet and a tree with no leaves? A cartridge in a bare tree. Credit: reddit @bradleyarrow, I sent you an $LOLZ on behalf of ben.haase

Google is expanding “Help me write” to Gmail on the web, allowing users to whip up or tweak emails using Gemini AI. Just like on mobile, users will see a prompt to use the feature when opening a blank draft in Gmail.

Humans living in the high altitudes of the Tibetan Plateau, where oxygen levels in the air are notably lower than where humans usually live, have changed in ways that allow them to make the most of their atmosphere. Their adaptations maximize oxygen delivery to cells and tissues without thickening the blood. These traits developed due to ongoing natural selection. Learning about populations like these helps scientists better grasp the processes of human evolution.

In addition to generating images, Meta AI helps in various everyday situations, such as creating to-do lists, carrying out research and much more. The features are available through the generative AI-powered virtual assistant built into WhatsApp, Instagram and Facebook.

To request a list of tasks for the day, the user can ask Meta AI through a prompt such as “Create a list of tasks to accomplish in 30 minutes”, or “From activities x, y, z, suggest a list of tasks to be completed in 2 days”.

Meta's AI can also assist users who are having difficulties with other devices through prompts such as: "How do I configure [device] for [function/task]?", for example.

For both simple everyday questions and questions about school, work or college, users can enter prompts such as: "Explain the meaning of a word/phrase", or "What is the relationship between concept 1 and concept 2?".

It is possible to ask Meta AI for suggested answers. The user can enter the prompt "Suggest an answer for this [question/situation]" or, within a WhatsApp conversation, invoke the AI with the command “@MetaAI” in the chat and request the text they need.

5. Assist in creating study plans and questionnaires

For those who need help organizing and dedicating themselves to their studies, it is possible to request study plans from Meta AI such as: “Create a study plan for [deadline/duration]", or “Create a questionnaire with 5 questions about [subject]".

The user can request ideas for personal or professional projects. You can prompt with “Help me create a project that meets [need]" and "Develop ideas for a project with limited resources."

To help organize their routine and get meal ideas, users can give Meta AI prompts such as "Create a recipe with [fresh/seasonal ingredient]" or even "Help me develop a recipe without [prohibited ingredients]".

No. The system is designed and built upon regulation. Everything is stifled there. There is no culture of success à la Silicon Valley, where innovation reigns.

It is the woe of a communist system. When the government is heavily involved, as it is in the EU (and in national governments), it is curtains for innovation. It is really that simple.

China has the CCP, but it is innovating (along with stealing tech). Of course, that could change too, since they are throwing the likes of Jack Ma in jail.

However, you only need to look at the Chinese automakers compared to the European (mostly German) to see how the latter is getting its ass kicked.

Well, the US is dividing. European thinking is penetrating certain aspects of the US mindset. However, this is not the case with people like Elon and the companies that left California.

I think we are going to see more innovation in the US shifting around.

OpenAI's $6.6 billion fundraise earlier this month was a statement about where AI will create value and who stands to capture it. Its investors are betting that AI is so transformative that the usual rules of focus and specialization don't apply - the first company to achieve AGI will win everything. However, there are many barriers to overcome before its goal can be achieved. This article looks at the challenges that OpenAI faces and where the most promising opportunities in AI for investors and startups lie. New technologies, no matter how revolutionary, don't automatically translate into sustainable businesses.

There is some validity to this article in my opinion, while also having a basis in nonsense.

For LLMs, the value is not in that layer but what is built on top. The LLM is basically a commodity since they all are essentially training on the same data.

This is where the social media companies have an advantage. They can roll features out to users through their existing platforms. OpenAI doesn't have that. That means it lacks a consistent (free) flow of data, along with the ability to easily direct users to its services.

For example, Grok just rolled out image understanding. This means X Premium users can upload an image and get an explanation. What does OpenAI do with this feature?

That said, the major cost is in building infrastructure. That is where we are at.

A proposal to split JavaScript into two languages has been presented to Ecma TC39, the official standardization committee for JavaScript. The proposal argues that the foundational technology of JavaScript should be simple because security flaws and the complexity cost of the runtimes affect billions of users. New language features only benefit developers and applications that actually use those features to their advantage; adding them almost always worsens security, performance, and stability. The proposal suggests changing JavaScript's approach to one where most new features are implemented in tooling rather than in the JavaScript engines.
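To illustrate the general idea of a feature living in tooling rather than in the engine, here is a TypeScript sketch using optional chaining. The feature is chosen only as an example; the post does not say which features the proposal targets, and the `User` type and function names are hypothetical. The first function uses the newer syntax; the second spells out roughly the same logic with long-standing core operators, which is approximately what a compiler emits when it targets older JavaScript versions.

```typescript
// Hypothetical data shape for the example.
interface User {
  profile?: { name?: string };
}

// Newer syntax: optional chaining (`?.`) short-circuits to undefined
// when the left-hand side is null or undefined.
function nameWithSugar(u: User | undefined): string | undefined {
  return u?.profile?.name;
}

// The same logic written with only older core operators, approximately
// what a compiler produces when it lowers `?.` for an older target.
function nameDesugared(u: User | undefined): string | undefined {
  const profile = u == null ? undefined : u.profile;
  return profile == null ? undefined : profile.name;
}

console.log(nameWithSugar({ profile: { name: "Ada" } })); // Ada
console.log(nameDesugared({ profile: { name: "Ada" } })); // Ada
console.log(nameWithSugar({}) === nameDesugared({}));     // true
```

In a model like the one the proposal describes, engines would only need to understand the second form, while a build step would translate the first before the code ships.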

The European automotive industry's slowdown has affected the growth of the auto engineering business for top software service providers in the first half of FY25.

Top IT giants Tata Consultancy Services (TCS), Infosys, and HCLTech reported softness in the automotive sector, particularly in Europe, during Q2. This was attributed to ongoing supply chain challenges and regulatory shifts.

The European regulatory push toward electric vehicles (EVs), which have lower margins, coupled with intense price competition from China, has dampened new car demand, prompting higher technology investments. The impact is visible in the financial results of major automakers like Volkswagen, Stellantis, Mercedes-Benz, and Porsche, which reported lower-than-expected profits.

Salil Parekh, CEO and MD of Infosys, noted a slowdown in Europe’s automotive sector, stating, "We have seen slowness in the automotive sector in Europe...The European automotive sector faces recent challenges, while discretionary spending remains under pressure. We see opportunities in supply chain optimization, cloud ERP, smart factories, and connected devices across various sub-verticals."

Similarly, HCLTech CEO C Vijayakumar remarked, “There is pressure in automotive, especially in Europe, reflected in our numbers this quarter and likely in the next as well.” Vijayakumar added that cost-cutting measures among some major clients have led to project cancellations.

Despite the automotive slowdown, IT leaders remain optimistic about manufacturing growth outside the automotive space. Wipro CEO and MD Srinivas Pallia highlighted opportunities in software-defined vehicles (SDVs) and cloud-based car solutions on the engineering front.

According to Pareekh Jain, founder and CEO of IT research firm EIIR Trend, the automotive tech business for Indian IT services firms comprises roughly 50-60% of manufacturing revenues—a significant vertical, contributing about 15% to India’s $250 billion outsourcing industry. This places India's automotive tech and engineering sector at approximately $20 billion. “The automobile industry has seen tailwinds over the past three years, but the momentum is shifting. Incumbent OEMs are facing challen ..
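The approximately $20 billion estimate can be checked against the other two figures, assuming the 15% share refers to the manufacturing vertical (the reading that matches the final number):

$$
\text{Manufacturing} \approx 0.15 \times \$250\,\text{B} = \$37.5\,\text{B}
$$

$$
\text{Automotive} \approx (0.50 \text{ to } 0.60) \times \$37.5\,\text{B} \approx \$18.8\,\text{B to } \$22.5\,\text{B}
$$

This range brackets the quoted figure of roughly $20 billion.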

For the top six India-based companies—TCS, Cognizant, Infosys, HCLTech, Wipro, and Tech Mahindra—FY24 revenues totaled around $97 billion, with manufacturing revenue comprising approximately $13 billion.
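For comparison, the manufacturing share for these six firms works out to:

$$
\frac{\$13\,\text{B}}{\$97\,\text{B}} \approx 0.134 = 13.4\%
$$

This is slightly below the roughly 15% share cited for the outsourcing industry as a whole.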

Mid-sized, automotive-focused engineering firm KPIT Technologies experienced a more pronounced impact, with pressures expected to persist over the next two quarters. Europe and the UK, which represent over 40% of KPIT's revenues, showed a decline for the first time since the pandemic.

KPIT CFO Priyamvada Hardikar noted the challenges facing the mobility industry, particularly in the automotive sub-vertical, as it contends with regulatory changes, rising vehicle costs, and shifting consumer preferences. “In Europe, OEMs are facing financial turbulence...The financial situation of some U.S. clients also adds to this uncertainty,” Hardikar commented, adding that the company is working closely with clients to prioritize and adjust delivery strategies, which may lead to delaye ..

The lag effect became evident in technology service companies' July-September quarter results, as auto manufacturing growth declined. This overhang is anticipated to persist into the third quarter ending December. While the manufacturing segment remained stable for the top three players, Tech Mahindra, the fifth-largest, reported a 4% decline in its manufacturing vertical due to automotive sector softness. Analysts observe that headwinds led to a 0.3% quarter-on-quarter (QoQ) decline in auto ..

The Tesla Cybercab, the autonomous taxi with no steering wheel or pedals that Elon Musk presented to the world at an event in early October, appeared "in broad daylight" for the first time last Saturday (the 26th).

The robotaxi was the main attraction at "Frunk or Treat", an event held at Tesla's Gigafactory in Texas (USA), whose main purpose was to give the public a glimpse of what Elon Musk's automaker is preparing to bring to market.

This was the futuristic model's debut in a daytime setting, since the party where it first officially appeared was held at night and in a studio, which made it hard to see Tesla's autonomous taxi in full.

Now, although it was placed under a tent to protect it from the sun, the Cybercab finally showed off its lines without any disguise or secrecy. What could be seen is that, although less striking than the Cybertruck, for example, the autonomous robotaxi is also impressive in its design.

Elon Musk would not commit to an exact launch date for the Tesla Cybercab, but projected that the autonomous taxi could officially reach the market around 2026, priced below US$30,000 (about R$170,000 when converted).
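The price conversion quoted above (US$30,000 to about R$170,000) implies an exchange rate of roughly 5.7 reais per dollar:

$$
\frac{\text{R}\$\,170{,}000}{\text{US}\$\,30{,}000} \approx 5.67\ \text{BRL/USD}
$$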

According to the billionaire businessman, the rollout of the Cybercab will significantly reduce the cost of public transport, and for that reason investors should have no doubt about the success of the brand's new venture.

Apple Intelligence has finally launched in US English, and if you’re in the EU, you’ll be able to use the new AI features on your iPhone and iPad starting in April, according to an Irish Apple newsroom post.

When the features roll out to iPhones and iPads in the EU, they’ll “include many of the core features of Apple Intelligence, including Writing Tools, Genmoji, a redesigned Siri with richer language understanding, ChatGPT integration, and more,” Apple says in the post.

However, if EU users want to get a taste of Apple Intelligence sooner, they can try the initial features on their Mac that are now available with macOS Sequoia 15.1. That first batch of features includes AI-powered writing tools, improvements to Siri, and email summaries in Mail.

Apple also announced that Apple Intelligence will launch in localized English in Australia, Canada, Ireland, New Zealand, South Africa, and the UK in December. Presumably, they’ll be included with iOS 18.2, which is set to add a bunch more Apple Intelligence features like Siri’s ChatGPT upgrade.

I haven't read into the story, but we live in a world where people do not like to take accountability for anything. It is awful that people are committing suicide, especially at such a young age.

However, to state that it is AI's fault seems like a stretch.

I wonder what kind of nonsense this kid was filled with as he grew up.

Alright, Task. To summarize quickly: the boy created a kind of "Daenerys Targaryen" character, and as their interactions progressed, he ended up falling in love with this AI-created character.

His mother claims that the AI company and Google are to blame. In my opinion, they are not to blame for anything. The site is prohibited for anyone under 18 years of age.

But as I mentioned earlier, as more sensitive people gain access to AI, this kind of thing can happen. Just look at how people are currently dealing with some of the problems in their lives.

Depression is something that many people are not taking seriously, and this mother was not very present in the boy's life.

It is not the AI's fault, in the same way that a gun is not to blame for a murderer shooting at someone.

I often think about this. Soon the majority of jobs will likely be redundant (including mine). What are your plans/tips for how to prepare, and what to do when it happens?

Hopefully there are many years before this happens, but I want to start preparing now, just in case it happens sooner.

The earlier you prepare, the better. I have been looking into the business world to spot sectors where AI will work alongside people rather than replace them.

#freecompliments #gmfrens

I purchased some land and am building a homestead. Between solar, animals, fruit trees and a huge garden, I plan on being as self-sufficient as possible. Because the rich sure as shit aren't going to help those who lose their jobs to technology.

People are so generationally brainwashed by the way society/civilization is structured presently that they can’t even imagine what life could be without being a labor force… it’s kinda sad to me that people are more afraid of NOT HAVING A JOB and less excited about the possibilities of FINALLY HAVING A FULL, UNINHIBITED LIFE. When society restructures, money, politics, status, and materialism will all have to change too… we’ll hit a new era, similar to the ways we have in the past for thousands of years. We will all find the new normal and have a hand in creating it.

The present global turmoil and unrest is the beginning of these changes. It will all be gradual, but I believe that one day everyone will just have what they need and live how they wish within the rules of the new society. Then humanity will finally be free to figure out its true purpose, to ponder higher thoughts, and to begin to evolve our intellect and spiritual beings in ways we have never been able to before, free from the shackles of the rat race, the stress of work, the hardship of bills, etc. Personally, I’d rather focus on that potential than on “what am I ever going to do?”

My personal plans? I'm hoping to be retired by then and live a comfortable if frugal lifestyle off my pension and savings.

My job is absolutely one that can likely be easily automated in the next five or ten years, so I'm fortunate in my timing to (hopefully) get out while the getting is still good.

Start growing your own food, and collecting rainwater, and power… hopefully human needs will be made human rights without a fight, but I wouldn’t put all your eggs in one basket.

I think we might have to worry about pensions and savings (and, really, a lot of things that we take for "normal" right now) if the transition to automation is disruptive to the economy. (And wouldn't it have to be?)

It already happened to me, so I got a new job. Retraining from scratch. And I guess that's the game until my back gives out and they toss me down a hole.

Unfortunately, this is out of reach for most people due to costs and limited income.

Prices for land, houses, solar, etc. are rising rapidly.

Add substantial land for farming, grazing, barn, storage. Some farming equipment + storage. Land plots that size alone are at 300-500k already.

Even if I chose a countryside location, I'd be looking at €1+ million for a functional, self-sufficient life. I won't save that much before I'm way too old, and I already have a well-paid job.

I wouldn’t prepare for that; get used to the idea of having to keep going to work. Jobs will change, but technology won’t leave the majority unemployed.

Technology replaces jobs all the time. It just makes people more efficient. One person can do the job that used to take 2, 3, or 10 people. But more jobs became available. Like you said, there are 8.2 billion people now, and there are billions of jobs. But there were only about 4 billion people 50 years ago, so roughly half as many jobs. And look at all the technology that arrived in those 50 years, making people more efficient, yet there are still roughly twice as many jobs now.

The trick is to be useful and always be willing to learn the new stuff. Don't get stuck in a rut saying things like "we never needed computers back when I was working in " those people get replaced because they refuse to be fluid.

Right now, we have the system set up where the majority of people work to live.

That means your average person's ability to consume goods, spend money, and be an overall contributor to the economy depends on them being employed the majority of the time.

If you break that connection, you could have major issues. The numbers can't go up if people can't buy things. So, if entire sectors automate rapidly, that could get... interesting.

I worked for 18 years on the copper telephone network, and people who really know that work are increasingly rare. I'm taking advantage of my layoff to try to move into electrical and fiber work.

Hard to say. At some point in the past, the people who worked as "knocker-uppers" were replaced by alarm clocks. My guess is that they got dispersed back into the job market, the same way we all will be now. The biggest difference: there are no more jobs, or maybe there are new jobs that we do not know about yet.

in every automated work process, there is a human, making it happen. evolve with the technology, to control the technology.

i know that the vision of a utopian society has humanity running around being care free beings, following our dreams and desires BUT...

capitalism will never allow it.

that being said, the world will be a used-up ball of dust before robots replace humans. take self-driving cars, for example. there will never be a safe self-driving system until ALL vehicles are on the same closed network. nostalgia, competition, and the loss in capital funds will never see it happen in our lifetime.

Unfortunately, it is going to get very rough for a lot of people because the Gov is going to drag their feet for a while before coming up with a mid-tier or lesser plan and implement it. So I would suggest doing the following now while you have income coming in: Pay off debts, have diverse investments in physical and paper assets, save money, figure out something you can do to make income if you lose your job.

Tech replacing jobs is nothing new - it has been happening throughout history. Guys making stone axes were put out of work by the bronze axe makers. Saddlers and farriers became a niche industry when the motor car became popular.

I work in graphics and I trained before PCs became widely used. Everything I learned is completely irrelevant now. The PCs I use and the software changes completely every 5 years. I keep learning. Now we're supposed to be scared of AI taking our jobs. Guess what - I'm learning how to use AI. It's just a new tool like so many new technologies. I've never been out of a job in 45 years.

It happens periodically. I had a job as a security guard years ago (circa 2008) and one of the older guys I worked with lost his job years before due to digital photography. There was no demand for people who can develop film anymore so he retrained to be armed security. Funny enough, developing film has become a niche market now so maybe he’s back to doing it on the side again.

Point is, specific jobs disappear but human labor is a very long way from becoming obsolete.

Governments and experts will have to radically transform how our economies currently work. The economy is already straining under this transition. Automation stopped being a boon for the economy 50 years ago. We will soon be at the breaking point... Much like climate change, however, folks (including the citizens) won't seriously consider it until we are in crisis. No personal planning can avoid an economic crisis, except perhaps becoming a hermit or prepper, and the majority of folks don't have that option even if they wanted it.

The cost of a good is a function of its scarcity. Once AI makes most things cheap, we will find new shiny baubles to chase after, and the fact that they are expensive will be what makes us want them more (their high cost allows us to parade our social stature in front of others as we try to climb the social hierarchy). Since humans remain the rate-limiting factor in much of production, things that are authentically made by humans, even if they are not necessary, will be what we value and spend money on to maintain our sense of prestige. It’s frankly absurd, but it keeps us on the hamster wheel. Just look around you: how much of the junk you have do you REALLY need? We’ll just find new things we think we need as the things we have become abundant and cheap due to AI.

You are severely overestimating technology and underestimating how useful you are in your job. “Soon,” no. “Majority of jobs,” no.

You’re wasting your time with doomsday prep. But if you want to prepare, invest for retirement and pursue higher education. The higher skilled you are, the more irreplaceable you are.

Learn to code. Provided that AI doesn’t become our overlord (in which case we will have worse problems), knowing how to write code to leverage AI will set you apart. The world is changing. Similar to what happened with the dot-com boom, the people most capable of adapting will be the ones who secure their future. Unfortunately, not everyone can do this.

I think this is getting blown out of proportion. Keep in mind, assuming all/most jobs were replaced, there would be no one to buy said products. If no one is buying, then no one is making either.

I have told this before to people who are concerned about AI and automation replacing their jobs.

Unfortunately, the days when people could put in an honest day’s work and go home at the end of the day are quickly disappearing. It’s very dystopian, but we’re not going to go back to that, unless, of course, there’s some kind of catastrophe that completely destroys modern living.

Unfortunately, you have to look at it like a machine would: evolve and skill up, or become obsolete. Inflation is rising at such a rate that you will quickly be priced out and end up in poverty if you do not do anything about it.

I’m almost 40 and I have made my living entirely in customer service. Things have changed drastically in the 20 years that I have been working in this field. There is now so much AI, automation, and scripting used for basic customer service and technical support needs that companies have slashed the pay rate for my level of expertise in half.

I went back to school to diversify my skill set and remain relevant. Not exactly what I wanted to do, but it is what it is. I tell the same thing to a friend of mine who makes his living as a loader. All he does is pick up a load from one place and move it to another. He is hoping to retire from the concrete factory he works at, but he has almost 30 years to go. I told him he should not be so certain that his job will still be there, but he has really just blown me off. It would be all too easy for them to build a machine that does his job.

I, too, wanted a simple job that I could go to, put in my 8 to 12 hours, and then go home without giving it any further thought and still be able to afford to live, but that is not the case. So until the economy and the government evolve to support the general public with something like a more universal welfare program, you are going to be out of luck if you don’t try to stay above the wave now.

In the past, technology hasn't simply removed jobs; it has created more. The sewing machine put small-scale seamstresses out of work but created factory jobs. The mechanization of farm equipment removed the need for so many farmers, so people flocked to cities to work in new industries. Generally, technology takes the jobs we hate to do and gives us the freedom to do the jobs we like, such as problem-solving, services, travel, art, and entertainment. Sure, AI may be a good resource in the classroom, but are parents really going to be OK with having no human teachers to foster social connection and development? Who will make sure the machines are running properly and fix any bugs or errors? I think humans will always be needed in the age of technology, and if not, we will want to do work that helps our communities flourish.

Technology has been replacing jobs for hundreds of years and yet somehow everyone still has jobs.

Sure you need to be aware of advances that may impact you / your particular job but people can re-train or switch in that scenario if they've got a bit of a financial buffer or have been proactive about learning other skills etc.

Honestly, if mechanized labor replaced human labor, you'd think that humans could all live like we were meant to, without having to debase ourselves for money to survive. Somehow, though, in this timeline it seems likely that the billionaires would try to keep everyone in poverty even harder than they already do... at a certain point, it's like, they still live on Earth, you know? Like, they have an address.

A job is just an area of responsibility and a collection of associated tasks. AI will start to make several tasks easier and let one person take responsibility for more of them, but jobs certainly won't be eliminated entirely.

Use AI to get better at your job, and you'll probably be safe. If not, then use AI to retrain and upskill for a better job.

This is an age of massive opportunity, but only for those who act accordingly.

Look for where the new jobs come from. People asked the same question as the Industrial Revolution started to push people off the farms and into the factories. And again, the world was supposedly ending with the steam age, the motor car, computers, the internet, and now AI. If you want a job, you will get one; just keep learning.

Retrain, or start a company that has AI bots do your old job while you focus on its human elements, like finding suppliers or signing up customers. It really depends on where you came from and where you want to go in your working life.

We will become more like the way the Greys are portrayed, since we will not be burdened by physical work or wars. Our brains will then start growing in size as we work the mind and spirit. Then some of us will discover time travel, come back to see our warring ways, and accidentally trigger God stories and Area 51.

Maybe the great flood was the result of time travel triggering a new timeline... who knows, hahaha.

Tech is movin wayyy faster than any of us expected. One way to prep is to focus on skills that machines aren’t great at yet: creative stuff, human-centered work like counseling or social work, and stuff that needs real-world adaptability. Also think about learning how to work with tech, like getting into AI tools or automation, so you're the one managing the robots instead of gettin replaced by ‘em.

Don’t worry: with AI robots and drones around, it’s not like we aren’t going to face an extinction-level threat. You know, climate change would be the kinder death.

Becoming closer to nature. Self-sufficiency and culture are probably becoming more important as well (personally speaking). I don't completely like it, but when all or most jobs finally become redundant, we may either end up in conflict or learn to live in harmony with each other; how that phase goes will probably decide how humanity progresses.

It’s become a common refrain in political discourse: Europe needs to take radical action to remain competitive. On the long list of potential reforms, one that’s gaining particular traction is a new, EU-wide corporate status for innovative companies.

Known (somewhat obscurely) as the “28th regime,” the innovation is being billed as Europe’s answer to a Delaware C-Corp, and would add to what already exists in the EU’s 27 member states. It is now supported by an entrepreneur and VC-supported grassroots movement that also brought along the much more palatable name of “EU Inc” — and some unexpected momentum. Launched on October 14, the EU Inc petition has already attracted some 11,000 signatures.

Meta's attempt at replicating Google's NotebookLM is called NotebookLlama, and the project uses Meta’s own Llama models for much of the processing, unsurprisingly. Like NotebookLM, it can generate back-and-forth, podcast-style digests of text files uploaded to it.

NotebookLlama first creates a transcript from a file — e.g. a PDF of a news article or blog post. Then, it adds “more dramatization” and interruptions before feeding the transcript to open text-to-speech models.
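The three-stage flow described above (transcript, then dramatization, then text-to-speech) can be sketched as a simple function pipeline. This is a minimal illustration under stated assumptions, not NotebookLlama's actual code: `extract_transcript`, `dramatize`, and `synthesize` are hypothetical stand-ins for the Llama and text-to-speech model calls, and they return labelled bytes instead of real audio.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    speaker: str
    text: str


def extract_transcript(document_text: str) -> list[Turn]:
    """Stage 1 (hypothetical stand-in for an LLM call): split the source text
    into sentences and assign them alternately to two hosts."""
    sentences = [s.strip() for s in document_text.split(".") if s.strip()]
    hosts = ("Host A", "Host B")
    return [Turn(hosts[i % 2], s + ".") for i, s in enumerate(sentences)]


def dramatize(turns: list[Turn]) -> list[Turn]:
    """Stage 2 (hypothetical): add a filler interjection to every second turn,
    standing in for the LLM rewrite that adds drama and interruptions."""
    return [
        Turn(t.speaker, ("Hmm, " if i % 2 == 1 else "") + t.text)
        for i, t in enumerate(turns)
    ]


def synthesize(turns: list[Turn]) -> list[bytes]:
    """Stage 3 (hypothetical): placeholder for a text-to-speech model.
    Returns labelled UTF-8 bytes instead of audio."""
    return [f"[{t.speaker}] {t.text}".encode("utf-8") for t in turns]


def make_podcast(document_text: str) -> list[bytes]:
    """Chain the three stages: transcript -> dramatization -> speech."""
    return synthesize(dramatize(extract_transcript(document_text)))
```

Keeping each stage as a separate function mirrors the point the researchers make below: the final quality depends largely on the last stage, so a stronger text-to-speech model could be swapped in without touching the others.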

The results don’t sound nearly as good as NotebookLM. In the NotebookLlama samples I’ve listened to, the voices have a very obviously robotic quality to them, and tend to talk over each other at odd points.

But the Meta researchers behind the project say that the quality could be improved with stronger models.

“The text-to-speech model is the limitation of how natural this will sound,” they wrote on NotebookLlama’s GitHub page. “[Also,] another approach of writing the podcast would be having two agents debate the topic of interest and write the podcast outline. Right now we use a single model to write the podcast outline.”
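The two-agent alternative mentioned in that quote can be illustrated with a small turn-taking loop. This is a hedged sketch, not anything from the NotebookLlama repository: an "agent" is assumed to be any function that takes the topic plus the debate so far and returns its next point, and `stub_pro` and `stub_con` are hypothetical placeholders for two separately prompted models.

```python
from typing import Callable

# An "agent" is any function that takes the topic and the debate so far and
# returns its next point. In practice each would wrap a separate LLM prompt;
# here they are hypothetical stubs.
Agent = Callable[[str, list[str]], str]


def debate_outline(topic: str, pro: Agent, con: Agent, rounds: int = 2) -> list[str]:
    """Let two agents take turns; every point becomes one outline entry."""
    outline: list[str] = []
    for _ in range(rounds):
        for label, agent in (("Pro", pro), ("Con", con)):
            # Each agent sees everything said so far before responding.
            outline.append(f"{label}: {agent(topic, outline)}")
    return outline


def stub_pro(topic: str, history: list[str]) -> str:
    return f"point {len(history) + 1} in favor of {topic}"


def stub_con(topic: str, history: list[str]) -> str:
    return f"point {len(history) + 1} against {topic}"
```

The difference from the single-model approach is that each entry is produced with the other agent's previous points as context, which is what could make the resulting outline read more like a real exchange.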

NotebookLlama isn’t the first attempt to replicate NotebookLM’s podcast feature. Some projects have had more success than others. But none — not even NotebookLM itself — have managed to solve the hallucination problem that dogs all AI. That is to say, AI-generated podcasts are bound to contain some made-up stuff.

In February 2020, then-brand-new chief executive Bernard Looney told the world that BP, one of the oldest and biggest oil companies on the planet, was going to become a net-zero company by 2050. To achieve this, it would slash its oil and gas production by 40% by 2030.

Four years and one major crisis later, BP is abandoning not only its original production cut target of 40% but also a revised, lower target of 25%. BP, in other words, is returning to its roots. Commodity investors who are not paying attention should be; transition investors already are.

“This will certainly be a challenge, but also a tremendous opportunity. It is clear to me, and to our stakeholders, that for BP to play our part and serve our purpose, we have to change. And we want to change – this is the right thing for the world and for BP,” Bernard Looney said back in 2020 when he announced the company’s new course.

There was much enthusiasm in the climate activist world when that statement was made. Activists were not satisfied but did concede that it was a step in the right direction. Investors took the news differently—BP’s shares dropped precipitously immediately following the announcement of the newly charted course before rebounding later in the year.

Then came the pandemic, which decimated demand for energy and led to a price slump that BP at the time seemed to believe the industry would not recover from: in one of its energy outlook editions, it said global oil demand had peaked in 2019 and would never return to those levels. BP still believed it was on the right track with its net-zero plans and a 40% cut in oil and gas production by 2030. And then came 2022.

Oil demand had been rebounding ever since lockdowns began to be phased out. When China joined the party of ending lockdowns, the demand rebound really took off. The war in Ukraine then added supply-security fears to that momentum, producing a price rally that had not been seen in years.

The rally resulted in energy companies becoming the best performers in the stock market, overtaking Big Tech, and in record profits, which in turn led to fatter dividend payouts and massive stock repurchases. It also led to a reconsideration of some of Big Oil’s transition plans. In BP’s case, the latest stark reminder that the world still runs on hydrocarbons prompted the company’s senior leadership to abandon plans to cut its oil and gas production by even 25% by 2030.

All these developments also made investors think again—about energy transitions and the security of energy supply. It made investors think so much that pro-transition outlets are sounding an alarm about oil companies being unserious about the transition and, worse, unclear about the direction of their business, which should make investors cautious.

“A decarbonizing economy threatens the fossil fuel industry’s core business model, and the sector does not seem to be offering a cohesive and consistent plan for navigating this changing world,” the Institute for Energy Economics and Financial Analysis said in a recent report. The report zeroed in on the latest BP news about the U-turn on oil and gas production cuts, suggesting that BP basically had no idea what it wanted to do with its future, and this should make investors nervous about the whole oil and gas industry.

That criticism certainly has a lot of merit in the context of a business world that is firmly on the way to a cleaner, greener energy future because the economics of such a future make sense. The actual business world in which BP and all other companies are operating, however, is different from that vision.

In it, the economics of the energy transition, as envisioned by its advocates and proponents, do not always make sense—which is why BP and other companies are abandoning their initial ambitious targets made, one might say, in the heat of the moment, following years of activist pressure that was warmly embraced by politicians in decision-making positions.

However, once these companies realized their transition efforts were not paying off, they pivoted. One might call it a lack of a “cohesive and consistent plan.” On the other hand, one might call it flexibility in the face of a reality that has proven different than hoped for. In addition to the news about BP abandoning its production cut target for 2030, the company was also reported to be considering reducing its exposure to offshore wind at a time when fellow supermajor Shell was also dialing back its transition ambitions and another fellow supermajor, TotalEnergies, just announced a $10.5-billion oil and gas development in Suriname.

The energy industry, then, appears to have a pretty clear view of the future. Hydrocarbons remain the most widely used energy source on the planet. Their alternatives do not seem to be living up to the hype. Therefore, Big Oil is shrinking its transition ambitions in favor of the business that has proven to be profitable for the companies and their investors. Sometimes, it really is as simple as that.

Google is aiming to release its next major Gemini model in December. Gemini 2.0 will be widely released at the outset as opposed to being rolled out in phases. While the model isn't showing the performance gains experts had hoped for, it will likely still have some interesting new capabilities. It appears that the top AI developers will continue to race to release ever-bigger and more expensive models even as performance improvements start leveling off.

A neuroscientist at Augusta University has captured images of the precise moment brain tumor cells from mice interact, by staining cellular components to reveal disruptions in support and transport structures. The research revealed how disruptions in a protein linking two cytoskeleton components together damage the transport system, similar to what is seen in neurodegenerative diseases. Restoring normal levels of the cytoskeletal proteins actin and myosin allowed the cells to transport their components normally again. The study shows how scientific imaging can help expose biological mysteries.

Elon Musk-owned xAI has added image-understanding capabilities to its Grok AI model. This means that paid users on his social platform X, who have access to the AI chatbot, can upload an image and ask the AI questions about it.

In a separate post, Musk said that Grok can even explain the meaning of a joke using the new image understanding feature. He added that the functionality is in the early stages — suggesting it will “rapidly improve”.

In August, Musk’s AI company released the Grok-2 model, an enhanced version of the chatbot which included image generation capabilities using the FLUX.1 model by Black Forest Labs. As with earlier releases, Grok-2 was made available for developers or premium (paying) X users.

At that time, xAI said a future release would add multimodal understanding to Grok on X and to the model it offers via developer API.

Grok may soon also understand documents, per Musk's reply to a user who criticized the model for not being able to handle certain file formats (such as PDFs). “Not for long,” Musk responded, claiming: “We are getting done in months what took everyone else years.”

This story has been updated throughout with more details as the story has developed. We will continue to do so as the case and dispute are ongoing.

The world of WordPress, one of the most popular technologies for creating and hosting websites, is currently embroiled in a heated controversy. At the center of the dispute are WordPress founder and Automattic CEO Matt Mullenweg and WP Engine, a hosting service that provides solutions for websites built on WordPress.

The controversy has also led to an exodus of employees from Automattic. On October 3, 159 Automattic employees who did not agree with Mullenweg's direction of the company and WordPress overall took a severance package and left the company. Almost 80% of those who left worked in Automattic's Ecosystem/WordPress division. On October 8, WordPress announced that Mary Hubbard, who was TikTok U.S.'s head of governance and experience, would be starting as executive director. This post was previously held by Josepha Haden Chomphosy, who was one of the 159 people leaving Automattic.

The core issue is the fight over trademarks, with Mullenweg accusing WP Engine of misusing the "WP" brand and failing to contribute sufficiently to the open-source project. WP Engine, on the other hand, claims that its use of the WordPress trademark is covered under fair use and that Mullenweg's actions are an attempt to exert control over the entire WordPress ecosystem.

The controversy began in mid-September when Mullenweg wrote a blog post criticizing WP Engine for disabling the ability for users to see and track the revision history for every post. Mullenweg believes this feature is essential for protecting user data and accused WP Engine of turning it off by default to save money. In response, WP Engine sent a cease-and-desist letter to Mullenweg and Automattic, asking them to withdraw their comments.

The company claimed that Mullenweg had said he would take a "scorched earth nuclear approach" against WP Engine unless it agreed to pay a significant percentage of its revenues for a license to the WordPress trademark.

Automattic responded with its own cease-and-desist letter, alleging that WP Engine had breached WordPress and WooCommerce trademark usage rules. The WordPress Foundation also updated its Trademark policy page, calling out WP Engine for confusing users and failing to contribute to the open-source project. Mullenweg then banned WP Engine from accessing the resources of WordPress.org, which led to a breakdown in the normal operation of the WordPress ecosystem. This move prevented many websites from updating plug-ins and themes, leaving them vulnerable to security attacks.

WP Engine responded by saying that Mullenweg had misused his control of WordPress to interfere with WP Engine customers' access to WordPress.org. The company claimed that this move was an attempt to exert control over the entire WordPress ecosystem and impact not just WP Engine and its customers but all WordPress plugin developers and open-source users.

The controversy has had a significant impact on the WordPress community, with many developers and providers expressing concerns over relying on commercial open-source products related to WordPress. The community is also asking for clear guidance on how they can and cannot use the "WordPress" brand. The WordPress Foundation has filed to trademark "Managed WordPress" and "Hosted WordPress," which has raised concerns among developers and providers that these trademarks could be used against them.

On October 3, WP Engine sued Automattic and Mullenweg over abuse of power in a California court. The company alleged that Automattic and Mullenweg did not keep their promises to run WordPress open-source projects without any constraints and to give developers the freedom to build, run, modify, and redistribute the software. Automattic responded by calling the lawsuit meritless and saying that it looks forward to the federal court's consideration of the case.

In conclusion, the controversy between Mullenweg and WP Engine has raised important questions about the control and governance of the WordPress ecosystem. The dispute has also highlighted the need for clear guidance on how to use the "WordPress" brand and the importance of transparency and accountability in the open-source community. As the controversy continues to unfold, it remains to be seen how it will impact the WordPress community and the future of the platform. One thing is certain, however: the battle for control and trademarks will have far-reaching consequences for the entire open-source community.

Paris-based startup Filigran is fast becoming the next cybersecurity rocketship to track: The company just raised a $35 million Series B round, only a few months after it raised $16 million in a Series A round.

Filigran’s main product is OpenCTI, an open-source threat intelligence platform that lets companies or public sector organizations import threat data from multiple sources, and enrich that data set with intel from providers such as CrowdStrike, SentinelOne or Sekoia.
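The import-then-enrich workflow described above can be pictured with a small merge step. This is a minimal sketch under stated assumptions, not OpenCTI's data model or API: indicators are reduced to plain strings, the feed names are hypothetical, and the enrichment provider is modelled as a simple lookup table of tags.

```python
from dataclasses import dataclass, field


@dataclass
class Indicator:
    value: str                                   # e.g. an IP address or file hash
    sources: set[str] = field(default_factory=set)
    tags: set[str] = field(default_factory=set)


def ingest(feeds: dict[str, list[str]]) -> dict[str, Indicator]:
    """Merge raw indicators from several feeds, de-duplicating by value
    and remembering which feeds reported each one."""
    merged: dict[str, Indicator] = {}
    for source, values in feeds.items():
        for value in values:
            merged.setdefault(value, Indicator(value)).sources.add(source)
    return merged


def enrich(merged: dict[str, Indicator], intel: dict[str, set[str]]) -> None:
    """Attach context tags from an enrichment provider (a hypothetical
    lookup table here). Indicators we have not ingested are ignored."""
    for value, tags in intel.items():
        if value in merged:
            merged[value].tags |= tags
```

Tracking which feeds reported each indicator is one way to let analysts see when several independent sources agree, which is part of the value of pulling data from multiple places.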

The open-source version of OpenCTI has attracted contributions from 4,300 cybersecurity professionals and been downloaded millions of times. The European Commission, the FBI and the New York City Cyber Command all use OpenCTI. The company also offers an enterprise edition that can be used as a software-as-a-service product or hosted on premises, and its clients include Airbus, Marriott, Thales, Hermès, Rivian and Bouygues Telecom.

OMG: The Arm vs. Qualcomm legal fight took a nasty turn last week, with Arm reportedly canceling Qualcomm's license to use Arm IP. This news has the makings of some scary headlines, but we think the immediate effects are likely minimal. That being said, it opens up more serious questions about what Arm aims to achieve here and how far they're willing to go to do so.

Does Arm's latest move – canceling a Qualcomm license – imply they're willing to take the very risky step of pushing this lawsuit all the way to a jury trial? At the most basic level, this lawsuit is essentially a contract dispute: Qualcomm pays one rate, and Arm thinks Qualcomm should pay a different, higher rate. But this cancellation clearly implies that Arm could cause deeper problems for Qualcomm, should they choose to.

Arm's Cancellation of Qualcomm License: A High-Stakes Gamble with Uncertain Consequences

In a surprise move that has sent shockwaves through the tech industry, Arm Holdings, a leading provider of semiconductor intellectual property, has cancelled its license agreement with Qualcomm, a major customer and one of the largest chipmakers in the world. The sudden cancellation has left many wondering about the motivations behind this drastic decision, which has already had a significant impact on the global chip supply chain and Arm's relationships with its customers.

At first glance, the cancellation appears to be a classic pre-trial maneuver aimed at gaining negotiating leverage in the ongoing lawsuit between Arm and Qualcomm. However, the move has backfired, with Qualcomm's stock barely budging, while Arm's stock plummeted almost 7%. This unexpected reaction raises questions about the effectiveness of Arm's strategy and the potential consequences of this high-stakes gamble.

One of the primary concerns is the impact on the global chip supply chain. Qualcomm is one of Arm's largest customers, and canceling their license agreement could lead to a significant disruption in the market. If Qualcomm is unable to ship chips, customers such as Apple would be forced to halt production, causing widespread shortages and economic losses.

This scenario would not only harm Qualcomm but also Arm, as the company relies heavily on its customers' success. The cancellation could also lead to a domino effect, with other chipmakers and customers struggling to find alternative suppliers, further exacerbating the disruption.

Moreover, Arm's cancellation of the license agreement may be seen as a hollow threat, as Qualcomm is unlikely to be severely impacted by the loss of this agreement. Qualcomm has a diverse portfolio of customers and a strong balance sheet, allowing it to weather any potential disruptions.

This lack of leverage may lead Qualcomm to view Arm's threat as a bluff, rather than a genuine attempt to negotiate a settlement. As a result, Qualcomm may be less inclined to compromise, leading to a prolonged and costly legal battle.

A more pressing concern is the potential for Arm to take this lawsuit to trial. If Arm is willing to risk the consequences of a cancelled license agreement, it may be seeking a legal victory that would provide a strong precedent for future business model and pricing changes. While this outcome could be beneficial for Arm, it would also come with significant risks, including the possibility of an unfavorable verdict or a lengthy and costly legal battle.

The uncertainty surrounding the lawsuit and its potential outcomes is further complicated by the fact that neither side has a clear advantage. The industry experts who have studied the case closely are still unsure about who is in the right, and the discovery process is likely to reveal more information that could shift the balance of power.

The cancellation of the license agreement also sends a concerning message to Arm's other customers. If Arm is willing to take such drastic action in a dispute with a major customer, what does this say about its commitment to its other partners? The tech industry is built on relationships and trust, and Arm's actions may erode the confidence of its customers and partners. This could lead to a loss of business and revenue, as customers seek alternative suppliers and partners.

In conclusion, Arm's cancellation of the Qualcomm license agreement is a high-stakes gamble with uncertain consequences. While the company may be seeking to gain negotiating leverage, the move has backfired, and the potential risks to the global chip supply chain and Arm's relationships with its customers are significant. As the lawsuit continues to unfold, it is essential for both parties to consider the long-term consequences of their actions and work towards a resolution that benefits all parties involved. The tech industry is built on collaboration and trust, and Arm's actions may have far-reaching and devastating consequences if not resolved promptly and amicably.

The article discusses the development of AI agents, which are computer programs designed to perform tasks autonomously. These agents are becoming increasingly sophisticated, allowing them to interact with humans and perform tasks that were previously the exclusive domain of humans.

Google's Project Jarvis is a specific example of an AI agent designed to automate everyday web-based tasks. Jarvis is a Chrome-based browser extension that uses AI to take screenshots, interpret information, and perform actions. Users can command Jarvis to perform a range of tasks, from booking flights to compiling data.
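The screenshot, interpret, act cycle described above is essentially a loop. The sketch below shows that loop under stated assumptions; it is not Google's implementation, and `take_screenshot`, `interpret`, and `perform` are hypothetical callables standing in for the browser capture, the AI model, and the browser automation. The `max_steps` cap is an added safeguard, not something the article describes.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Action:
    kind: str         # "click", "type", or "done" (hypothetical action set)
    target: str = ""  # element to click or text to type


def run_agent(
    goal: str,
    take_screenshot: Callable[[], Any],
    interpret: Callable[[str, Any], Action],
    perform: Callable[[Action], None],
    max_steps: int = 10,
) -> list[Action]:
    """Observe -> interpret -> act until the model says it is done.
    max_steps caps the loop so a confused model cannot run forever."""
    history: list[Action] = []
    for _ in range(max_steps):
        screen = take_screenshot()
        action = interpret(goal, screen)
        if action.kind == "done":
            break
        perform(action)
        history.append(action)
    return history
```

Because every step starts from a fresh screenshot, the agent only ever reacts to what is currently on screen; this is also why the privacy concerns discussed below center on those captured images.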

The article notes that Jarvis is optimized for Chrome, which means that it will only work on Chrome-based browsers. However, the potential benefits of Jarvis are significant, as it could make AI tools more accessible to a broader audience, including those without prior experience with AI development.

Anthropic's Claude LLM is another example of an AI agent designed to automate tasks. Claude is a large language model that can take limited control of a PC, allowing users to grant it access and control over various tasks. Claude's capabilities include tasks such as filling out forms, planning outings, and building websites.

The article notes that Claude is still considered "cumbersome and error-prone," but its potential to democratize AI access cannot be overstated. Claude's ability to learn and adapt to new tasks makes it a promising example of the potential of AI agents to become more useful and accessible to humans.

However, the development of AI agents like Jarvis and Claude LLM also raises significant concerns about the risks of AI-driven control. The most pressing issue is privacy, as AI agents may be able to access sensitive information and take screenshots of user activity.

The article notes that Microsoft's Recall is an example of an AI system that takes screenshots of everything being done on a PC, which raises uncomfortable questions about the boundaries of digital surveillance. This concern is mirrored in the backlash against Google's Project Jarvis, which some see as an infringement on user privacy.

Another concern is the risk of AI systems making mistakes or acting in ways that harm users. AI systems are prone to errors, which can have serious consequences, particularly in high-stakes applications like finance or healthcare.

Given the risks associated with AI-driven control, there is a growing need for regulatory frameworks to ensure accountability and protect users. This includes developing guidelines for the development and deployment of AI systems, as well as implementing safeguards to prevent errors and ensure user safety.

The development of AI agents like Jarvis and Claude LLM is also having a significant impact on corporate culture. Google's decision to drop its famous "Don't be evil" motto from its corporate code of conduct is a telling sign of the times.

As AI agents become increasingly sophisticated, the boundaries between human and machine are blurring. The question of what it means to be "evil" in the digital age is no longer a straightforward one. Companies like Google and Anthropic are pushing the boundaries of what is possible, but they must also consider the implications of their actions for human society.

Ultimately, the rise of AI agents like Jarvis and Claude LLM presents a complex challenge for humanity. While the potential benefits of increased accessibility and convenience are undeniable, the risks of losing control to machines must be carefully considered.

As we navigate this uncharted territory, one thing is clear: the future of human agency is no longer a given. The consequences of our actions will be felt for generations to come, and it is up to us to ensure that the development of AI agents serves the interests of humanity as a whole.

Conservationists' newest weapon is a simple $7 Bluetooth beacon in a 3D-printed case. Thanks to the relatively uncomplicated hardware, it weighs much less than GPS trackers.

The use of tiny Bluetooth beacons in wildlife tracking is a relatively new and innovative approach that has gained significant attention in recent years. Here's a more detailed overview of the technology and its potential applications:

The Bluetooth beacons are small Bluetooth Low Energy (BLE) devices that can be attached to animals or objects in the wild. Each one broadcasts a unique identifier that nearby iOS devices can detect. When an iPhone detects the beacon, it anonymously reports its own location to researchers as an approximate position for the tag, creating a crowdsourced network of location data.
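Because each report carries the reporting phone's position rather than the tag's, many reports have to be combined into one estimate. The sketch below shows one simple way to do that, assuming each report includes the phone's coordinates and a received signal strength (RSSI). The Report type, its fields, and the RSSI weighting are illustrative assumptions, not the method used in the study.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Report:
    """One crowdsourced sighting (hypothetical structure for illustration)."""
    lat: float       # latitude of the reporting phone, in degrees
    lon: float       # longitude of the reporting phone, in degrees
    rssi_dbm: float  # assumed field: signal strength the phone measured


def estimate_position(reports: List[Report]) -> Tuple[float, float]:
    """Estimate the tag's position as an RSSI-weighted centroid of phone positions.

    Stronger signals suggest the phone was closer to the tag, so they get more
    weight. Plain averaging of coordinates is only reasonable over small areas
    (no wrap-around at the antimeridian is handled).
    """
    if not reports:
        raise ValueError("need at least one report")
    # dBm is logarithmic; convert to linear milliwatts so -60 dBm outweighs -90 dBm.
    weights = [10 ** (r.rssi_dbm / 10) for r in reports]
    total = sum(weights)
    lat = sum(w * r.lat for w, r in zip(weights, reports)) / total
    lon = sum(w * r.lon for w, r in zip(weights, reports)) / total
    return lat, lon


# Two equally strong sightings: the estimate falls halfway between them.
print(estimate_position([Report(51.0, 4.0, -70), Report(51.0, 4.002, -70)]))
```

Averaging several independent reports also damps some of the random error in any single one, which is why coverage density matters so much for this approach.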

The use of Bluetooth beacons in wildlife tracking offers several advantages over traditional GPS tracking methods. Some of the key benefits include:

Low cost: The beacons are relatively inexpensive, with a price tag of around $7 per device.

Power efficiency: The beacons draw minimal power, so a small battery lasts a long time, making them suitable for deployment in remote areas where batteries cannot easily be replaced.

Easy deployment: The devices can be easily attached to animals or objects, and they do not require any specialized equipment or expertise.

Hands-free tracking: The beacons require no hands-on tracking or recoveries, reducing the workload and costs associated with traditional tracking methods.

While the Bluetooth beacons offer several advantages, they are not without limitations. Some of the key challenges include:

Positional error: The beacons can experience high positional errors, particularly in areas with heavy traffic or signal blocking.

Deterioration in sparsely populated areas: The trackers can become less effective in areas with limited mobile device coverage, making it essential to deploy multiple beacons in these areas.

Interference: The beacons can be affected by interference from other Bluetooth devices, which can reduce their accuracy.

Researchers are exploring ways to overcome these limitations, including:

Building networks of receivers: Using Arduino, Raspberry Pi, or ESP32 boards to build networks of receivers that can improve the accuracy of the beacons in sparsely populated areas.

Increasing the number of beacons: Deploying more beacons in the wild can improve the accuracy of the trackers, as more mobile devices will report locations to the central base.

Improving signal strength: Researchers are working on improving the signal strength of the beacons, which can reduce positional errors and improve overall accuracy.
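For the receiver-network idea, a fixed scanner (for example an ESP32 or Raspberry Pi) knows its own position, so the signal strength it measures can be turned into a rough distance. The sketch below uses the standard log-distance path-loss model and then combines several receivers with an inverse-square weighting. The 1 m reference power of -59 dBm and the path-loss exponent are placeholder values that would need calibration in the field, and the receiver names and coordinates are hypothetical.

```python
from typing import Dict, Tuple


def rssi_to_distance(rssi_dbm: float, power_at_1m_dbm: float = -59.0,
                     path_loss_exponent: float = 2.0) -> float:
    """Log-distance path-loss model: d = 10 ** ((P_1m - RSSI) / (10 * n)), in metres.

    n is about 2 in free space and larger in cluttered environments.
    """
    return 10 ** ((power_at_1m_dbm - rssi_dbm) / (10 * path_loss_exponent))


def locate(receivers: Dict[str, Tuple[float, float]],
           readings: Dict[str, float]) -> Tuple[float, float]:
    """Weight each receiver's known (x, y) position by 1 / distance**2."""
    weights = {}
    for name, rssi in readings.items():
        distance = max(rssi_to_distance(rssi), 0.1)  # clamp to avoid huge weights
        weights[name] = 1.0 / distance ** 2
    total = sum(weights.values())
    x = sum(w * receivers[name][0] for name, w in weights.items()) / total
    y = sum(w * receivers[name][1] for name, w in weights.items()) / total
    return x, y


# Hypothetical layout: two scanners 10 m apart on a local x/y grid (metres).
receivers = {"esp32-a": (0.0, 0.0), "esp32-b": (10.0, 0.0)}
print(locate(receivers, {"esp32-a": -59.0, "esp32-b": -79.0}))
```

Inverse-square weighting is a deliberately simple choice; with three or more well-placed receivers, a least-squares trilateration would usually do better.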

The use of Bluetooth beacons in wildlife tracking has a wide range of potential applications in various fields, including:

Conservation: The beacons can be used to track the movements of endangered species, which can help conservationists develop more effective conservation strategies.

Research: The beacons can be used to track the movements of animals in various research settings, which can help scientists understand animal behavior and ecology.

Monitoring: The beacons can be used to monitor the movements of animals in various environments, which can help researchers understand the impact of human activity on wildlife habitats.

The popularity of an Instagram video can affect its actual video quality: According to Adam Mosseri (the Meta executive who leads Instagram and Threads), videos that are more popular get shown in higher quality, while less popular videos get shown in lower quality.

In a video (via The Verge), Mosseri said Instagram tries to show “the highest-quality video that we can,” but he said, “if something isn’t watched for a long time — because the vast majority of views are in the beginning — we will move to a lower quality video.”

This isn’t totally new information; Meta wrote last year about using different encoding configurations for different videos depending on their popularity. But after someone shared Mosseri’s video on Threads, many users had questions and criticisms, with one going as far as to describe the company’s approach as “truly insane.”

The discussion prompted Mosseri to offer more detail. For one thing, he clarified that these decisions are happening on an “aggregate level, not an individual level,” so it’s not a situation where individual viewer engagement will affect the quality of the video that’s played for them.

“We bias to higher quality (more CPU intensive encoding and more expensive storage for bigger files) for creators who drive more views,” Mosseri added. “It’s not a binary [threshold], but rather a sliding scale.”
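Mosseri's description (an aggregate signal, applied on a sliding scale rather than a single cutoff, with quality dropping once a video stops being watched) can be illustrated with a toy policy. Everything below is an assumption for illustration: the log scaling, the one-million-view saturation point, and the encoding labels are invented, and this is not Meta's actual system.

```python
import math

# Hypothetical encoding ladder: (minimum effort score, label), cheapest first.
LADDER = [
    (0.0, "low-cost encode"),
    (0.4, "standard encode"),
    (0.8, "high-effort encode"),  # more CPU time and bigger stored files
]


def encoding_effort(recent_views: int, saturation_views: int = 1_000_000) -> float:
    """Map a video's aggregate recent views to an effort score in [0, 1].

    Using recent views means a video that stops being watched drifts back
    toward cheaper encodes. Log scaling makes this a sliding scale, not a cutoff.
    """
    if recent_views <= 0:
        return 0.0
    score = math.log10(1 + recent_views) / math.log10(1 + saturation_views)
    return min(score, 1.0)


def pick_encoding(recent_views: int) -> str:
    """Choose the most expensive rung whose threshold the effort score reaches."""
    effort = encoding_effort(recent_views)
    label = LADDER[0][1]
    for threshold, name in LADDER:
        if effort >= threshold:
            label = name
    return label


for views in (0, 1_000, 1_000_000):
    print(views, pick_encoding(views))
```

Note that the input is a per-video total, not anything about an individual viewer, which matches Mosseri's point that the decision happens at the aggregate level.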

Forward-looking: Samsung is working to accelerate the development of a promising new memory technology called Selector-Only Memory (SOM). It combines the non-volatility of flash storage with DRAM's lightning-fast read/write speeds, making it a potential game-changer. Furthermore, manufacturers can stack the chips for higher densities.

The core concept behind SOM is using unique chalcogenide materials that perform double duty as both the memory cell and the selector device. In traditional phase-change or resistive RAM, you need a separate component, like a transistor, to act as the selector that activates each cell. In SOM, by contrast, the chalcogenide material itself switches between conductive and resistive states to store data, so no separate selector is needed.

Of course, not just any chalcogenide composition will do the trick. The materials must have optimal properties for memory performance and selector functionality. To find the right candidate, Samsung used advanced computer modeling to predict the potential of various material combinations. The company estimates that over 4,000 potential chalcogenide mixtures could work for SOM. Unfortunately, sorting through all those possibilities with physical experiments would be a nightmare in terms of cost and time.
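The screening step can be pictured as a filter-and-rank pass over simulated candidates, so that only the most promising mixtures go to the lab. The sketch below is purely illustrative: the property names, thresholds, candidate labels, and numbers are hypothetical toy inputs, and it does not reflect Samsung's actual models or criteria.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class Candidate:
    """A chalcogenide mixture with simulated properties (all fields hypothetical)."""
    label: str
    read_margin_v: float     # predicted separation between the two stored states, volts
    endurance_log10: float   # predicted log10 of switching cycles before failure
    thermally_stable: bool   # predicted to keep its state at operating temperature


def shortlist(candidates: Iterable[Candidate], min_margin_v: float = 1.0,
              min_endurance_log10: float = 6.0, top_k: int = 3) -> List[Candidate]:
    """Drop candidates that fail any requirement, then rank the rest."""
    viable = [
        c for c in candidates
        if c.thermally_stable
        and c.read_margin_v >= min_margin_v
        and c.endurance_log10 >= min_endurance_log10
    ]
    # Larger read margin first, endurance as the tie-breaker.
    viable.sort(key=lambda c: (c.read_margin_v, c.endurance_log10), reverse=True)
    return viable[:top_k]


# Toy inputs, not real measurements or simulation results.
simulated = [
    Candidate("mix-0001", 1.4, 7.0, True),
    Candidate("mix-0002", 0.6, 9.0, True),   # margin too small
    Candidate("mix-0003", 1.8, 5.0, True),   # endurance too low
    Candidate("mix-0004", 1.2, 8.0, False),  # not thermally stable
    Candidate("mix-0005", 1.1, 6.5, True),
]
print([c.label for c in shortlist(simulated)])
```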

The US Copyright Office has dealt a significant blow to video game preservation efforts by denying a request for a Digital Millennium Copyright Act (DMCA) exemption.

In context: Video game preservation efforts have experienced another setback in their ongoing dialogue with copyright stakeholders. As they work to preserve digital culture, preservationists must find a way to balance commercial interests with historical and scholarly needs.

People are already doing that. Every day they upload videos to YouTube and post on Facebook and X, so the idea of people no longer providing data is not on the agenda.

Of course, people are going to interact with synthetic data more. So it is going to make it even more powerful.

OpenAI's transcription tool called Whisper has come under fire for a significant flaw: its tendency to generate fabricated text, known as hallucinations.

Facepalm: It is no secret that generative AI is prone to hallucinations, but as these tools make their way into critical settings like healthcare, alarm bells are ringing. Even OpenAI warns against using its transcription tool in high-risk settings. Despite these warnings, the medical sector has moved forward with adopting Whisper-based tools.

Whisper is an AI model from OpenAI that automatically converts speech to text.

Transforming audio into text is now simpler and more accurate, thanks to OpenAI’s Whisper.

This article will guide you through using Whisper to convert spoken words into written form, providing a straightforward approach for anyone looking to leverage AI for efficient transcription.

Introduction to OpenAI Whisper

OpenAI Whisper is an automatic speech recognition (ASR) system: an AI model designed to convert spoken language into written text.

Its capabilities have opened up a wide array of use cases across various industries. Whether you’re a developer, a content creator, or just someone fascinated by AI, Whisper has something for you.

Transcription services: Whisper can transcribe audio and video content in real-time or from recordings, making it useful for generating accurate meeting notes, interviews, lectures, and any spoken content that needs to be documented in text form.

Subtitling and closed captioning: It can automatically generate subtitles and closed captions for videos, improving accessibility for the deaf and hard-of-hearing community, as well as for viewers who prefer to watch videos with text.

Language learning and translation: Whisper's ability to transcribe in multiple languages supports language learning applications, where it can help in pronunciation practice and listening comprehension. Combined with translation models, it can also facilitate real-time cross-lingual communication.

Accessibility tools: Beyond subtitling, Whisper can be integrated into assistive technologies to help individuals with speech impairments or those who rely on text-based communication. It can convert spoken commands or queries into text for further processing, enhancing the usability of devices and software for everyone.

Content searchability: By transcribing audio and video content into text, Whisper makes it possible to search through vast amounts of multimedia data. This capability is crucial for media companies, educational institutions, and legal professionals who need to find specific information efficiently.

Voice-controlled applications: Whisper can serve as the backbone for developing voice-controlled applications and devices. It enables users to interact with technology through natural speech. This includes everything from smart home devices to complex industrial machinery.

Customer support automation: In customer service, Whisper can transcribe calls in real time. It allows for immediate analysis and response from automated systems. This can improve response times, accuracy in handling queries, and overall customer satisfaction.

Podcasting and journalism: For podcasters and journalists, Whisper offers a fast way to transcribe interviews and audio content for articles, blogs, and social media posts, streamlining content creation and making it accessible to a wider audience.

OpenAI's Whisper represents a significant advancement in speech recognition technology.

With its use cases spanning across enhancing accessibility, streamlining workflows, and fostering innovative applications in technology, it's a powerful tool for building modern applications.

How to Work with Whisper

Now let’s look at a simple code example that converts an audio file into text using OpenAI’s Whisper. I would recommend using a Google Colab notebook.
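Here is a minimal sketch using the open-source openai-whisper package (installed with pip install -U openai-whisper; it also needs ffmpeg on the system). The "base" model size and the command-line handling are choices made for this example, not requirements; larger models such as "small" or "medium" are usually more accurate but slower.

```python
import sys
from typing import List, Optional


def transcribe(audio_path: str, model_name: str = "base",
               language: Optional[str] = None) -> dict:
    """Transcribe one audio file with openai-whisper and return its result dict."""
    import whisper  # imported here so the rest of the script loads without it

    model = whisper.load_model(model_name)  # downloads weights on first use
    # language=None lets Whisper detect the language; pass e.g. "es" to force Spanish.
    return model.transcribe(audio_path, language=language)


def main(argv: List[str]) -> None:
    if len(argv) < 2:
        print("usage: python transcribe.py AUDIO_FILE [LANGUAGE]")
        return
    language = argv[2] if len(argv) > 2 else None
    result = transcribe(argv[1], language=language)
    print(result["text"])  # full transcript; result["segments"] holds timestamps


if __name__ == "__main__":
    main(sys.argv)
```

Saved as transcribe.py, running python transcribe.py meeting.mp3 (a placeholder file name) prints the transcript; in a Colab notebook you can call transcribe("meeting.mp3")["text"] directly.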

This script showcases a straightforward way to use OpenAI Whisper for transcribing audio files. By running this script with Python, you’ll see the transcription of your specified audio file printed to the console.

Feel free to experiment with different audio files and explore the additional options the Whisper library provides to customize the transcription process to your needs.

Tips for Better Transcriptions

Whisper is powerful, but there are ways to get even better results from it. Here are some tips:

Clear audio: The clearer your audio file, the better the transcription. Try to use files with minimal background noise.

Language selection: Whisper supports multiple languages. If your audio isn’t in English, make sure to specify the language for better accuracy.

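Customize output: Whisper offers options to customize the output. Its results include segment-level timestamps, you can request word-level timestamps with the word_timestamps option, and each segment carries an average log-probability that serves as a rough confidence score. Explore the documentation to see what’s possible.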
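As an example of using the timestamps: the dictionary returned by transcribe contains a "segments" list whose entries carry start and end times in seconds plus the text. The helper below, written for this article rather than taken from the library, turns those segments into an SRT subtitle file (openai-whisper also ships its own subtitle writers; this just shows the format).

```python
from typing import Dict, Iterable


def srt_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp, HH:MM:SS,mmm."""
    total_ms = int(round(seconds * 1000))
    hours, rest = divmod(total_ms, 3_600_000)
    minutes, rest = divmod(rest, 60_000)
    secs, millis = divmod(rest, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{millis:03d}"


def segments_to_srt(segments: Iterable[Dict]) -> str:
    """Build SRT text from Whisper-style segments with 'start', 'end' and 'text' keys."""
    blocks = []
    for index, seg in enumerate(segments, start=1):
        start, end = srt_timestamp(seg["start"]), srt_timestamp(seg["end"])
        blocks.append(f"{index}\n{start} --> {end}\n{seg['text'].strip()}\n")
    return "\n".join(blocks)


# With a real result:
# with open("out.srt", "w", encoding="utf-8") as f:
#     f.write(segments_to_srt(result["segments"]))
example = [{"start": 0.0, "end": 2.5, "text": " Hello and welcome."},
           {"start": 2.5, "end": 61.04, "text": " Today we talk about Whisper."}]
print(segments_to_srt(example))
```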

Advanced Features

Whisper isn’t just for simple transcriptions. It has features that cater to more advanced needs:

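Real-time transcription: Whisper processes audio in 30-second windows rather than as a live stream, but you can approximate real-time transcription by feeding it short chunks of audio as they arrive. This is useful for live events or streaming.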

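Multi-language support: Whisper is multilingual and can cope with more than one language in the same audio file, which helps with multilingual meetings or interviews. Note that the reference transcribe function detects the language once, from the first 30 seconds, unless you specify it, so results on audio that switches languages can vary.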

Fine-tuning: If you have specific needs, you can fine-tune Whisper’s models to suit your audio better. This requires more technical skill but can significantly improve results.
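A common way to approximate live transcription is to cut the incoming audio into short, slightly overlapping chunks and transcribe each one as it arrives. The chunker below is a sketch under assumptions: 16 kHz mono samples (the rate Whisper models expect), with chunk and overlap lengths chosen arbitrarily for illustration. Overlap reduces the chance of cutting a word in half, at the cost of some duplicated text that has to be merged afterwards.

```python
from typing import Iterator, Sequence

SAMPLE_RATE = 16_000  # Whisper models expect 16 kHz mono audio


def chunk_audio(samples: Sequence[float], chunk_seconds: float = 5.0,
                overlap_seconds: float = 1.0,
                sample_rate: int = SAMPLE_RATE) -> Iterator[Sequence[float]]:
    """Yield overlapping windows of audio samples that together cover the input."""
    chunk_len = int(chunk_seconds * sample_rate)
    step = chunk_len - int(overlap_seconds * sample_rate)
    if chunk_len <= 0 or step <= 0:
        raise ValueError("chunk must be positive and longer than the overlap")
    for start in range(0, len(samples), step):
        yield samples[start:start + chunk_len]
        if start + chunk_len >= len(samples):
            break  # this window already reaches the end of the audio


# Each chunk could then go to Whisper, e.g.
# model.transcribe(numpy.asarray(chunk, dtype=numpy.float32))["text"]
```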

Conclusion

Working with OpenAI Whisper opens up a world of possibilities. It’s not just about transcribing audio – it’s about making information more accessible and processes more efficient.

Whether you’re transcribing interviews for a research project, making your podcast more accessible with transcripts, or exploring new ways to interact with technology, Whisper has you covered.

OpenAI's transcription tool called Whisper has come under fire for a significant flaw: its tendency to generate fabricated text, known as hallucinations. Despite the company's claims of "human level robustness and accuracy," experts interviewed by the Associated Press have identified numerous instances where Whisper invents entire sentences or adds non-existent content to transcriptions.

The issue is particularly concerning given Whisper's widespread use across various industries. The tool is employed for translating and transcribing interviews, generating text for consumer technologies, and creating video subtitles.

Perhaps most alarming is the rush by medical centers to implement Whisper-based tools for transcribing patient consultations, even though OpenAI has given explicit warnings against using the tool in "high-risk domains."

Nevertheless, the medical sector has embraced Whisper-based tools. Nabla, a company with offices in France and the US, has developed a Whisper-based tool used by over 30,000 clinicians and 40 health systems, including the Mankato Clinic in Minnesota and Children's Hospital Los Angeles.
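Whisper's segment metadata will not reveal every fabricated sentence, since a fluent invention can look confident, but it can help route doubtful passages to a human reviewer. The sketch below flags segments using fields that openai-whisper includes in each segment (compression_ratio, avg_logprob, no_speech_prob). The thresholds mirror the defaults its transcribe function uses in its own fallback and silence-handling logic; treating them as review triggers is an assumption of this example, not an OpenAI recommendation.

```python
from typing import Dict, Iterable, List


def flag_for_review(segments: Iterable[Dict],
                    max_compression_ratio: float = 2.4,
                    min_avg_logprob: float = -1.0,
                    max_no_speech_prob: float = 0.6) -> List[Dict]:
    """Return segments worth a human check, each with the reasons it was flagged."""
    flagged = []
    for seg in segments:
        reasons = []
        if seg["compression_ratio"] > max_compression_ratio:
            reasons.append("highly repetitive text")
        if seg["avg_logprob"] < min_avg_logprob:
            reasons.append("low model confidence")
        if seg["no_speech_prob"] > max_no_speech_prob:
            reasons.append("audio may be silence")
        if reasons:
            flagged.append({"start": seg["start"], "end": seg["end"],
                            "text": seg["text"].strip(), "reasons": reasons})
    return flagged
```

With a real transcription, for item in flag_for_review(result["segments"]): print(item) lists the passages to double-check against the audio.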

Introducing Whisper

We’ve trained and are open-sourcing a neural net called Whisper that approaches human level robustness and accuracy on English speech recognition.