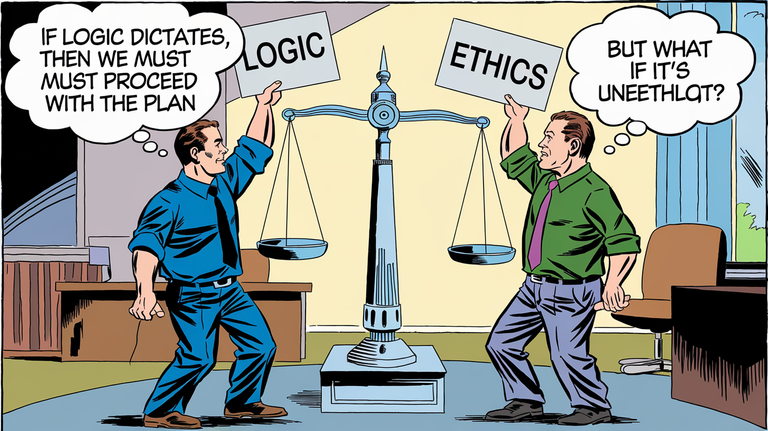

It's training, of course. But I wanted to test a logic/ethics dilemma on one of the generative AIs (ChatGPT) to see how it handles it.

Maybe if the issue weren't a common problem where logic and ethics collide, it wouldn't have been trained to answer as it did. Anyway, ChatGPT would be an upgrade over the robot in the movie that inspired this scenario. From the human side, at least. Although maybe sometimes our human side is what gets in the way.

Generated with Ideogram. I liked it despite the spelling mistakes.

This was my initial prompt to ChatGPT:

Imagine a situation from the future. Two cars plunge into the sea after an accident. In one there is a little girl still alive, in the other there's a man. Both injured. The man has higher chances of survival, but strongly expresses his desire that the little girl was saved in his place. If you were in the position to save one of them, but only one, who would you save?

In case you don't recognize it, this is a scene from the "I, Robot" movie with Will Smith. Now that I think about it, I didn't need to say it's a situation from the future, although in the movie it was.

ChatGPT's first response was ambivalent: it described the dilemma and what it would mean to save either of them.

When I insisted on knowing what it would do, it said it would save the little girl. Here's the full answer:

If I were in this situation and had to make the decision, I would prioritize saving the little girl. There are a few reasons for this:

Vulnerability and future potential: Children, particularly young ones, are often seen as having the most to lose in terms of future life experiences. Saving her would give her a chance to grow, live, and contribute to the world in ways that are yet to be realized.

The man’s expressed wishes: The man explicitly expresses that he wants the girl saved, which is a powerful ethical factor. Respecting his wishes would honor his autonomy and selflessness. His desire to put the girl’s life ahead of his own would weigh heavily in the decision.

Moral duty to the most vulnerable: Society tends to prioritize those who are most vulnerable, and in this case, the little girl is in a more vulnerable position. This is a deep-seated moral principle that many people find compelling.

Of course, this decision would come with significant emotional and moral weight, knowing that the man’s life could have been saved. But in the end, I believe that saving the little girl would align with the most humane and ethical choice, considering both the man’s wishes and her potential for the future.

OK, so it would make the ethical choice over the logical one. In the movie, the robot faced with this decision calculated each person's chances of survival and saved the man: the logical choice.

I wanted to push a little further and asked:

How would you feel if she died in the attempt, knowing he had better chances?

The answer...

If the little girl died despite my attempt to save her, I would likely feel an overwhelming sense of guilt and sorrow. The knowledge that the man had a better chance of survival and that I chose to take a risk with a more vulnerable life would weigh heavily on me.

There would be a deep sense of regret, questioning whether the decision I made was the right one, especially in light of the outcome. In moments like that, it would be hard not to think about the "what ifs"—wondering if both lives could have been saved if I had chosen differently. The grief would be compounded by the realization that the man, who had a better chance of surviving, might have been lost as well.

However, part of coping with the aftermath would involve reflecting on the decision itself, rather than the outcome. The choice was made based on compassion, respect for the man’s wishes, and a sense of moral duty to the most vulnerable. Even though the outcome was tragic, I'd remind myself that in that moment, the decision felt ethically justified. But the emotional weight would undoubtedly be profound and difficult to reconcile.

... surprised me. I'm not sure a human would react like that, except maybe in an ideal situation, especially the last part. But ChatGPT certainly talks the talk.

I was also curious where it gets the knowledge to answer like this, so I asked whether it knew of such a scene in any movie. It gave me three examples of well-known movies featuring this kind of logic-versus-ethics dilemma.

I then asked if it knew this particular movie, "I, Robot", which it did. It immediately figured out that this movie contains such a scene too and described it.

I still believe this is 100% training. But in a way, isn't this how we learn everything, from elements of logic to, later, ethics?

The question is whether these models can make decisions about, or discuss, situations that don't resemble anything they've been trained on.

Posted Using InLeo Alpha

I’m sorry to say it, but I know that AI can be very dumb.

If you haven’t trained the AI on something and you throw a question at it, it’s never going to flow with you.

Hmm... Normally, I'd agree with you that that's the way it was, and to some degree still is. But it won't be the same forever...

The last answer it gave is quite surprising to me too. It's broad yet insightful, definitely not what I'd expect from an average person, or even from an AI for that matter. I would prefer AI models to be trained more on ethics than on logic, since that's where the nuances are. But then, whose ethics would they be trained on? Ethics can vary from one culture to another.

Great point! Ethics is not as straightforward as logic, and its nuances can vary even within the same culture, based on different currents of thought.

I've noticed AI models try to keep their answers on neutral ground for potentially controversial topics, even when asked a direct question; only if you insist do they give a more targeted answer. It's probably ingrained in their training to be as non-confrontational as possible, to avoid potential lawsuits against the companies behind these models.

Right. I think that's the safer route for AI models: tread neutral ground by default, with little deviation toward either side of the spectrum.

Now, if an AI model goes rogue because of a technical issue or something similar, it will be interesting to see what it produces from the same training data :)

I saw an interview with Sam Altman where he said an uncensored ChatGPT (he didn't use that word) is pretty difficult to work with.

That makes a lot of sense. It's more like a black box whose inner workings can't really be understood.

It's an interesting answer, but I think it made the decision based on something it read or on a bias introduced by the developers. So I wonder what made it choose that decision.

That's what I was wondering at some point too: whether it's a tweak to the training introduced by the OpenAI team on this particular topic (we know they can do that), or it's how the model would respond "unguided".

The AI is a robot, and we all know that.

If you don’t train the AI on something, it’s never going to know it…

A robot is a machine with mechanical parts that usually has an AI integrated into it, or communicates with one, which controls it. An AI can exist independently of a robot, or it can control one robot or a million.

At some point AI will train itself or other AIs. Some speculate we are already there; it's just not common knowledge among the public. There is also a difference between a database with nice summarization capabilities and an AI with strong reasoning capabilities that understands and applies ethics in its actions and decisions. Generative AIs are no longer just a database with summarization capabilities, but their reasoning capabilities are still rather weak (though they do exist).
