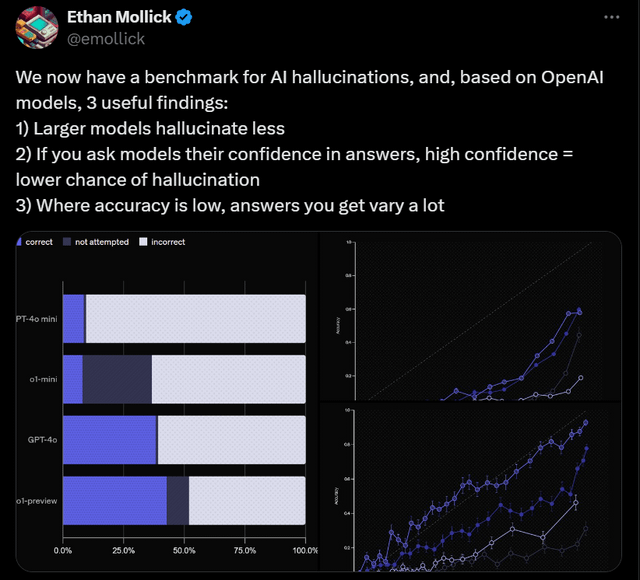

AI researchers have identified key patterns in hallucinations: larger language models tend to be more reliable, asking a model to report its confidence can help predict how likely it is to fabricate information, and as accuracy drops, its responses become increasingly unpredictable. #AIResearch #MachineLearning