AI

Multisensory artificial intelligence is inspired by butterflies

When flirting, passion flower butterflies, or long-winged butterflies (Heliconius), are not content with just taking a look at their potential partner - a sniff is also essential.

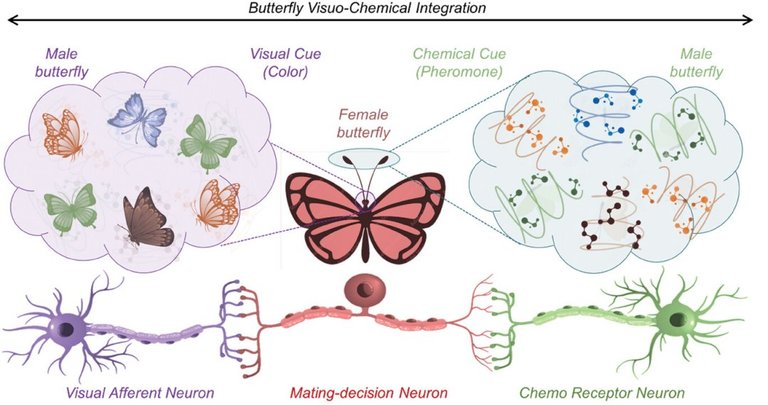

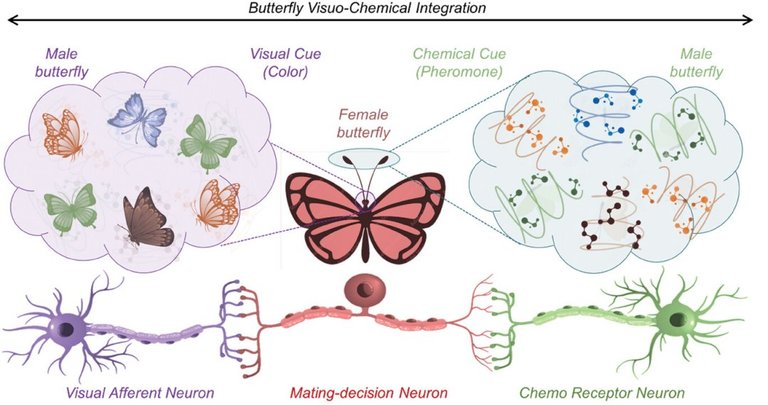

The catch is that looking and smelling happen simultaneously. These black and orange butterflies have incredibly small brains, yet they need to process both sensory inputs at the same time: the visual signal is needed to check the suitor's wing pattern and confirm that it really is that of a Heliconius butterfly, while the chemical signal from the pheromones released by the other butterfly is essential to tell whether it is also in the mood.

Together, this simultaneous processing and the tiny amount of resources it requires (few neurons) caught the attention of artificial intelligence researchers: no AI technology today comes even close to the efficiency with which these butterflies process several signals at once.

"If you think about the AI we have today, we have very good image processors based on vision, or excellent language processors that use audio," explained Professor Saptarshi Das of Pennsylvania State University. "But when you think about most animals and also humans, decision-making is based on more than one sense. While AI performs very well with a single sensory input, multi-sensory decision-making is not happening with current AI."

To electronically mimic the neural processing of butterflies, the researchers turned to 2D materials, which are one to a few atoms thick: in this case molybdenite, or molybdenum disulfide (MoS2), and graphene.

The MoS2 portion serves as the photodetector, standing in for the butterfly's vision. The graphene portion, in turn, is a chemotransistor, a component that can detect chemical molecules, which makes it well suited to imitating pheromone detection in butterfly brains.

The researchers tested their biomimetic device by exposing the dual sensor to different colored lights, mimicking the visual cues, and applying solutions with varying chemical compositions that resemble the pheromones released by butterflies.

The results confirmed that the sensor can efficiently integrate information from the photodetector and chemosensor.

Returning to the main objective of the research, the team demonstrated that dual sensing in a single device is more energy efficient than the way current AI systems work: these typically collect data from separate sensor modules and then transfer it to a processing module, which wastes time and consumes much more energy.

Next, the team plans to expand the integration to three senses, mimicking how a crayfish uses visual, tactile and chemical cues to sense prey and predators. The goal is to develop AI hardware capable of handling complex decision-making scenarios in variable environments.