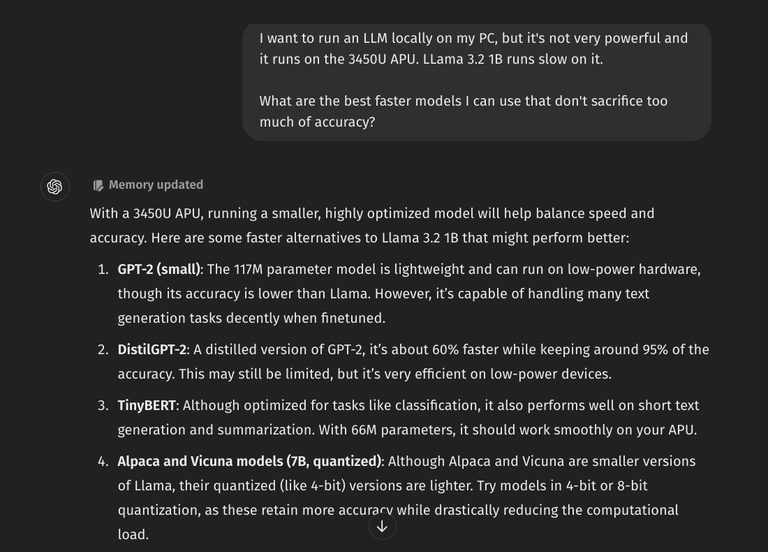

Prompt: I want to run an LLM locally on my PC, but it's not very powerful; it runs on a 3450U APU. Llama 3.2 1B runs slowly on it.

What are the best faster models I can use that don't sacrifice too much accuracy?

Summarized Response: For a PC with a 3450U APU, try smaller, faster models like GPT-2 small or DistilGPT-2, which trade some accuracy for efficiency. TinyBERT is compact too, but it is an encoder model suited to classification rather than text generation. Quantized Alpaca and Vicuna (7B) models use less memory than their full-precision versions, but at 7B parameters they are still far larger than Llama 3.2 1B and are unlikely to run faster. GPT-Neo 125M also offers decent speed and output.

Using quantization libraries like bitsandbytes or GGML can further improve speed and efficiency by reducing model size without major accuracy loss.
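As a rough illustration of what quantization does, here is a toy sketch of symmetric 8-bit quantization in plain Python. The weights are made-up values and the helper names are hypothetical; real libraries use more elaborate schemes (per-block scales, 4-bit formats), so treat this only as the basic idea.

```python
# Toy symmetric 8-bit quantization of a few weights (illustrative values only).

def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale

def dequantize_int8(quantized, scale):
    """Recover approximate floats from the stored integers."""
    return [q * scale for q in quantized]

weights = [0.42, -1.27, 0.03, 0.88, -0.51]  # made-up example weights
quantized, scale = quantize_int8(weights)
restored = dequantize_int8(quantized, scale)

# Each weight is now stored as a small integer plus one shared float scale.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(quantized)
print(f"largest round-trip error: {max_error:.4f}")
```

The round-trip error stays within half a quantization step, which is why the size reduction usually costs only a little accuracy.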

Context:

- Optimize models for low-power devices.

- Quantization boosts speed, reduces size.

- Use compact models like TinyBERT, DistilGPT-2.

- Balance accuracy, efficiency.

With a 3450U APU, running a smaller, highly optimized model will help balance speed and accuracy. Here are some faster alternatives to Llama 3.2 1B that might perform better:

GPT-2 (small): The 117M-parameter model (listed as 124M in some releases) is lightweight and can run on low-power hardware, though its accuracy is lower than Llama's. However, it’s capable of handling many text generation tasks decently when fine-tuned.

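DistilGPT-2: A distilled version of GPT-2 small with about 82M parameters. It is smaller and faster than GPT-2 small while keeping most of its quality, though the exact speed and accuracy trade-off depends on the task. Output quality may still be limited, but it’s very efficient on low-power devices.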

TinyBERT: An encoder-only model distilled from BERT and optimized for tasks like classification. It is not designed for open-ended text generation, so it is not a drop-in replacement for a chat model. With 66M parameters, it should work smoothly on your APU for those tasks.

Alpaca and Vicuna models (7B, quantized): Alpaca and Vicuna are fine-tuned versions of LLaMA, not smaller ones. Their quantized (4-bit or 8-bit) builds cut memory use drastically while retaining most of the accuracy, but at 7B parameters they are still much larger than Llama 3.2 1B, so expect them to run slower on this APU. Consider them only if quality matters more than speed.

GPT-Neo 125M: This model is smaller and relatively fast, especially if you quantize it. It has decent performance on shorter prompts.
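To compare the models above at a glance, here is a back-of-the-envelope sketch that estimates weight storage as parameters × bits ÷ 8. The parameter counts are the nominal figures quoted in this conversation, and the estimate ignores activations, the KV cache, and runtime overhead, so real memory use will be higher.

```python
def weight_memory_mb(num_params, bits_per_weight):
    """Approximate weight storage in MB (10**6 bytes), ignoring runtime overhead."""
    return num_params * bits_per_weight / 8 / 1e6

# Nominal parameter counts as quoted in this conversation (assumptions, not measurements).
models = {
    "GPT-2 small": 117e6,
    "GPT-Neo 125M": 125e6,
    "Llama 3.2 1B": 1e9,
    "Alpaca/Vicuna 7B": 7e9,
}

for name, params in models.items():
    sizes = ", ".join(
        f"{bits}-bit: {weight_memory_mb(params, bits):,.0f} MB" for bits in (16, 8, 4)
    )
    print(f"{name:18s} {sizes}")
```

By this estimate a 7B model at 4-bit needs about 3.5 GB for weights alone, more than a 1B model at 16-bit (about 2 GB), which is why the sub-200M models are the safer bet when speed is the priority.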

To run these models faster, you could consider using quantization libraries like bitsandbytes or GGML for LLaMA-based models, which reduce the model size and make them feasible on lower-end hardware without sacrificing too much accuracy.

Prompt: What's better GPT-NEO or GPT-2?

Response: - GPT-Neo: Based on GPT-3’s architecture, available from 125M to 2.7B parameters. Neo models capture complex sentence structures better, offering nuanced text generation. However, they are more resource-intensive. The 125M version, especially with quantization, may work acceptably on low-end systems.

Recommendation: For faster performance, go with GPT-2 (117M or 345M). For better quality in nuanced tasks, try GPT-Neo 125M (quantized), though it may need more processing power.

Between GPT-Neo and GPT-2, here are some factors to consider to help decide which is better for your use case:

1. Architecture and Size

2. Performance and Quality

3. Resource Efficiency

4. Fine-Tuning and Customization

Overall Recommendation

If speed and efficiency are your top priorities, GPT-2 (117M or 345M) is likely better suited. However, if you prioritize nuanced text generation and can manage some performance overhead, GPT-Neo 125M (quantized) might give you better quality without straining your APU too much.
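Since the right choice depends on your hardware, it may help to measure throughput yourself. The sketch below times any callable of the form `generate(prompt, max_new_tokens)`; that signature is a hypothetical wrapper you would write around whichever runtime you use, and `fake_generate` is only a stand-in so the example runs on its own.

```python
import time

def tokens_per_second(generate, prompt, max_new_tokens, runs=3):
    """Time a generate(prompt, max_new_tokens) callable; return the best tokens/sec.

    `generate` is a hypothetical wrapper around your model runtime and must
    return the list of newly generated tokens.
    """
    best = 0.0
    for _ in range(runs):
        start = time.perf_counter()
        tokens = generate(prompt, max_new_tokens)
        elapsed = time.perf_counter() - start
        if elapsed > 0:
            best = max(best, len(tokens) / elapsed)
    return best

def fake_generate(prompt, max_new_tokens):
    """Stand-in model: sleeps about 1 ms per token, then returns dummy tokens."""
    time.sleep(0.001 * max_new_tokens)
    return ["tok"] * max_new_tokens

print(f"{tokens_per_second(fake_generate, 'Hello', 20):.0f} tokens/sec")
```

Swap `fake_generate` for a wrapper around GPT-2 and then GPT-Neo 125M, keep the prompt and token count identical, and compare the two numbers on your own machine.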