If you've been seeing my posts over the last week or so, you'll have seen some of the test outputs for my first-ever publicly released AI model: Pixel Art Diffusion (V1.0). I'm thrilled to announce that it is now live! It runs in a modified Disco Diffusion Colab notebook and can be found on GitHub. EDIT: For the moment, grab the notebook from here while I resolve an error with the main GitHub branch :).

About Pixel Art Diffusion (from the notebook)

Pixel Art Diffusion is a custom-trained unconditional diffusion model. It was trained on an original (small) dataset of ~1500 256x256 pixel art landscapes, using Alex Spirin's notebook for fine-tuning OpenAI diffusion models. I will expand this dataset over time.

Pixel Art Diffusion runs within a fork of the Disco Diffusion 5.2 Warp notebook by Alex Spirin.

About Pixel Art Diffusion's Native Models

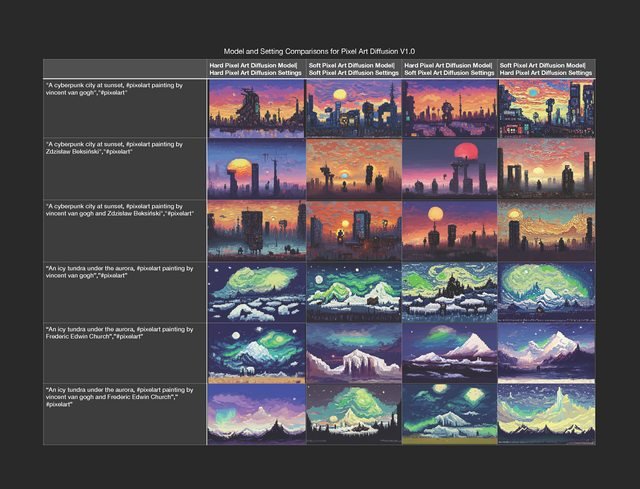

There are actually two separate models within Pixel Art Diffusion, "Hard" and "Soft", which you can select in the diffusion_model drop-down menu. The underlying datasets are identical, but the training steps and the original checkpoints I trained each from are different, and in my testing, I’ve found that they’re good at different things.

Soft is probably my favorite of the two, if I had to pick. I think it’s more diverse and successful in its outputs. It’s better at “soft” pixel art vibes and colors, although it can be made crunchier by tweaking settings some. Hard is crunchier by default and also more saturated.

By default, Pixel Art Diffusion’s settings are tuned for the "Soft" model. The optimal settings (at least, the best I’ve found) for the "Hard" PAD model are similar, but not quite the same. They are as follows:

CLIP settings: Switch out RN50 for RN101.

steps: 75

skip_steps: 7

eta: 0.75
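
If you'd rather keep the "Hard" tweaks in one place than retype them, here is a minimal sketch of them as a Python dict layered over whatever settings you already have. The setting names (RN50, RN101, steps, skip_steps, eta) come from the list above; treating RN50 and RN101 as on/off toggles is my assumption about how the notebook exposes them, and HARD_MODEL_OVERRIDES and apply_overrides are hypothetical names, not part of the notebook.

```python
# Sketch only: HARD_MODEL_OVERRIDES and apply_overrides are hypothetical
# helpers, not part of the Pixel Art Diffusion notebook.
# Assumption: RN50 and RN101 are boolean CLIP-model toggles.
HARD_MODEL_OVERRIDES = {
    "RN50": False,   # switch RN50 off...
    "RN101": True,   # ...and RN101 on
    "steps": 75,
    "skip_steps": 7,
    "eta": 0.75,
}


def apply_overrides(settings: dict, overrides: dict) -> dict:
    """Return a copy of `settings` with `overrides` applied on top."""
    merged = dict(settings)   # copy so the caller's dict is untouched
    merged.update(overrides)  # override values win over existing ones
    return merged
```

Any setting not named in the overrides passes through unchanged, so this only touches the values listed above.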

The notebook's default settings are just loose guidelines that I’ve found work well; they are NOT hard and fast rules. Based on your prompts, prompt structure, and preferences, the settings that work best for you may be quite different. Experiment, don’t get discouraged and, above all, HAVE FUN. If you want to look at more in-depth information regarding any setting or a number of setting combinations, have a look at the EZ Charts Wiki.

Model Output Examples

As you can see below, the "best" model and setting for one prompt is by no means always the best for another prompt (higher quality image here).

Additional Image Processing

At this time, Pixel Art Diffusion does a pretty good job of capturing the pixel art aesthetic, but it is not pixel-perfect. If you want to create an image that is pixel-perfect, you can always give your PAD outputs a pass through pixellation tools such as Pixelator or Pixlr’s “pixellate” filter tool.

Once the image is pixel-perfect, you can upscale it to your desired size using Lospec’s Pixel Art Scaler.
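
To give a rough idea of what those two steps do, here is a minimal pure-Python sketch that works on an image stored as a list of rows of RGB tuples. It is not how Pixelator, Pixlr, or Lospec's scaler are implemented (I don't know their internals); downsample and upscale are hypothetical helper names, and picking the top-left pixel of each cell is just the simplest choice for illustration.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]


def downsample(img: Image, block: int) -> Image:
    """Snap to a grid: keep the top-left pixel of every block x block cell."""
    return [row[::block] for row in img[::block]]


def upscale(img: Image, factor: int) -> Image:
    """Nearest-neighbor upscale: repeat each pixel `factor` times both ways."""
    out: Image = []
    for row in img:
        # Widen the row by repeating every pixel horizontally.
        wide = [px for px in row for _ in range(factor)]
        # Repeat the widened row vertically (fresh list each time).
        out.extend(list(wide) for _ in range(factor))
    return out
```

Calling upscale(downsample(img, 4), 4) gives back an image of the same size (when the dimensions are multiples of 4) in which every 4x4 cell is one flat color. Upscaling by whole-number factors with no blending is what keeps the pixel edges crisp.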

Experimental

Try passing your outputs back through PAD as an init image (for best results, you should probably use the same seed you used for the init). This might be a disaster, but it might also improve the overall pixellated/aesthetic effect and details.

Below are a few re-renderings of old favorite prompts of mine in quasi-pixelated glory:

For a list of Google Colab notebooks that you can use to make your own AI art, see KaliYuga's Library of AI Google Colab Notebooks.

Very cool, thank you for releasing this open source, I'll play with this tonight :)

Woah congrats this is really cool, can't wait to try it!

Let me know how it goes :D

what's your opinion on imagen by google?

I honestly haven't played with it yet, as I've been deving Pixel Art Diffusion the whole time it's been out, and that's taken all my energy. What do you think of it?