My Python Tools: #1 Langdetect

By Enio...

I am pleased to introduce My Python Tools, a series about programming tools related to the Python language. It will cover snippets, scripts, libraries, frameworks and applications, among others, in a way that is accessible to both specialist and non-specialist audiences. Without further ado, let's review this edition's tool.

Resource name

Langdetect

Understanding a few concepts

Content served as web documents often includes metadata that lets applications recognize its dominant natural language. The same is true of many REST APIs, which specify the language as context information in the data they serve. Whether we are working on the backend or the frontend, this will not always be the case and, depending on the functionality we need, we may have to determine at runtime the language(s) present in a string based solely on that string.

Some approaches to language recognition are easy to implement, but a quick implementation is usually a limited solution rather than a universal one. For example, I have implemented the approach based on recognizing common words or tokens.

These are usually made up almost entirely of prepositions, articles, pronouns, etc. Thus, to recognize whether a text is written in English, it may be sufficient to look for the words the, and, of, to, a, in, it, you, he, for, among others. The key would then be to measure and compare the frequency with which these words appear.
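As a rough illustration of the common-word approach, here is a minimal sketch. The Spanish word list, the function name `guess_by_common_words` and the scoring rule (share of tokens that are common words) are my own assumptions for the example, not part of any library.

```python
import re
from collections import Counter

# Tiny, hand-picked word lists for illustration only; realistic lists
# would be derived from large corpora.
COMMON_WORDS = {
    "en": {"the", "and", "of", "to", "a", "in", "it", "you", "he", "for"},
    "es": {"el", "la", "de", "que", "y", "en", "un", "los", "se", "por"},
}


def guess_by_common_words(text):
    """Return (language, scores); each score is the share of tokens
    in the text that belong to that language's common-word list."""
    tokens = re.findall(r"[^\W\d_]+", text.lower())  # runs of letters only
    if not tokens:
        return None, {}
    counts = Counter(tokens)
    scores = {
        lang: sum(counts[word] for word in words) / len(tokens)
        for lang, words in COMMON_WORDS.items()
    }
    best = max(scores, key=scores.get)
    # If no common word was found at all, refuse to guess.
    return (best if scores[best] > 0 else None), scores


print(guess_by_common_words("The cat is in the house and it is happy"))
print(guess_by_common_words("El gato que vive en la casa de los vecinos"))
```

The limits show up quickly: short texts, or texts that avoid these words, give no signal at all, and every new language needs its own curated list.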

If we want a more robust and more general solution, we have to apply an approach based on n-grams. In this context, an n-gram is a sequence of n consecutive characters taken from a word. For example, Wes and Str are n-grams of 3 characters in length. They occur in both English and Spanish, but their frequency in English words far exceeds their frequency in Spanish words. For that reason, each n-gram receives a probability or weight per language.
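To make the idea concrete, this small sketch extracts character n-grams from a few words and counts them. The helper names `char_ngrams` and `ngram_counts` and the word list are made up for the example.

```python
from collections import Counter


def char_ngrams(word, n=3):
    """Return every run of n consecutive characters in the word."""
    return [word[i:i + n] for i in range(len(word) - n + 1)]


def ngram_counts(words, n=3):
    """Count how often each n-gram appears across a list of words."""
    counts = Counter()
    for word in words:
        counts.update(char_ngrams(word.lower(), n))
    return counts


english = ngram_counts(["west", "street", "strong", "western"])

print(char_ngrams("street"))           # ['str', 'tre', 'ree', 'eet']
print(english["str"], english["wes"])  # 2 2
```

Counts like these, gathered over a large corpus per language, are the raw material for the per-language weights.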

In the end, the languages present are detected probabilistically, based on the presence and absence of these n-grams in a given text. Language detection of this kind is treated as a classification problem within natural language processing.
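The following toy classifier sketches how such a probabilistic decision could be made with naive Bayes over character trigrams. It is not langdetect's actual implementation: the two training sentences are invented, and the smoothing and uniform prior are simplifying assumptions.

```python
import math
from collections import Counter


def trigrams(text):
    """Character trigrams of the lowercased text, padded with one space per side."""
    padded = f" {text.lower()} "
    return [padded[i:i + 3] for i in range(len(padded) - 2)]


# Toy training data invented for this example; real profiles are built
# from large corpora.
SAMPLES = {
    "en": "the street in the west of the town is where the strong wind blows",
    "es": "la calle que está en el oeste de la ciudad es donde sopla el viento",
}

PROFILES = {lang: Counter(trigrams(text)) for lang, text in SAMPLES.items()}
VOCABULARY = set().union(*PROFILES.values())


def classify(text):
    """Return {language: probability} using naive Bayes with add-one smoothing."""
    log_scores = {}
    for lang, profile in PROFILES.items():
        total = sum(profile.values()) + len(VOCABULARY)
        log_prior = math.log(1 / len(PROFILES))  # uniform prior over languages
        log_likelihood = sum(
            math.log((profile[gram] + 1) / total) for gram in trigrams(text)
        )
        log_scores[lang] = log_prior + log_likelihood
    # Normalise the log scores into probabilities that sum to 1.
    top = max(log_scores.values())
    weights = {lang: math.exp(score - top) for lang, score in log_scores.items()}
    norm = sum(weights.values())
    return {lang: weight / norm for lang, weight in weights.items()}


print(classify("the wind in the street"))
print(classify("el viento de la calle"))
```

With such tiny profiles the result is only meaningful for text close to the training sentences, which is exactly why realistic weights matter.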

Of course, this is not something you implement in a 10-line script. The n-gram weights per language alone would take up several files, not to mention that the weights must be realistic. It makes more sense to use a library that has already done this work. That is the case with Python's langdetect.

Description

The Python langdetect library recognizes the language or languages present in a given text. It currently supports 55 languages: Afrikaans, Albanian, Arabic, Bengali, Bulgarian, Catalan, Chinese, Croatian, Czech, Danish, Dutch, English, Estonian, Finnish, French, German, Greek, Gujarati, Hebrew, Hindi, Hungarian, Indonesian, Italian, Japanese, Kannada, Korean, Latvian, Lithuanian, Macedonian, Malayalam, Marathi, Nepali, Norwegian, Persian, Polish, Portuguese, Punjabi, Romanian, Russian, Slovak, Slovenian, Somali, Spanish, Swahili, Swedish, Tagalog, Taiwanese-Mandarin, Tamil, Telugu, Thai, Turkish, Ukrainian, Urdu, Vietnamese and Welsh.

The algorithm behind this library comes originally from Nakatani Shuyo and Google; it is open source and available on GitHub. An extensive presentation of how the algorithm works is available on SlideShare. The version presented here (langdetect) is a Python port of the same algorithm.

Some features

- Supports 55 languages

- Detects the language using a naive Bayesian filter

- Its developers claim that it can achieve 99% detection accuracy.

- It is not deterministic, i.e., given the same input, the results may change from one run to the next.
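If you need repeatable results, the langdetect README describes fixing the seed through `DetectorFactory.seed`. Below is a minimal sketch of that idea; the wrapper name `detect_langs_reproducibly` is my own and not part of the library.

```python
def detect_langs_reproducibly(text, seed=0):
    """Run detect_langs with a fixed seed so repeated calls agree."""
    # Imported inside the function so this sketch still loads on a
    # machine where langdetect is not installed yet.
    from langdetect import DetectorFactory, detect_langs

    # Class-level setting: it also affects every later detection
    # made in the same process.
    DetectorFactory.seed = seed
    return detect_langs(text)
```

Calling `detect_langs_reproducibly(text)` twice on the same string should then return the same languages with the same probabilities.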

Website or repository

https://pypi.org/project/langdetect/

Example

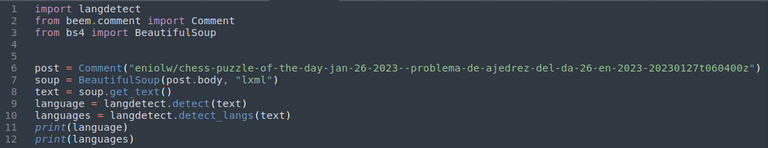

The following script demonstrates the library by detecting the languages contained in one of my Hive posts. First, the post is downloaded using beem. Then the input is cleaned up a bit by removing the HTML code with BeautifulSoup, although this step may be unnecessary. Finally, we run two functions: detect, which returns the most likely language code, and detect_langs, which returns the most likely languages present along with their probabilities.

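```python
import langdetect
from beem.comment import Comment
from bs4 import BeautifulSoup

# Download the post from the Hive blockchain
post = Comment("eniolw/chess-puzzle-of-the-day-jan-26-2023--problema-de-ajedrez-del-da-26-en-2023-20230127t060400z")

# Strip any HTML markup from the post body (the "lxml" parser must be installed)
soup = BeautifulSoup(post.body, "lxml")
text = soup.get_text()

# Most likely language code, then all likely languages with their probabilities
language = langdetect.detect(text)
languages = langdetect.detect_langs(text)

print(language)
print(languages)
```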

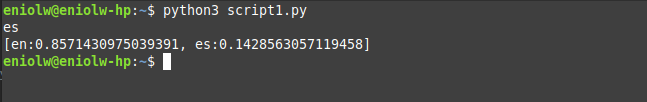

The output shows that the library considers English the most likely language and that the post does indeed contain text in both English and Spanish. Languages are reported as ISO 639-1 codes, so you may have to familiarize yourself with them. The calculated probabilities are included as well. If you run the script again, you will see that the results vary a little.
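The items returned by detect_langs expose the code and the probability as the attributes `lang` and `prob`, so they are easy to turn into something more readable. This is a small sketch of that; the name table is a partial, hand-written mapping, and the stand-in values are made up so the example runs without downloading a post.

```python
from types import SimpleNamespace

# Partial, hand-written mapping from ISO 639-1 codes to names (illustrative only).
LANGUAGE_NAMES = {"en": "English", "es": "Spanish", "fr": "French", "pt": "Portuguese"}


def describe(results, min_prob=0.1):
    """Format items that expose .lang and .prob, as detect_langs results do."""
    lines = []
    for item in results:
        if item.prob >= min_prob:
            # Fall back to the raw code when the name is not in the table.
            name = LANGUAGE_NAMES.get(item.lang, item.lang)
            lines.append(f"{name}: {item.prob:.0%}")
    return lines


# Stand-in values shaped like a detect_langs result; the numbers are invented.
fake_results = [
    SimpleNamespace(lang="en", prob=0.71),
    SimpleNamespace(lang="es", prob=0.29),
]
print(describe(fake_results))  # ['English: 71%', 'Spanish: 29%']
```

With langdetect installed, `describe(langdetect.detect_langs(text))` would work the same way on real results.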

In summary

The next time you paste a text of unknown language into Google Translate and ask it to detect the language automatically, remember that the functionality behind the scenes most likely works along the same lines as the one shown here, with a different implementation of course. The langdetect library is quite minimalistic, but effective, and it will help you solve more than one problem.

Wow such a cool library!! I will test it for sure

Great, It can be useful for sure!

Great job. Your tool is fantastic. It will be more helpful for us if used properly, thank you

interesting. wonder if it will work with multi-language posts.

I tried with a three-language post and it could detect the three of them, although sometimes it only detected two. That's because it isn't deterministic.

That is good news. Thanks for the information

Wow!! this is beautiful. I just enrolled in a programming course because it is always fascinating how programmers write languages that birth beautiful outward results, I am only trying to find time within my current job as a health worker and learning codes. I do not know if I can become a professional coder in the future but currently, I am doing it because I am inquisitive, and want to learn something new for fun.

Thank you for sharing your knowledge. Interesting the use we can give to the Langdetect library. I have already been practicing with it, thanks to your publication. Greetings and looking forward to new publications of this type.

I'm glad you got to test it. Thanks for stopping by.
