Data tokenization is the process of replacing sensitive or confidential data with unique tokens, or placeholders, to improve data security and privacy. Instead of storing or transmitting the actual sensitive information, such as credit card numbers or personal identification numbers (PINs), a system substitutes tokens for those values. The tokens are generated by an algorithm, often randomly, and have no inherent meaning or value, which makes them useless to anyone who gains unauthorized access to them.

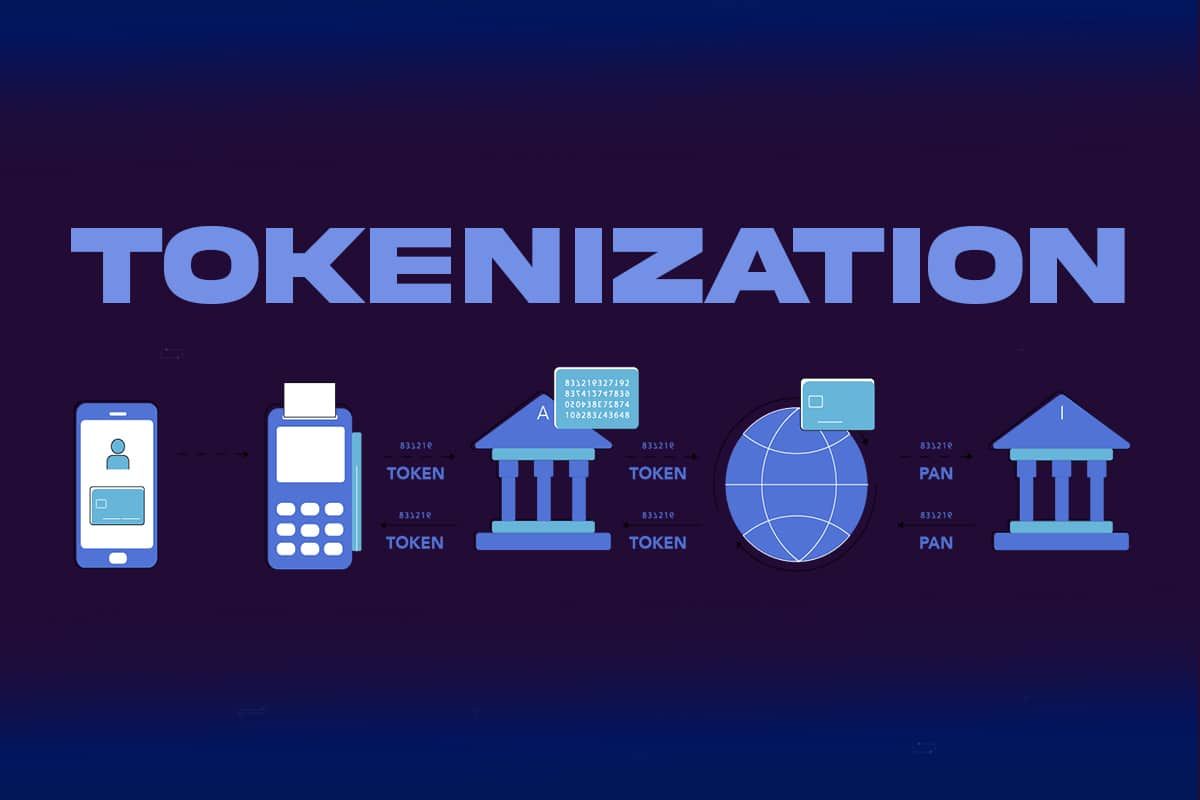

Here's how data tokenization works:

Data Identification: First, you identify the sensitive data that needs protection, such as credit card numbers, social security numbers, or personal names.

Tokenization Process: When a piece of sensitive data is collected, it is replaced with a randomly generated token. This token is typically meaningless on its own and does not contain any sensitive information. The mapping between the original data and the token is securely stored in a database or token vault.

Storage and Handling: The tokens, which are not sensitive, can be stored and processed without the same level of security precautions as the original data. This reduces the risk associated with handling sensitive information. Even if an attacker gains access to the tokenized data, they won't be able to reverse-engineer it to obtain the original sensitive data without access to the tokenization system.

Token Mapping: To use the tokenized data, you need to maintain a mapping between tokens and their corresponding original data. This mapping is stored securely in a separate system and is only accessible by authorized personnel or systems.

Referential Integrity: Tokenization preserves referential integrity, meaning that when you replace sensitive data with tokens, you can still use those tokens in place of the original data for various purposes like processing payments or conducting database queries. The system can seamlessly map tokens back to the actual data when needed.

Security Measures: The security of the tokenization process is crucial. Access to the token vault or mapping database must be tightly controlled, and encryption and access controls should be used to protect both the tokens and the mapping.

Tokenization Use Cases: Tokenization is commonly used in industries like finance for securing payment card data, in healthcare to protect patient information, and in various other sectors where data privacy is paramount.
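
The steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the `TokenVault` class, the `tok_` prefix, and the in-memory dictionaries are all hypothetical stand-ins for a real, encrypted and access-controlled token vault. It assumes one common design choice, namely that the same input always receives the same token, which is one way to preserve referential integrity.

```python
import secrets


class TokenVault:
    """Hypothetical in-memory vault; a real one would be a hardened, separate store."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, value: str) -> str:
        # Reuse an existing token so the same value always maps to the same token.
        if value in self._value_to_token:
            return self._value_to_token[value]
        # The token is random, so it carries no information about the value.
        token = "tok_" + secrets.token_hex(16)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Only the vault's mapping can turn a token back into the original value.
        return self._token_to_value[token]


vault = TokenVault()
card = "4111 1111 1111 1111"  # widely used test card number, not a real account

# Downstream records store only the token, never the card number.
orders = [
    {"order_id": 1, "card": vault.tokenize(card)},
    {"order_id": 2, "card": vault.tokenize(card)},
]

# Both orders carry the same token, so they can still be grouped or joined.
same_customer = orders[0]["card"] == orders[1]["card"]
original = vault.detokenize(orders[0]["card"])
```

Because the orders table holds only tokens, queries that group or join on the card column keep working, while recovering the card number requires a call to the vault.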

How important is data tokenization?

Data tokenization is highly important, particularly in industries and contexts where sensitive information needs to be safeguarded. Its importance can be understood from several perspectives:

Data Protection: Data breaches can be costly and damaging to an organization's reputation. Tokenization significantly reduces the risk of data exposure since even if an attacker gains access to tokenized data, they won't have access to the actual sensitive information without the tokenization system.

Regulatory Compliance: Many industries are subject to strict data protection regulations (e.g., GDPR, HIPAA, PCI DSS). Tokenization helps organizations comply with these regulations by minimizing the amount of sensitive data that must be stored and protected.

Privacy Enhancement: Tokenization enhances individual privacy by ensuring that sensitive personal information, such as social security numbers or medical records, is not stored or transmitted in its raw form. This is especially important in healthcare, finance, and other sectors handling personal data.

Referential Integrity: Tokenization systems maintain the link between tokens and their corresponding original data, allowing authorized users to perform necessary operations (e.g., processing payments) without exposing sensitive information.

Risk Reduction: By tokenizing data, organizations reduce the risk associated with storing and handling sensitive information. This can lead to cost savings in terms of security measures and potential liability.

Simplified Security: Tokenization can simplify security practices since the focus shifts from securing sensitive data at multiple touch-points to securing the tokenization system itself.

Ease of Use: Tokenization can make data handling more efficient for legitimate users. They can work with tokens without needing to be concerned about the intricacies of securing sensitive data.

Scalability: Tokenization can scale with an organization's needs. Whether dealing with a small amount of sensitive data or vast datasets, tokenization remains an effective method of protection.

While data tokenization is highly valuable, it's important to note that it is not a one-size-fits-all solution. Its implementation should be carefully planned, and organizations must ensure that the tokenization system itself is adequately protected, as compromising the tokenization infrastructure could lead to data exposure.
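
Since protection now rests on the tokenization system itself, detokenization is the operation to guard most closely. The sketch below shows one possible shape for that check. The `GuardedVault` class, the caller names, and the allow-list approach are assumptions made for illustration; a real deployment would rely on its own authentication and authorization infrastructure.

```python
import secrets


class GuardedVault:
    """Hypothetical vault that only detokenizes for callers on an allow-list."""

    def __init__(self, authorized_callers):
        self._authorized = set(authorized_callers)
        self._store = {}

    def tokenize(self, value: str) -> str:
        token = secrets.token_urlsafe(16)
        self._store[token] = value
        return token

    def detokenize(self, token: str, caller: str) -> str:
        # Refuse to reveal the original value to callers that are not authorized.
        if caller not in self._authorized:
            raise PermissionError(f"{caller} may not detokenize")
        return self._store[token]


vault = GuardedVault(authorized_callers={"payment-service"})
token = vault.tokenize("123-45-6789")  # made-up example value

# An unauthorized caller can hold the token but cannot recover the value.
try:
    vault.detokenize(token, caller="analytics-job")
    denied = False
except PermissionError:
    denied = True

# An authorized caller gets the original value back.
recovered = vault.detokenize(token, caller="payment-service")
```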

Benefits and limitations of tokenization

Data tokenization offers several benefits but also has some limitations. Here's a breakdown of both:

Benefits of Data Tokenization:

Enhanced Security: Tokenization significantly improves data security by replacing sensitive information with meaningless tokens. This minimizes the risk of data breaches and unauthorized access to sensitive data.

Data Privacy: Tokenization helps organizations comply with data protection regulations (e.g., GDPR, HIPAA) by ensuring that sensitive data is not stored or transmitted in its raw form, enhancing individual privacy.

Referential Integrity: Tokenization systems maintain the link between tokens and original data, allowing authorized users to perform necessary operations while still protecting sensitive information.

Simplified Compliance: Compliance with industry-specific security standards and regulations becomes more manageable because tokenization reduces the scope of sensitive data that must be protected.

Reduced Liability: Tokenization can reduce the financial and legal liability associated with data breaches, potentially saving organizations significant costs.

Efficient Data Handling: Authorized users can work with tokens without needing to handle sensitive data directly, streamlining data processing workflows.

Scalability: Tokenization can scale to handle small to large datasets and various types of sensitive information.

Limitations of Data Tokenization:

Complex Implementation: Implementing a tokenization system can be complex and may require changes to existing data management processes and infrastructure.

Initial Setup Costs: There are upfront costs associated with implementing tokenization, including system development and integration, which may deter some organizations.

Token Storage: The secure storage of the token-to-data mapping is critical. If this mapping is compromised, the protection provided by tokenization can be undermined.

Performance Impact: Tokenization can introduce some performance overhead, especially when many tokens need to be generated, stored, and looked up. The overhead is often small, but it is worth measuring for high-volume workloads.

System Dependency: Organizations become dependent on the tokenization system. If this system fails or experiences issues, it could disrupt operations.

Data Recovery Complexity: Retrieving original data from tokens may be complex in some cases, especially if the tokenization system experiences issues or is unavailable.

Cost Considerations: While tokenization can save costs in the long run, there are initial investment costs. Organizations should weigh these against potential security benefits.

Are tokenization and encryption the same thing?

Tokenization and encryption are related but distinct data security techniques, each with its own purpose and characteristics.

Tokenization involves replacing sensitive data with a unique identifier or "token." These tokens are typically random, meaningless values that have no inherent value or connection to the original data. The mapping between the original data and its corresponding token is securely stored in a separate database or token vault. Tokenization is often used in scenarios where you need to protect sensitive data while still maintaining referential integrity. It is commonly used in payment processing systems, healthcare, and other industries to secure personal and financial information.

Encryption, on the other hand, is the process of transforming data into an unreadable format using cryptographic algorithms. The original data is transformed into ciphertext, which can only be decrypted back into its original form by someone with the appropriate decryption key. Encryption is used to ensure data confidentiality, especially when data is in transit (e.g., during data transmission over the internet) or when data is stored on devices or servers.

Here are some key differences between tokenization and encryption:

Purpose:

Tokenization primarily focuses on protecting sensitive data by replacing it with tokens while preserving the ability to perform certain operations with the data.

Encryption primarily focuses on data confidentiality by rendering the data unreadable and requiring a decryption key to make it readable again.

Data Recovery:

Tokenization allows for the retrieval of original data from tokens as needed because the mapping is maintained.

Encryption requires a decryption key to recover the original data, and without the key, the data remains unreadable.

Data Relationship:

Tokenization maintains a clear relationship between tokens and original data, which is crucial for some business operations.

Encryption produces ciphertext that is computed from the original data and a key. That relationship is hidden from anyone without the decryption key, but it can be reversed by anyone who holds it, with no lookup table required.

Processing Overhead:

Tokenization can involve less cryptographic processing than encryption, because resolving a token is a lookup rather than a computation. The vault lookup adds its own latency, however, so which approach is faster depends on the system.
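
The contrast in data recovery can be made concrete with a small sketch. The encryption half uses a toy one-time pad (XOR with a random key of the same length) purely so the example runs with the standard library; this is an assumption made for illustration, and a real system would use a vetted cipher from an established cryptography library. The variable names and the dictionary vault are likewise hypothetical.

```python
import secrets

secret = b"4111111111111111"  # widely used test card number, not a real account

# Tokenization: the token is random, and only the vault entry links it to the data.
vault = {}
token = secrets.token_hex(8)
vault[token] = secret

# Encryption (toy one-time pad): the ciphertext is computed from the data and the key.
key = secrets.token_bytes(len(secret))
ciphertext = bytes(b ^ k for b, k in zip(secret, key))


def decrypt(data: bytes, pad: bytes) -> bytes:
    # XOR with the same key reverses the transformation; no lookup table is needed.
    return bytes(c ^ k for c, k in zip(data, pad))


# Recovering the original needs the vault for a token, and the key for ciphertext.
recovered_from_vault = vault[token]
recovered_from_key = decrypt(ciphertext, key)
```

In both cases the original value comes back, but for different reasons: the token is resolved through stored state, while the ciphertext is resolved by computation with the key.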

Organizations often use a combination of both tokenization and encryption to achieve comprehensive data security. For example, sensitive data might be tokenized for ease of processing, and then the tokens might be encrypted for an additional layer of protection, especially when data is transmitted over networks or stored on devices.