Hate speech policies on social media sites pose a problem for freedom of expression online. While generally well-intentioned, these policies are vague and subjective enough to sweep up far more than hateful content. The current trend is to broaden them even further, putting older content that was previously acceptable in the crosshairs. Social media sites are private entities not bound by the First Amendment, but they often express a commitment to the principle of free speech. Broad terms of service lead to content restrictions that break that commitment.

What constitutes hateful content is subjective because it is up to the person reviewing the content to determine the uploader's intent. To placate the outrage mobs, sites err on the side of removing borderline or satirical content. Twitter notably suspended users for satirical posts that replaced 'white people' with minority groups, but left up the anti-white posts that the satire was based on. When you can't even use humor to make fun of hate, that's a problem.

As an example, here is the hate speech policy for YouTube:

We encourage free speech and try to defend your right to express unpopular points of view, but we don't permit hate speech.

Hate speech refers to content that promotes violence against or has the primary purpose of inciting hatred against individuals or groups based on certain attributes, such as:

race or ethnic origin

religion

disability

gender

age

veteran status

sexual orientation/gender identity

There is a fine line between what is and what is not considered to be hate speech. For instance, it is generally okay to criticize a nation-state, but if the primary purpose of the content is to incite hatred against a group of people solely based on their ethnicity, or if the content promotes violence based on any of these core attributes, like religion, it violates our policy.

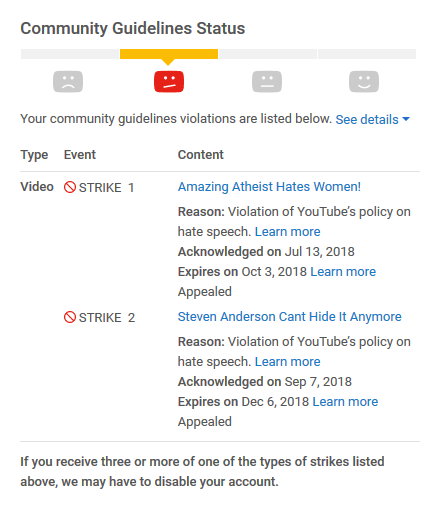

This policy leaves the intent of flagged content entirely to the reviewer's personal discretion. I received strikes on YouTube for two- to three-year-old parody/satire videos composed of out-of-context clips edited for comedic effect. The videos were not hateful. They did not promote violence or incite hatred. If anything, they were making fun of being hateful.

I appealed the strikes, and the appeals were rejected. YouTube apparently doesn't care about context, so I was forced to remove the entire playlist of out-of-context videos. I plan to reupload the videos to DTube.

First reuploaded video:

Free Speech Resources

Other Free Speech Posts:

Count Dankula Sentenced

UK Speech Police Offended Again

Lèse-majesté: Archaic Anti-Speech Law

California Bill Threatens Online Press and Speech

UK Parliament Report on Campus Free Speech

Thoughtcrime in the UK?

New Study Shows College Students Conflicted on Free Speech

Who is most supportive of free speech?

Campus Free Speech Zones
