I hope I have given a good foundation on the importance of freedom of speech and the dangers of censorship, as well as a brief introduction to how the internet has evolved in these areas. In summary, social media is showing a worrisome trend toward censorship that appears to be pervasive and ideological in nature. The reason for this is not clear to me: there is no financial incentive to do it, and in fact, policing thought and speech on a platform as large as YouTube, Facebook, or Twitter appears to carry a great financial cost. Computer algorithms have been written, but these are only as neutral as those who write them. Tens of thousands of moderators have been hired, and yet the same "hate speech" appears to be policed differently depending on the author and/or recipient. Perhaps an attempt at political control, or even social engineering, outweighs the tremendous financial costs. I've heard rumors that other, less liberal countries have pressured the tech companies into this censorship, but I have no proof of that. Regardless, the systematic elimination of entire swaths of social, cultural, or political thought (and of the record of that thought on the internet) is extremely concerning. The modern-day equivalent of "book burning," along with mob shaming, deplatforming, and even doxxing, continues to narrow the range of acceptable discourse right down to the level of individual words. So what can be done about this?

I see a number of options, although most of them are not ideal. I will start with the most promising, which is legislative action. Other options include lawsuits, addressing the Section 230 status of these companies, trust-busting, and getting these platforms recharacterized as public utilities.

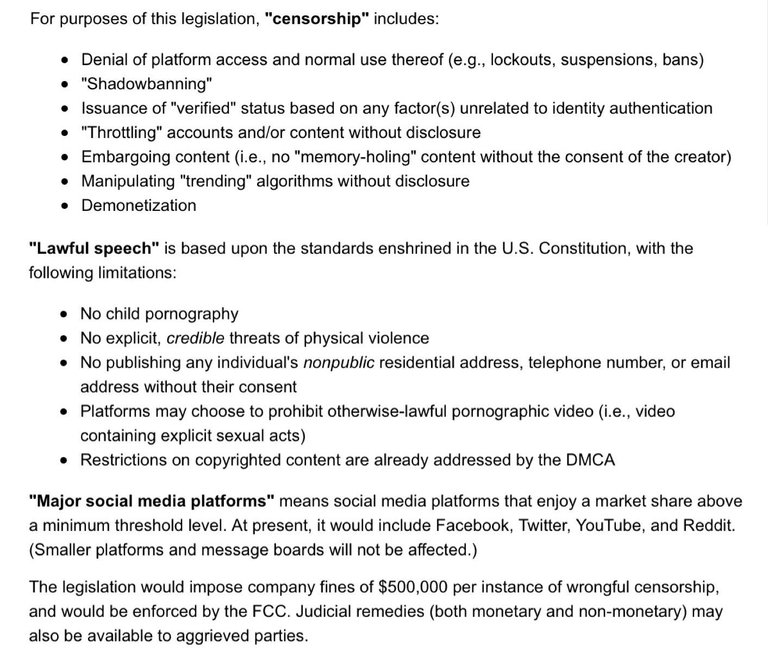

The most efficient tactic to combat censorship is legislative. The most sensible proposal I have seen is the Social Media Anticensorship Act (#SMACA) proposed by Attorney Josh Smith. I will append an outline of the proposal to this post, but in brief it does the following: 1) identifies social media companies with a significant impact on public discourse (this could be determined by number of users or market share, for example), and 2) makes it illegal for these very large companies to censor any speech that is otherwise legal in the U.S., subject to a large per-occurrence fine. The advantages are that it is simple and could be passed quickly with a simple majority. Also, the social media companies may actually welcome being relieved of the responsibility (and of the social pressure from a loud minority of activists or advertisers) of policing their posts for anything other than outright illegality. This would raise international issues (different laws in different countries), and it could even prompt a company to relocate to another country in order to keep its user bases there while avoiding the fines. Still, I see this type of basic legislation as a central part of an Internet Bill of Rights.

Some people are pursuing lawsuits. This is extremely expensive, and these tech companies have very deep pockets. Lawsuits are also quite time-consuming (potentially taking many years), which allows significant damage to be done in the interim. But there are a few ways to approach this problem from a legal perspective. The most common-sense claim would be discrimination, but proving it would be difficult, and even then one would have to show that the discrimination was illegal (most discrimination is not). It is not illegal to discriminate on the basis of political beliefs, although there could be some claims based on currently protected classes. However, the fact that these firms are private, along with their intentionally vague Terms of Service, would create significant obstacles. I am no lawyer, so I cannot speak to the details here. At best, this seems to be a tactic to pursue in tandem with another one, and some people and organizations have already announced their intention to file suits or are in the midst of one.

Another way to address social media company abuses would be to challenge the Section 230 status of these companies (and to define platform versus publisher). Again, I do not know enough about this to have an informed opinion, but it does appear to be a path worth pursuing. The sway these companies hold over our government would make this difficult, but citizens from various ideological backgrounds could find common ground on the potential cronyism at play here, so perhaps this could gain traction.

Another tactic would be an antitrust one. There is no doubt in my mind that these platforms have been "speaking" with one voice, as the recent deplatforming of Alex Jones by all of them at the same time, but for different (or no stated) reasons, would seem to indicate. But the fact that social media is already divided among perhaps five or six different companies has not solved the problem, so it is not clear that breaking them up further would have the intended effect. This would also potentially take many years to achieve, and thus may not be a practical solution. A slightly more fractured Big Tech could still work in concert to achieve the same results we have now.

A final tactic would be to get these corporations categorized as public utilities and then regulated by the government. Although I have not explored this option in depth, it has some concerning characteristics on its face. The unofficial cooperation between Big Tech and government already raises major privacy concerns. Giving the government unfettered access to every aspect of our lives would not only eliminate any last semblance of privacy we have, but would also invite it to monitor and regulate our speech. I envision another large bureaucracy (a Department of Social Media?) with thousands of pages of regulations. What could go wrong with that? Part of the reason we need free speech is to keep our government from becoming tyrannical. Given the speech laws recently added to the books in other Western countries, such as Canada and those in Europe, this could open the door to even worse control here. Also, what happens when these platforms naturally die and new ones take their place? At what point does the government take over a specific company? Again, I would love to hear the arguments for this, as I can only envision downsides. I would like to further explore what this would involve in practice.

Those are the ideas I have heard, along with my initial thoughts on the particular problem of social media censorship, which already appears somewhat dystopian. An Internet Bill of Rights would have other major aspects as well (limits on what domain registrars or payment processors can do, for example).

So what do you think? Would any of these be practical or effective? Do you have a favorite tactic? Other ideas? Comment and/or remind, and let me know what you think.

#InternetBillofRights #censorship #socialmedia

Addendum: #SMACA proposed

text taken from Twitter @HalenIndus

I have to say that I disagree with the SMACA simply because it seems to go after only specific parties. Making a law that applies only to a select few, with the intent of forcing them to change their business, is pretty heavy-handed, especially if a smaller competitor can keep the same practices that are banned for the bigger platforms. You wouldn't allow a small pharmaceutical company to dump poison into the drinking water just because it doesn't have a certain percentage of the market, while banning its larger competitors from doing the same. It's also unreasonable to give that much power to the FCC. It doesn't have the resources to manage this kind of regulation, and enforcement would fall better under the FTC, which already has the resources. If you're interested, I have written an internet bill of rights, which is on my profile.