Introduction

When Bitcoin was first released to the public all the way back in 2009, it was launched with the promise of becoming a “peer to peer electronic cash system”. At the time of its release and for many years afterward, Bitcoin enabled relatively fast and very cheap transactions.

Beginning in late 2016, however, this latter aspect began to change. Fees climbed from a low of six cents to twenty-five cents by November 2016, and a year later, in December 2017, reached as high as $55 for some transactions. The rise in Bitcoin’s transaction fees was caused by explosive use of the digital asset: demand for transactions far exceeded what could fit into Bitcoin’s 1MB blocks (or, since SegWit, its block weight limit). Despite optimizations such as SegWit activation and proposed Schnorr signatures, these changes do not scale Bitcoin’s transaction throughput enough to meet demand without fees of at least a few dollars for relatively fast transactions.

Ethereum has had similar scaling issues, as it has grown in popularity much more quickly than its developers anticipated. Though Ethereum can handle approximately one million transactions per day with low fees, a burst of high-profile activity, such as games or ICOs, can cause fees to rise very quickly as demand exceeds the network’s capacity. Unlike Bitcoin, Ethereum has a block gas limit that miners can adjust, but the network is currently at roughly the highest gas limit it can sustain without miners producing an undesirable number of “uncle blocks” (valid blocks that are not included in the main chain).

The "Shares" Model

EOS promises no fees and high transaction throughput

Many projects, such as BitShares, NEO, Steem, NEM, EOS, and Tezos, have adopted what is often described as “Delegated Proof of Stake” (DPoS), or closely related delegated schemes, as an alternative to more traditional Proof of Work (PoW) consensus mechanisms.

The “delegated” part of the name comes from how block producers are chosen. A small number of special nodes, called “witnesses” or “block producers”, take turns producing blocks and decide which actions are included in each one. Users “delegate” their influence (usually in the form of a token or a derivative of their token) by voting for who gets to be a witness.
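The voting step can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not any chain’s actual algorithm: it assumes approval-style voting in which each voter’s full stake counts toward every candidate they approve, and `elect_witnesses`, along with the vote and stake formats, is hypothetical. Real networks may add vote limits, proxies, or vote decay.

```python
from collections import defaultdict


def elect_witnesses(votes, stakes, num_witnesses):
    """Return the `num_witnesses` candidates with the most stake-weighted votes.

    votes:  voter -> set of candidates the voter approves (hypothetical format)
    stakes: voter -> token balance, used as that voter's voting weight
    """
    tally = defaultdict(int)
    for voter, candidates in votes.items():
        weight = stakes.get(voter, 0)
        for candidate in candidates:
            # Approval voting: the voter's whole stake backs each chosen candidate.
            tally[candidate] += weight
    # Highest weight first; ties broken alphabetically so the result is deterministic.
    ranked = sorted(tally, key=lambda c: (-tally[c], c))
    return ranked[:num_witnesses]


stakes = {"alice": 100, "bob": 40, "carol": 10}
votes = {
    "alice": {"w1", "w2"},
    "bob": {"w2", "w3"},
    "carol": {"w3", "w4"},
}
print(elect_witnesses(votes, stakes, 2))  # ['w2', 'w1']
```

Because influence comes from holdings rather than head count, a single large holder can shape the witness set: here alice alone lifts w1 above w3, even though w3 has two backers.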

Because there are only a few nodes, each held to high performance requirements, this system can scale to throughput approaching that of centralized servers.

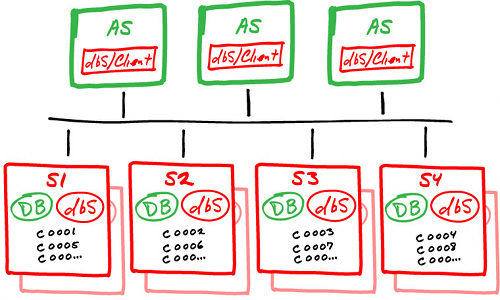

Transactions in the DPoS model are typically rate limited by the amount of native currency each user holds. For instance, a user with 10 BitShares can make 10 times as many transactions in the same span of time as a user with only 1 BitShare.

To further illustrate this concept, consider a timeshare arrangement between multiple people. In a timeshare, the person with more shares of the property can use it for more days of the year than someone with fewer shares.

This model eliminates per-transaction fees from the equation entirely.
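A minimal sketch of stake-proportional rate limiting follows. It assumes a hypothetical fixed time window in which each account may send a share of the network’s capacity equal to its share of total stake; `StakeRateLimiter` and its parameters are illustrative names, and real chains meter bandwidth or resources in their own ways.

```python
class StakeRateLimiter:
    """Hypothetical fixed-window limiter: each account's transaction allowance
    per window is proportional to its share of the total stake."""

    def __init__(self, stakes, capacity_per_window):
        self.stakes = dict(stakes)
        self.total_stake = sum(self.stakes.values())
        self.capacity = capacity_per_window
        self.used = {}  # transactions sent by each account in the current window

    def allowance(self, account):
        # Integer share of the window's capacity, rounded down.
        return self.capacity * self.stakes.get(account, 0) // self.total_stake

    def try_send(self, account):
        sent = self.used.get(account, 0)
        if sent >= self.allowance(account):
            return False  # limit reached: the account must wait for the next window
        self.used[account] = sent + 1
        return True

    def new_window(self):
        # Allowances refill at the start of every window.
        self.used.clear()


limiter = StakeRateLimiter({"ten_shares": 10, "one_share": 1}, capacity_per_window=110)
sent_big = sum(limiter.try_send("ten_shares") for _ in range(500))
sent_small = sum(limiter.try_send("one_share") for _ in range(500))
print(sent_big, sent_small)  # 100 10
```

No fee is charged anywhere; the only cost is that an account which exhausts its allowance is refused until `new_window` runs.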

The Elephant in the Room

So with no fees, high performance, and decentralization, why haven’t all blockchains moved to this model?

As it turns out, a DPoS model is not that different from today’s centralized servers, doesn’t necessarily eliminate fees entirely as its proponents might claim, and is actually less efficient than a fee-based transaction structure.

"Delegated"

The first issue with DPoS is that the number of important nodes is small. Usually this is under 25 nodes (with few exceptions), chiefly because network latency grows as more nodes are added, which lengthens block time. To achieve low latency, DPoS systems must keep the number of nodes small.

This introduces a denial-of-service (DoS) attack vector: if more than 33% of the nodes go down, the network is unable to continue producing blocks. It also means that if these witness nodes are located in just a few countries, such as China and Russia, the liveness of the network will depend heavily on the actions of those governments. In short, it is much less expensive to attack a “network” of only two dozen witness nodes than something on the order of Ethereum or Bitcoin, which have over 30,000 and 5,000 reporting nodes respectively.
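The arithmetic behind the one-third figure is easy to check. This sketch assumes, as the text does, that the chain needs strictly more than two thirds of its witnesses online to keep producing blocks; `supermajority` and `failures_to_halt` are hypothetical helper names.

```python
def supermajority(n):
    """Smallest number of witnesses that is strictly more than two thirds of n."""
    return (2 * n) // 3 + 1


def failures_to_halt(n):
    """Fewest offline witnesses that leave less than a supermajority online."""
    return n - supermajority(n) + 1


for n in (21, 101):
    k = failures_to_halt(n)
    print(f"{n} witnesses: {k} offline ({k / n:.0%}) stalls block production")
```

With 21 witnesses, an attacker only has to knock 7 machines offline, which is a far smaller target than thousands of independent nodes.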

The second issue with DPoS, with respect to decentralization, is that it can be very difficult for a new witness node to join the set of block producers. In DPoS, witnesses are typically voted in. The problem is that if a supermajority of witnesses decides to censor transactions, they can simply drop enough of the vote transactions cast against them to stay in power indefinitely. While transaction censorship is still possible in Proof of Work, the sheer number of independent parties in Proof of Work makes it much more difficult to achieve than in DPoS.
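The censorship problem can be illustrated with a toy election. In this hypothetical sketch, all incumbents collude (standing in for the supermajority described above), vote tallies are simply additive, and `run_election` is an illustrative name rather than any chain’s API.

```python
def run_election(witnesses, tally, pending_votes, censor):
    """Return the witness set after the pending vote transactions are processed.

    witnesses:     current block producers, who choose which transactions get included
    tally:         candidate -> stake-weighted votes already recorded on chain
    pending_votes: list of (candidate, weight) vote transactions awaiting inclusion
    censor:        if True, producers drop every vote for a non-incumbent
    """
    tally = dict(tally)  # work on a copy so the caller's tally is unchanged
    for candidate, weight in pending_votes:
        if censor and candidate not in witnesses:
            continue  # the vote never makes it into a block
        tally[candidate] = tally.get(candidate, 0) + weight
    ranked = sorted(tally, key=lambda c: (-tally[c], c))
    return set(ranked[: len(witnesses)])


incumbents = {"A", "B"}
on_chain = {"A": 50, "B": 40, "C": 0}
pending = [("C", 100)]
print(sorted(run_election(incumbents, on_chain, pending, censor=False)))  # ['A', 'C']
print(sorted(run_election(incumbents, on_chain, pending, censor=True)))   # ['A', 'B']
```

The challenger C has more stake behind it than either incumbent, yet as long as the incumbents control which transactions are included, that support never reaches the chain.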

Witnesses in DPoS can also advertise that they will prioritize their voters’ transactions over their opponents’ voters’ transactions, since there is no strict in-protocol rule for choosing which transactions to include (other than the rate limit, which prevents any one account from sending too many). This kind of bribery is detrimental to the network because it creates competing incentives for voters: do they vote for faster transactions or for fairer witnesses? Combined with the interests of national governments, this means the freedom to act on the blockchain may be restricted depending on the geographical distribution of the witness nodes.

Breaking Down Transaction Rate-limits

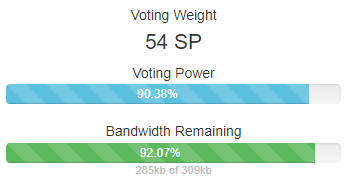

As these approach zero, my ability to do meaningful things on Steem diminishes.

At first it sounds great not to pay a transaction fee every time you make a transaction, and it is true that this can involve less mental calculation for certain types of users (namely the infrequent ones).

But removing the per-transaction fee also introduces a new constraint: a limit on the number of transactions over a time period. For certain actions on Steem, such as browsing, posting, and voting on content, this gives every action a cost. Do you post that comment, knowing it may prevent you from commenting on something else you care more about?

In other contexts, this can be even more detrimental. Imagine a business that wants to use a smart contract on a DPoS system very frequently. If it holds only a modest amount of the asset, it can perform a limited number of actions before the protocol forces it to wait. What if there is an emergency and the rate limit has already been hit?

To get around this, the business would need to hold more of the asset. Suddenly, buying the asset becomes a calculation of how many transactions you might need to make over a given time period. This is much harder, since you must essentially prepay the transaction fee before you know how much you will actually use the network. It doesn’t end up being cheaper than per-transaction fees unless you can perfectly predict your usage at the time of purchase.
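The prepayment problem reduces to a simple sizing formula. Assuming, hypothetically, that an account’s allowance per window equals the network’s capacity multiplied by its share of total stake, the stake needed to cover a given transaction rate can be computed directly; `stake_needed` is an illustrative name, and the capacity and supply figures below are made up.

```python
def stake_needed(tx_per_window, capacity_per_window, total_stake):
    """Tokens required for a stake-proportional allowance to cover `tx_per_window`.

    allowance = capacity * stake / total_stake, so
    stake     = tx_per_window * total_stake / capacity (rounded up).
    """
    return -(-(tx_per_window * total_stake) // capacity_per_window)  # ceiling division


CAPACITY = 1_000_000         # hypothetical network capacity, transactions per window
TOTAL_STAKE = 1_000_000_000  # hypothetical token supply

typical = stake_needed(100, CAPACITY, TOTAL_STAKE)
emergency = stake_needed(1_000, CAPACITY, TOTAL_STAKE)
print(typical, emergency)  # 100000 1000000
```

Sizing for the emergency means holding ten times the stake a typical day requires; sizing for the typical day means being throttled exactly when it matters most.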

(In)efficient Protocols

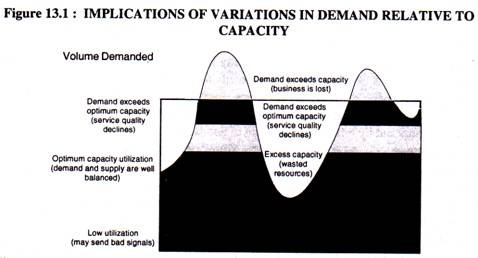

So far we’ve only discussed the problems in DPoS that arise when the network is able to meet demand. But what happens when demand exceeds the network’s capacity? Granted, this is less common thanks to the high performance requirements placed on nodes, but when capacity is exceeded, there is no mechanism to prioritize transactions.
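The difference between having and lacking a prioritization signal can be shown with two hypothetical block-filling rules. The fee-market rule includes the highest bids first; the other fills blocks in arrival order, one plausible stand-in for a system with no fee signal (producers could also choose at their own discretion). The mempool contents and function names are illustrative only.

```python
def fill_block_by_fee(mempool, capacity):
    """Fee market: include the highest-paying transactions first.

    sorted() is stable, so transactions with equal fees keep their arrival order.
    """
    return sorted(mempool, key=lambda tx: -tx["fee"])[:capacity]


def fill_block_in_arrival_order(mempool, capacity):
    """No fee signal: include transactions first come, first served."""
    return mempool[:capacity]


mempool = [
    {"id": "game_move_1", "fee": 0.01},
    {"id": "game_move_2", "fee": 0.01},
    {"id": "urgent_payment", "fee": 2.00},
]
print([tx["id"] for tx in fill_block_by_fee(mempool, 2)])
# ['urgent_payment', 'game_move_1']
print([tx["id"] for tx in fill_block_in_arrival_order(mempool, 2)])
# ['game_move_1', 'game_move_2']
```

With a fee market, the sender who values inclusion most can outbid the rest; without one, the urgent payment is simply left out when the block is full.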

One might argue that you could map the total capacity of the network onto all shares in existence, so that each share entitles its holder to a fixed slice of capacity. This way the network never hits maximum capacity, since everyone is rate limited before that happens. The problem with this model is that the network will then be vastly underutilized most of the time. Someone who buys a lot of shares purely as an investment acts like a timeshare squatter: the capacity reserved for their shares sits unused while they wait for the shares to appreciate. This makes the upfront fee calculation described above even more expensive.
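The underutilization argument can be quantified with a small model. It assumes, hypothetically, that each account is capped at a slice of capacity proportional to its stake and that one large holder sends no transactions; `utilization` is an illustrative name and the numbers are invented.

```python
def utilization(stakes, capacity, demand):
    """Fraction of network capacity actually used when every account is capped
    at its stake-proportional share of capacity."""
    total = sum(stakes.values())
    used = 0
    for account, stake in stakes.items():
        cap = capacity * stake // total
        used += min(cap, demand.get(account, 0))  # no account can exceed its own share
    return used / capacity


stakes = {"investor": 70, "app": 20, "user": 10}
demand = {"app": 500, "user": 50}  # the investor only holds; it sends nothing
print(utilization(stakes, 1_000, demand))  # 0.25
```

The app wants 500 transactions but is capped at 200, even though three quarters of the network’s capacity sits idle behind the investor’s shares.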

Alternatively, you might argue that you could prioritize users who hold more of the currency. But that has the same effect of making the upfront calculation when buying the currency more difficult, as you must correctly predict both your own usage and the network’s conditions at the time of use!

Recap

Even centralized servers make use of distributed computing.

Let’s recap the tradeoffs being made here. We trade a protocol with relatively limited bandwidth but a high degree of decentralization, a fee market for prioritizing and paying for transactions at the time of use, and a very expensive attack vector (a 51% attack) for a protocol with high bandwidth but a high degree of centralization, competing economic incentives, hard-to-predict upfront calculations, and network conditions that could be disrupted much more cheaply.

In my opinion, the best way to scale a blockchain is to leverage the distributed nature of its nodes to split up computation (via sharding, Enigma, or layer 2 solutions) so that work is processed in parallel while retaining the security of all the nodes in the network. The sacrifices and tradeoffs made for DPoS seem to offer only a marginal improvement over centralized architectures.

There is always a fee when using a blockchain, but the best blockchain tries to make that fee as cheap as possible.
