Just started this Ethereum thing and I am amazed by its potential. I can finally create my own democracy (who would have thought a Russian would revolutionize democracy?), redefine money, have my own cryptocoins and perhaps even do my part to help the world.

Before this, I thought that GPUs were just a phase and that FPGAs and ASICs were next in line.

Apparently I was wrong: Ethereum's mining algorithm is meant to be memory hard, and the hash at its core, Keccak256, is a sponge function.
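To make "sponge function" concrete, here is a minimal Python sketch of the absorb, pad and squeeze phases around the Keccak-f[1600] permutation. It is an illustration, not the miner code discussed in this post: the function names are my own, and it only handles outputs up to one rate block. Python's `hashlib.sha3_256` is the NIST variant, which differs from the original Keccak256 only in the padding byte, so it is used here purely as a cross-check.

```python
import hashlib  # only used to cross-check the sketch against SHA3-256

MASK64 = (1 << 64) - 1
RATE_BYTES = 136  # 1088-bit rate, 512-bit capacity, as used for 256-bit output


def rol64(value, shift):
    """Rotate a 64-bit lane left by shift bits."""
    shift %= 64
    return ((value << shift) | (value >> (64 - shift))) & MASK64


def keccak_f1600(state):
    """Apply the 24-round Keccak-f[1600] permutation to a 200-byte state."""
    lanes = [[int.from_bytes(state[8 * (x + 5 * y):8 * (x + 5 * y) + 8], "little")
              for y in range(5)] for x in range(5)]
    lfsr = 1
    for _ in range(24):
        # theta: XOR each lane with the parities of two neighbouring columns
        parity = [lanes[x][0] ^ lanes[x][1] ^ lanes[x][2] ^ lanes[x][3] ^ lanes[x][4]
                  for x in range(5)]
        for x in range(5):
            d = parity[(x + 4) % 5] ^ rol64(parity[(x + 1) % 5], 1)
            for y in range(5):
                lanes[x][y] ^= d
        # rho and pi: rotate each lane and move it to a new position
        x, y = 1, 0
        current = lanes[x][y]
        for t in range(24):
            x, y = y, (2 * x + 3 * y) % 5
            current, lanes[x][y] = lanes[x][y], rol64(current, (t + 1) * (t + 2) // 2)
        # chi: the only non-linear step, applied along each row
        for y in range(5):
            row = [lanes[x][y] for x in range(5)]
            for x in range(5):
                lanes[x][y] = row[x] ^ ((~row[(x + 1) % 5]) & row[(x + 2) % 5])
        # iota: XOR a round constant (generated by an LFSR) into lane (0, 0)
        for j in range(7):
            lfsr = ((lfsr << 1) ^ ((lfsr >> 7) * 0x71)) % 256
            if lfsr & 2:
                lanes[0][0] ^= 1 << ((1 << j) - 1)
    out = bytearray(200)
    for x in range(5):
        for y in range(5):
            out[8 * (x + 5 * y):8 * (x + 5 * y) + 8] = lanes[x][y].to_bytes(8, "little")
    return out


def sponge(message, suffix, out_len=32):
    """Absorb message at rate RATE_BYTES, pad, then squeeze out_len bytes.

    suffix is the domain-separation byte: 0x01 for original Keccak (the
    variant Ethereum calls Keccak256), 0x06 for NIST SHA3.
    This sketch assumes out_len <= RATE_BYTES.
    """
    state = bytearray(200)
    # absorb: XOR full rate-sized blocks into the state, permuting after each
    offset = 0
    while len(message) - offset >= RATE_BYTES:
        for i in range(RATE_BYTES):
            state[i] ^= message[offset + i]
        state = keccak_f1600(state)
        offset += RATE_BYTES
    # last partial block plus multi-rate padding (suffix ... 0x80)
    tail = message[offset:]
    for i, byte in enumerate(tail):
        state[i] ^= byte
    state[len(tail)] ^= suffix
    state[RATE_BYTES - 1] ^= 0x80
    state = keccak_f1600(state)
    # squeeze: one block is enough for a 32-byte digest
    return bytes(state[:out_len])


# Cross-check: with the SHA3 padding byte the sketch must agree with hashlib.
assert sponge(b"abc", 0x06) == hashlib.sha3_256(b"abc").digest()
```

Calling `sponge(data, 0x01)` gives the original-Keccak digest. Note that the permutation only ever touches a 200-byte state, so the hash by itself needs very little memory.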

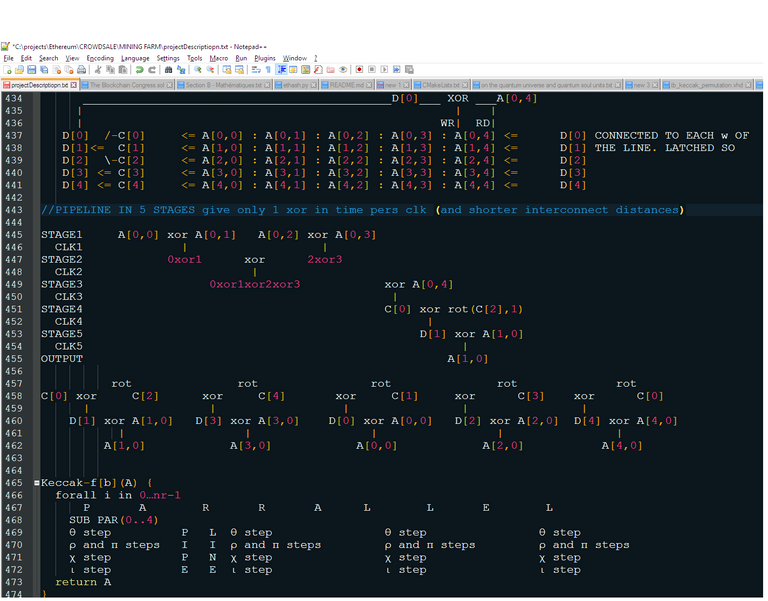

Last night I got into the thick of things and decided to learn the algorithm by heart: dissecting it, laying it out, rearranging it, and visualizing the circuit logic so I could design my very own miner chip.

Then I stumbled upon a dealbreaker... for the GPU

Altera, an FPGA manufacturer, is now owned by Intel, and together they have created an OpenCL platform that has achieved Khronos conformance.

After looking at all the specs of the Altera Stratix 10, I got interested and started analyzing the VHDL code of the Keccak256 algo used to synthesize the miner on an FPGA.

Right off the bat, in the chi phase, I saw that the developers had neglected to split the XOR of 5 words into a 3-stage pipeline. Without it, the max clock frequency goes down, because each signal has to pass through about three times as many gates between registers in a single clock cycle.

Then I realized that parallelism has not even been attempted. The VHDL code does not take device utilization into account. The bigger the FPGA, the more "copies" of the algorithm can run at the same time (which requires more memory, I presume). I suspect device utilization (the % of hardware actually used) was on the low end.
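Here is a small Python model of what a 3-stage pipeline for a 5-word XOR could look like. It is a software sketch of the idea, not the VHDL I was reading, and the class name and register layout are my own assumptions. Each stage does a single level of 2-input XORs, so the path between registers is one gate deep instead of three.

```python
MASK64 = (1 << 64) - 1


class PipelinedXor5:
    """Model of a 3-stage pipeline computing a ^ b ^ c ^ d ^ e on 64-bit words.

    Each attribute stands for a bank of registers; None means "no valid data".
    """

    def __init__(self):
        self.stage1 = None  # holds (a ^ b, c ^ d, e)
        self.stage2 = None  # holds (a ^ b ^ c ^ d, e)
        self.stage3 = None  # holds the final result

    def clock(self, words=None):
        """Advance one clock cycle, optionally feeding a new 5-tuple of words.

        Stages are updated back to front so that each one reads the value
        its predecessor held during the previous cycle, like real registers.
        Returns the content of the output register after the clock edge.
        """
        if self.stage2 is None:
            self.stage3 = None
        else:
            self.stage3 = (self.stage2[0] ^ self.stage2[1]) & MASK64
        if self.stage1 is None:
            self.stage2 = None
        else:
            self.stage2 = (self.stage1[0] ^ self.stage1[1], self.stage1[2])
        if words is None:
            self.stage1 = None
        else:
            a, b, c, d, e = words
            self.stage1 = (a ^ b, c ^ d, e)
        return self.stage3


def run_pipeline(inputs):
    """Feed every input on consecutive cycles, then flush; return the results."""
    pipe = PipelinedXor5()
    results = []
    for words in list(inputs) + [None, None]:  # two extra cycles drain the pipe
        out = pipe.clock(words)
        if out is not None:
            results.append(out)
    return results
```

The first result shows up on the third clock, but after that a new result comes out every cycle, which is the whole point: latency goes up a little, and the clock can run faster.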

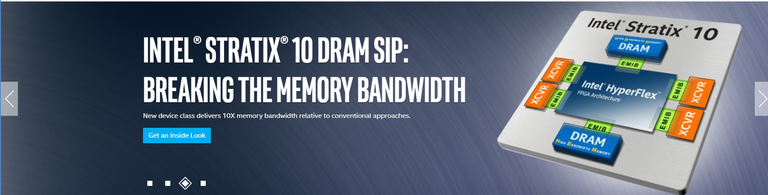

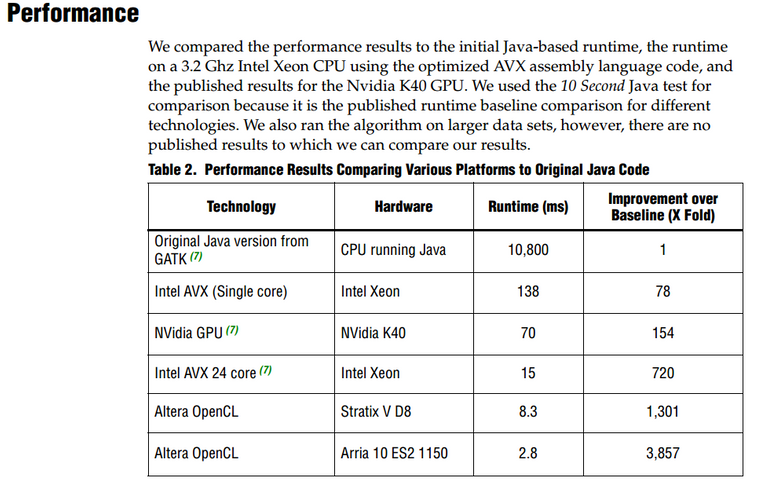

But one thing I know for sure is that even the older Stratix V FPGA model beats a GPU at decoding the human genome. The Stratix 10 has twice the clock capability and 5 times the chip density, so on paper that's a 10x factor right off the bat, without even optimizing the Keccak256 VHDL code.
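The back-of-the-envelope math behind that factor can be written down as a tiny Python sketch. Everything in it is an assumption for illustration: the function names are mine, the resource and clock figures in the usage lines are made up, and the model ignores memory bandwidth, routing and power, which could easily eat into the gain.

```python
def parallel_copies(device_logic, logic_per_core):
    """How many full hashing cores fit on the device (whole cores only)."""
    return device_logic // logic_per_core


def hashes_per_second(copies, clock_hz, cycles_per_hash):
    """Throughput if every copy finishes one hash every cycles_per_hash cycles."""
    return copies * clock_hz // cycles_per_hash


def relative_speedup(clock_ratio, density_ratio):
    """Idealized speedup: throughput scales with clock and with copy count."""
    return clock_ratio * density_ratio


# Hypothetical figures, chosen only to show the scaling, not taken from any datasheet.
old_rate = hashes_per_second(parallel_copies(100_000, 10_000), 200_000_000, 25)
new_rate = hashes_per_second(parallel_copies(500_000, 10_000), 400_000_000, 25)
print(new_rate // old_rate, relative_speedup(2, 5))
```

Twice the clock times five times the copies is where the 10 comes from; whether a real design gets anywhere near that is exactly what the benchmarks would have to show.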

1. Look at the difference in performance between the GPU and the 2 FPGAs at the bottom.

2. Now compare the Arria 10 (the best in the previous figure) to the Stratix 10. BOOM!

3. Yup, a $10,000 GPU card lost. The Stratix chip is around $250 retail.

Would I ignore this if I were a serious miner? Not one bit. I wanna see the benchmarks with the design fully optimized and the device fully loaded.

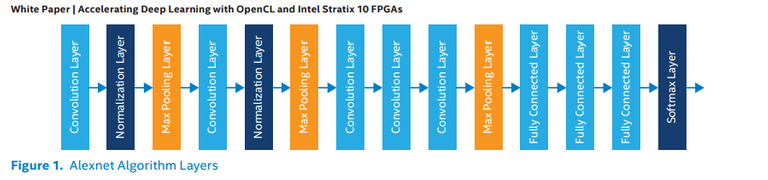

4. Helps to visualize the hardware.

Looks like big business, centralized banks and conglomerates are planning to massively deploy this recent innovation for big data, so we need to level the playing field!

5. Stream buffers

6. Sponge function

Where in the world did you see a $250 price point? The dev kit itself is priced at $8k. The chip alone is in the $20k~ range.