Recently some drama has begun between the EOS and Cardano communities over a series of posts and counter-posts by two figurehead gentlemen, Dan Larimer and Charles Hoskinson. Both of these guys have a long history (as long as long gets in this space, anyway) in blockchain tech and, from what I've read, have some personal beef. So, fine, they snipe at each other. I don't care about them, but I do care that the communities don't turn on each other or form "teams" built around their figureheads. Here's the series of posts, and then I'll break down what's happening and how these guys/groups are talking past each other:

Dan's critique: https://steemit.com/cardamon/@dan/peer-review-of-cardano-s-ouroboros

Charles's response: https://imgur.com/a/hA8O4 or

Dan's response to Charles's response: https://steemit.com/cardano/@dan/asking-the-right-question

This reads to me like a common tension in software development: engineering versus the academy. In hopes of clearing things up for the community, here's what's going on...

Research papers are peer-reviewed by other experts in the field, not by self-described peers as Dan asserts:

> A blockchain consensus algorithm claiming to value peer review needs to consider who they consider their peers and all such reviews should be public. In the blockchain space, our peers are other blockchain technology companies.

Cryptography and security research publications follow a very reliable pattern: describe the problem, describe what properties will hold under threat, describe your threat model (what the adversary is capable of), and show that against any adversary with the described capabilities, the properties hold. That's it. There should be no claim of performance characteristics or even of whether a protocol is implementable in the real world. Those are engineering problems. An unrelated example might help...

I came to cryptocurrency in a roundabout way: I was looking for a secure voting protocol in hopes of making voting-by-smartphone possible. I've read me some papers. There's a very large literature on coercion-resistant voting (prove the vote tally is correct without revealing any particular vote, even to the voter), and those papers follow exactly the same model.

State the assumptions:

- We want to have a vote

- There is a registrar

- We have some voters who are willing to be coerced [bribed]

- And usually a write-only public database [this is how I got to blockchain]

State the properties we want to have:

- No particular vote, once cast, can have its content revealed even to the voter

- The vote tally will be correct

- Sometimes it's specified how to catch fraudulent votes by an adversary (electronic impersonation)

- Sometimes we don't even want to be able to prove a particular voter voted (imagine a thug coercing you NOT to vote)

State the adversary's capabilities:

- Adversary can arbitrarily slow down and inspect network traffic between voting machines and the tabulator

- Adversary can coerce voter to provide a private key (some protocols allow "spoof" private keys that still work, think "panic code" on a safe - still opens but simultaneously calls the cops)

- Adversary can produce fake messages to the vote tabulator

- Adversary can use the private keys of the voting machines

- ... there are other possibilities, but you get the idea

Set up the protocol:

- Here is where a researcher describes the cryptographic primitives they'll use, network setup, and other assumptions e.g. write-only database, voting machines, pre-allocated private keys to voters from a maybe-corrupted registrar, etc.

- Describe the protocol that uses those things

- Describe possible attacks the adversary could attempt and how the protocol defeats them, either entirely or probabilistically
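To make the "tally is correct without revealing any particular vote" idea a bit more concrete, here's a toy sketch in Python of one classic building block: additive secret sharing across several tallying authorities. It's only an illustration under loudly stated assumptions. The modulus, the number of authorities, and the function names are made up for this example, it assumes at least one authority never colludes with the others, and it provides none of the coercion resistance those papers are actually about.

```python
import secrets

# Hypothetical toy parameters: a prime modulus far larger than any tally,
# and the number of independent tallying authorities.
MODULUS = 2**61 - 1  # a Mersenne prime
NUM_AUTHORITIES = 3


def split_vote(vote: int, n: int = NUM_AUTHORITIES) -> list[int]:
    """Split a 0/1 vote into n shares that sum to the vote mod MODULUS.

    Any n-1 of the shares are uniformly random, so they reveal nothing
    about the vote on their own.
    """
    shares = [secrets.randbelow(MODULUS) for _ in range(n - 1)]
    last = (vote - sum(shares)) % MODULUS
    return shares + [last]


def tally(all_shares: list[list[int]]) -> int:
    """Each authority i sums only the i-th share of every ballot.

    Combining the per-authority subtotals yields the total, but no single
    authority ever sees enough to recover an individual vote.
    """
    n = len(all_shares[0])
    subtotals = [sum(ballot[i] for ballot in all_shares) % MODULUS for i in range(n)]
    return sum(subtotals) % MODULUS


votes = [1, 0, 1, 1, 0]
ballots = [split_vote(v) for v in votes]
print(tally(ballots))  # 3
```

Each authority only ever sees one column of shares, so recovering an individual vote requires every authority to collude. That "at least one honest authority" condition is exactly the kind of thing a paper would write down in its threat model.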

Done. Occasionally there will be some proof-of-concept software or a website to check out, but generally these proposals lack any real implementation. It's research. This is how we move our understanding of the world forward. Even in the blockchain space the paper came first: the Bitcoin whitepaper was published in October 2008, and the first Bitcoin software was released in January 2009.

There are very legitimate ways a person could critique one of these research papers. Dan didn't do that; he appealed to engineering feasibility and what he called "realistic" threats:

> I will demonstrate that despite claims to the contrary, Ouroboros is far less secure due to faulty assumptions in its design

He doesn't actually demonstrate any such thing. To demonstrate, in this context, means to point out the assumption that doesn't hold, state the property that will be violated, and describe how an adversary would violate it. Instead he uses heuristic or common-sense arguments:

> It is entirely possible that in some windows all block producer slots will be randomly assigned to the same producer. While this is statistically unlikely, it is not unreasonable to presume that a long sequence of blocks could be assigned to collusive peers.

> stake-weighted voting creates a very high centralization that can only be countered with approval voting followed by giving the top N equal weight

> Cardano’s Ouroboros algorithm is not mathematically secure due to bad assumptions regarding the relationship between stake and individual-judgment being distributed by the pareto principle.

Is he wrong? No. There are real-world conditions and engineering challenges to overcome in implementing any supposedly secure protocol. This particular style of attack just doesn't do anything to undermine a research paper. The paper would address these kinds of things in its assumptions, or declare what facilities it has available to mitigate the problems. That's not the same as offering a solution or sample code/design that fixes them. This is a BIG problem for a layperson reading the critique: it's not clear what's in the research paper, what's opinion, and what's engineering experience talking.
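The first quote is the kind of claim a research paper would make precise. Here's a minimal sketch assuming an idealized model (my assumption, not Ouroboros's actual leader-election mechanics): each slot's leader is drawn independently with probability proportional to stake, so a colluding group holding stake fraction `alpha` wins any given slot with probability `alpha`. The hypothetical function `prob_run` computes the probability that a window of `n` slots contains at least one run of `k` consecutive slots won by that group.

```python
def prob_run(n: int, k: int, alpha: float) -> float:
    """Probability that n independent slots contain at least one run of k
    consecutive slots won by a coalition holding stake fraction alpha."""
    # state[j]: probability that no k-run has happened yet and the current
    # trailing streak of coalition-won slots has length j (0 <= j < k).
    state = [1.0] + [0.0] * (k - 1)
    hit = 0.0  # probability mass that has already completed a k-run
    for _ in range(n):
        new = [0.0] * k
        # An honest slot resets the streak to zero.
        new[0] = sum(state) * (1 - alpha)
        for j in range(k):
            if j + 1 == k:
                # A coalition slot completes the run; this mass is absorbed.
                hit += state[j] * alpha
            else:
                # A coalition slot extends the streak by one.
                new[j + 1] += state[j] * alpha
        state = new
    return hit


# Hypothetical numbers: a 30% coalition, a 1,000-slot window, a 10-slot run.
print(prob_run(n=1000, k=10, alpha=0.3))
```

Once "statistically unlikely" becomes a number, the argument moves to the assumptions: how much stake the colluding group holds and how long a run it needs. That's exactly where a formal critique would have to engage.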

Annnnnd the conversation is not helped by the rebuttal. I appreciate what Charles is trying to do. He lists the same things I did above: this is a research paper, which means it's highly formalized, and credible critiques will make formal attacks on the assumptions or on the protocol with the modeled adversary (or perhaps show that the adversary is modeled too weakly). However, he gets there only after taking the bait riddled throughout Dan's post (BitShares/Steem/EOS > all). Moreover, there are a couple of academy-first assertions that sound pretty elitist (not wrong, just elitist):

> This is the initial Foundation upon which all consensus protocols ought to be judged in our space

> it's just extraordinary to me how people can be so profoundly naive about the process upon which one has to follow to ensure a protocol is properly designed | This is not a subjective process | This is a well-understood process which has given us modern cryptography

This only entrenches people who are inclined to favor engineering or the more informal style of argument, and let's be straight on this: Dan Larimer is associated with two fantastic systems already in production (meaning live in the world), with a third hitting all its milestones. We have every reason to respect Dan's experience and opinion on these kinds of engineering problems. The informal style doesn't play in the research space, but he is not wrong in his assertions about performance, reliability, consistency, etc. Charles is right that research and formal proofs gave us modern cryptography, but to argue against Getting Shit Done is to shoot oneself in the foot.

And then we have the double down...

> ***According to Charles, the proof of work satisfies his definition of secure ledgers. In my opinion, proof of work provides terrible security based upon real world centralization in mining pools, misalignment of incentives, successful double spends, empty blocks, selfish mining, fee extortion, etc.***

I'm not familiar with the successful double spends he's talking about, so I'll set that aside for now, but the rest of those assertions are not relevant to protocol security. Security just means different things when these two say it, and that will confuse the community. To pick that apart: security research is what I described above, namely stating assumptions, modeling an adversary, stating properties that hold, and showing that a protocol achieves them. Dan is using a muddier, layperson's understanding of security. Those things aren't security properties; they're economic properties. His same arguments from the original critique about Pareto distributions apply to all of those points: any system that creates a differential advantage will fall out that way.

- Mining pools will always consolidate because the more you win, the more you win

- The current winners obviously are incentivized to change very little because they're winning versus the current losers who may more acutely notice unfair advantages

- Fee extortion is obviously a marketplace effect, demand > supply
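The "the more you win, the more you win" dynamic is easy to see in a toy model. The sketch below is a Pólya-urn-style simulation assumed purely for illustration, not a model of real mining economics: miners start with identical hashpower, each round's winner is drawn in proportion to hashpower, and the winner reinvests the reward. Unequal shares emerge from nothing but compounded early luck, which is an economic effect rather than a break in any security property of the protocol. The function name and parameters are hypothetical.

```python
import random


def simulate_reinvestment(num_miners: int, rounds: int, reward: float,
                          seed: int = 0) -> list[float]:
    """Toy model of reinvested mining rewards.

    Every miner starts with equal hashpower. Each round, one miner wins with
    probability proportional to its hashpower and adds the reward to it.
    Returns each miner's final share of total hashpower, largest first.
    """
    rng = random.Random(seed)  # seeded so runs are reproducible
    power = [1.0] * num_miners
    for _ in range(rounds):
        winner = rng.choices(range(num_miners), weights=power, k=1)[0]
        power[winner] += reward
    total = sum(power)
    return sorted((p / total for p in power), reverse=True)


shares = simulate_reinvestment(num_miners=20, rounds=10_000, reward=1.0)
print(f"largest share: {shares[0]:.1%}, smallest share: {shares[-1]:.1%}")
```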

So again, he's not wrong that these are problems in the real-world system, but he is talking around the security properties of a protocol. The Bitcoin protocol is impressively secure, meaning that despite these economic effects, there has been no large-scale breakdown of the protocol or the network as a whole.

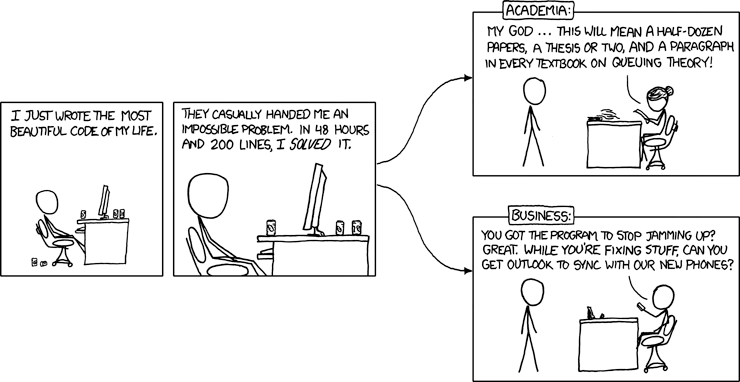

And, maybe ironically, he sums up the entire communication problem with the xkcd comic at the bottom of his post.

An accomplished businessman/engineer is arguing with an academic researcher, and both gentlemen are trying to pull the other onto their home turf.

Gentlemen you can't fight in here, this is the War Room!

We need you both to do what you do so we can have nice things. This analogy may not land if you're not a programmer, but think about Map/Reduce: an entire industry was born from a real application of some old Lisp primitives to data. Imagine the kind of disruption we will see if some academic pinheads join forces with an alpha-male super-coder and give us cross-chain compatibility, or fast, secure finality, or lightweight NIZKs for private transactions, or something I'm not even imagining. It could be a beautiful world, but we've gotta quit fighting amongst ourselves, respect each other's areas of expertise, and stop puffing our chests out.

Well, the truth is Cardano has lots of engineers, and I have worked with lots of academics who are top engineers. And computer science academics do protocols; that is their sole purpose. So I don't know why you wouldn't include them. I think Cardano's approach sets the standard compared to a lot of crappy coins. I don't know why Dan is picking on Charles; he probably sees a good competitor, or a threat.

Certainly the project has a lot of engineers. Lots of projects have lots of engineers :) I used to work for an education company that had a strong bias toward academic purity over Getting Things Done. What I'm reading from these two guys is the perspective of two organizations, one biased toward academic rigor and the other toward delivery and iteration (or heuristic/experience-based work, if you prefer).

Well, but looking at the product, they already have a mainnet and a wallet out in the wild, unlike 99% of ERC20 tokens. Cardano is already delivering and iterating: working with exchanges, dealing with wallet problems. So I would say it is the other way around. Results kinda differ from your observation. It is one thing to just talk, but results are seen in the wild. Also, just take a look at their GitHub branches and commits.

Hmmm I think maybe the important distinction is getting lost which means I haven't communicated as clearly as I'd hoped :)

Cardano has a basis in formal research and Charles makes clear statements that they value that approach to deliver their platform.

Dan Larimer just as clearly wants to put platforms into production and relies on good instincts and heuristic/"practical" definitions of security.

Both are delivering software, just from very different perspectives. There would be an easy test to run here ...

What would happen if some security flaw were found in Ouroboros? The primary claim on which Cardano is founded is that it has "provable security". My prediction is that they would hit the brakes until the flaw was rectified or disproven. They would have to, because it undermines their primary claim. If someone pointed out a similar flaw in the threat model of EOS, or a way to break its security properties, I expect Dan would laugh it off as an unrealistic threat, or say the large pool of voters/stakeholders would defuse the problem, and push forward with delivery. Rigorous process is not the underpinning of EOS.

While I appreciate your attempt at neutrality, I think you underrepresented the importance of a broad definition of security. The narrow academic definition might as well be a straw man, and results derived from it are of limited use.

I've definitely miscommunicated then because all I'm saying is that both kinds of approaches have strengths and the strongest advocates of each are speaking past one another :)

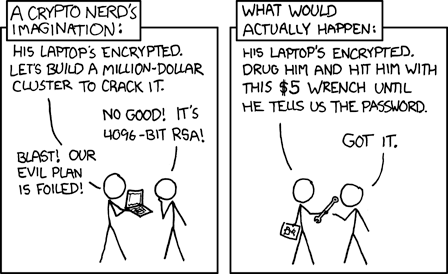

There is a lot of value to be had in both approaches, and I think we will all have a better world if we tackle these problems from both sides rather than insist that the other approach "isn't real security". I'll use a couple of real-world examples to illustrate and then go back to the xkcd well, because it's just that good...

There are some spectacular examples of security protocols failing under any rigorous analysis, and hopefully you'll grant that the people working on them weren't intending them to be insecure. An industry favorite is WEP, for securing your connection to your wireless router. It is trivially broken, and suddenly everyone's web traffic is free to be inspected. The motivation was right (many devices can connect, no mutual trust, encryption!) and the primitive was right (a stream cipher, RC4), but there could not have been a formal analysis of the design, because the keyspace was tiiiiny (a 24-bit per-packet IV on top of a shared key that was originally only 40 bits). There's a similar problem with the DVD encryption scheme, CSS (Content Scramble System). These were solutions to industry problems that looked Good Enough. The problem in both cases is that once a hack is found, the vulnerability is deployed everywhere and it's trivial to share the exploit.
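A back-of-the-envelope birthday-bound calculation shows why that tiny space mattered. WEP combines the shared key with a 24-bit per-packet IV to seed RC4, so a repeated IV under the same key means a repeated keystream, and XORing two ciphertexts encrypted with the same keystream cancels it out. This is a minimal sketch assuming IVs are drawn uniformly at random; the function name is hypothetical.

```python
import math

IV_BITS = 24  # WEP's per-packet initialization vector size
IV_SPACE = 2 ** IV_BITS


def collision_probability(packets: int, space: int = IV_SPACE) -> float:
    """Exact birthday-problem probability that at least two of `packets`
    uniformly random IVs are equal."""
    p_unique = 1.0
    for i in range(packets):
        p_unique *= (space - i) / space
    return 1.0 - p_unique


# Standard approximation for a 50% chance of a repeat: sqrt(2 * ln(2) * N)
half = math.ceil(math.sqrt(2 * math.log(2) * IV_SPACE))
print(half, round(collision_probability(half), 3))
```

Under these assumptions an IV repeat becomes a coin flip after roughly 4,800 packets, which is very little traffic on a busy network.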

Now the reverse case...

All the TLS, encrypted email, and password generators in the world aren't saving grandmas from Nigerian princes. None of it protects us from centralizing on our email for "Forgot password?" processes. TLS specifically is something of a bane for many web companies, and one of the first big optimizations for their edge network is offloading it: terminating TLS at the edge and operating on cleartext requests inside the firewall.

So in my mind, here's how we could work together better. I'll use an example from my work at Netflix. We want secure communication between clients and servers because we share sensitive data over the internet. We also want a strong notion of device and user identity, and we want to make sure it is not transferable between users or devices, because malicious clients have an incentive to do exactly that. TLS doesn't solve all of those problems; it's expensive to do a lot of handshaking all the time, and it restricts which transports we can use. So a few years ago we worked on a protocol of our own that establishes identity in a non-transferable way, encrypts communications, and has extension points so we can evolve the protocol as we need. We have a great security team who worked through all of our business requirements and used industry best practices to build it. On the first day the core protocol was open-sourced, an external expert pointed out a timing attack. Obviously it was addressed, but it probably could have been found preemptively if a formal process and analysis/proof had been done. On the flip side, a strong notion of identity was a critical feature for us that is unaddressed by every public standard I know of, and it's not our core product; it's a business requirement. It doesn't behoove us to overinvest in a formal process when we can get "pretty good" and address all of our practical concerns.
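The specifics of that timing attack aren't described here, so this is only a generic illustration of the class of bug, using Python's standard `hmac` module. The naive comparison returns as soon as two bytes differ, so its running time leaks how much of a forged tag was correct. `hmac.compare_digest` is designed to avoid that content-based early exit. The key, message, and helper names are hypothetical.

```python
import hashlib
import hmac


def naive_equal(a: bytes, b: bytes) -> bool:
    """Early-exit comparison: it returns as soon as a byte differs, so the
    running time leaks how many leading bytes of a guess were correct."""
    if len(a) != len(b):
        return False
    for x, y in zip(a, b):
        if x != y:
            return False
    return True


def verify_tag(key: bytes, message: bytes, tag: bytes) -> bool:
    """Recompute the HMAC-SHA256 tag and compare it without early exit."""
    expected = hmac.new(key, message, hashlib.sha256).digest()
    return hmac.compare_digest(expected, tag)


key = b"hypothetical shared secret"
msg = b"hypothetical request"
good = hmac.new(key, msg, hashlib.sha256).digest()
print(verify_tag(key, msg, good))       # True
print(verify_tag(key, msg, bytes(32)))  # False
```

Both functions give the same answers; the difference is only in what an attacker timing many requests could learn, which is exactly the kind of crack a formal analysis tends to catch.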

This has probably been too long, so I'll try to summarize. Formal process fills in the little cracks and details where hackers play. Business requirements and use-case-driven design flesh out the big picture, which may surface concerns or things un(der)addressed by a formal security hypothesis. If we work together, each hand washing the other instead of slapping it, we'll have a bright future.