Seven months ago (mid-November 2021) I posted about my first experiences with AI models that create images given any text prompt.

A couple of weeks ago, my feed started filling up with examples from DALL-E MINI - that link produces a social-media-friendly 3x3 grid of possible responses to a prompt.

So I thought I'd take a look at how this latest thing deals with some of the random, surreal prompts I came up with back then.

First then is the self-referential "Lloyd Davis Self-Portrait With Banana"

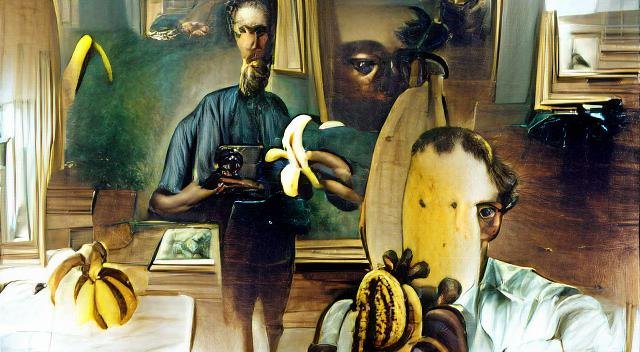

This is what the VQGAN/CLIP combo came up with last November:

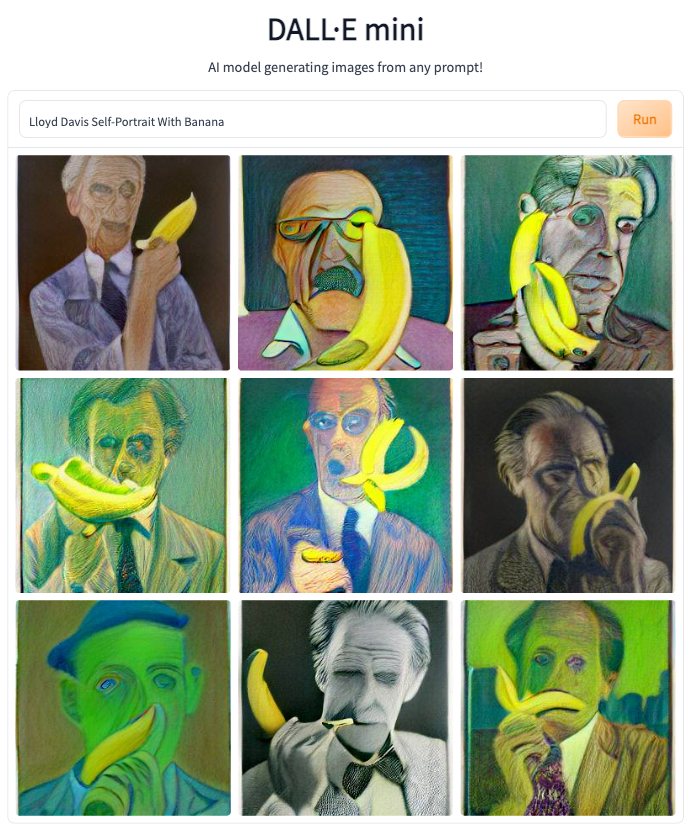

And here's one I made today with dall-e mini:

I have no idea what technical differences there are behind the scenes. I haven't looked at the code or read anyone else's opinion on the tech. I'm purely looking at how the different models interpret the same prompts.

What are the differences? Well, they represent different artistic styles for a start. The earlier one feels slightly more um.. natural? in a surreal kind of way. It looks more like a painting, where the new ones are more drawing-like.

The assumption seems to be, in the first one, that a self-portrait needs to be made with a camera - I see the subject (which is an interpretion of a person called Lloyd Davis, but not necessarily the one who's writing this post) holding a camera-like object as well as a banana and looking into a mirror. Or something.

I could believe that each of the new ones was drawn by the same artist, experimenting with chalk or pastel techniques. This Lloyd Davis is definitely not me (well, possibly the leftmost on the bottom row... at a stretch)

I'm also a little disturbed by the emphasis on sniffing the banana. Artists eh?!

More tomorrow.

I've seen these things going around. A friend has been playing with some of them to make art for her music stuff. Not everyone can draw or paint, but this lets us create something unique. Whether it is art is up for discussion.