Last spring, Roger Peng, a professor in the Johns Hopkins Biostatistics department, gave a thought-provoking Dean’s Lecture on data analysis. The big idea 💡 Peng explores is one with no easy answer: how can the data community define “good” analysis without a common framework for communication & replication? Peng doesn’t go as far as suggesting a solution, but he ultimately points to science 👨🏼🔬 and music 🎶 as guideposts.

The entire lecture is entertaining and worth watching. I’ve shared some notes below.

I’m curious to hear from readers… Do you have a framework or methodology for data analysis that works well? Please share!

— — — — — —

The Past and Future of Data Analysis (Peng 2017)

- We are in the “Measurement Revolution” 📏

- Data is eating the world, BUT the analysis of that data is getting worse

- Everyone is a data analyst 👩🏽🔬

- We are experiencing a replication crisis (reproducibility being challenged, e.g., Duke)

How to solve? No easy answers…

- Automation? 🤖

- Teaching? 👩🏻🏫

“I have the statistical skills, now I have a dataset, what do I do??” 🤷🏻

There is no rubric for “good data analysis”

The “past future” of data analysis

- (1962) John Tukey: think of data analysis as separate from statistics

- (1991) Daryl Pregibon: the process of data analysis is not really understood

- (2015) David Donoho: What is data science? Validity of data analysis

Application of data “science” 🔬 tools across disciplines is currently an “art” 🎨

Can we answer the question: What makes data analysis successful ❓

Identify good design patterns (not rules, but guides)

Cross disciplinary learning (look to music!) 🎼🎻

- Understand what is/is not important to represent

- Shared aesthetics

- Reconstruction based on assumption of shared tools 🛠

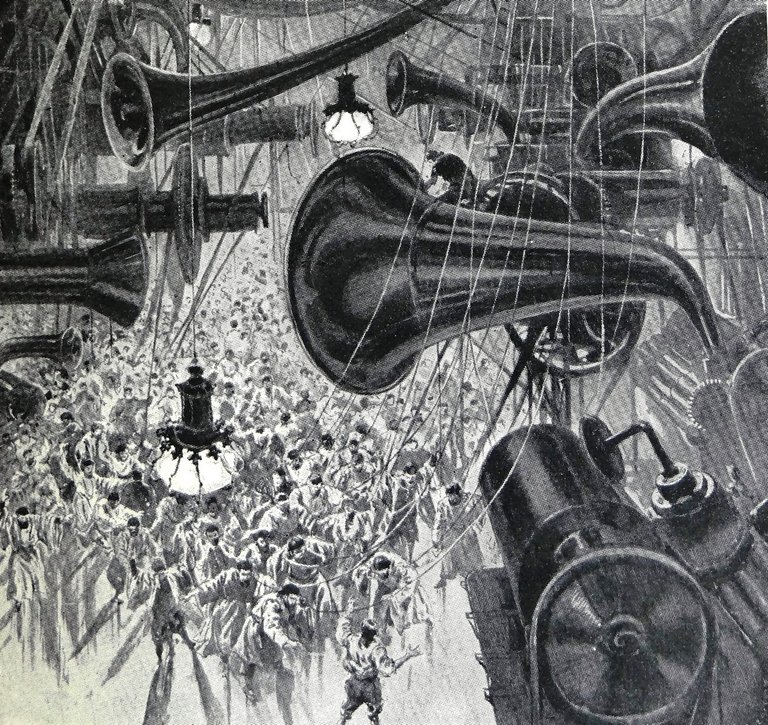

In data analysis, there’s too much focus on the instruments (tools) and not the music (analysis) being produced.

However, there has been some progress on common principles:

- Visualization (Tufte) 📊

- Grammar of Graphics (Wilkinson)

- Tidy data/ggplot2 (Wickham)

Aesthetics -> Methodology

- Reproducible (code, packages, version control, formatting, metadata, documentation)

- Translatable (modular, quantitative, generalize, API)

- Robust to New Data (Stat techniques, assertive testing, code review, fail loud & early)

- Communication (techniques, deliverables, peer-review)
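One way to make “robust to new data” and “fail loud & early” concrete is to validate every incoming batch before any analysis runs, stopping at the first violated assumption instead of silently producing results. The sketch below is a minimal Python illustration, not something from Peng’s lecture: the function name `check_measurements`, the fields `subject_id`, `age`, and `measurement`, and the specific checks are all hypothetical assumptions chosen for illustration.

```python
from typing import Iterable, Mapping

# Hypothetical schema for illustration: every record must carry these fields.
REQUIRED_FIELDS = ("subject_id", "age", "measurement")


def _is_number(x: object) -> bool:
    # bool is a subclass of int in Python, so exclude it explicitly.
    return isinstance(x, (int, float)) and not isinstance(x, bool)


def check_measurements(rows: Iterable[Mapping[str, object]]) -> list:
    """Validate rows and raise ValueError on the first bad one (fail loud & early).

    The checks below (required fields, unique IDs, plausible age, numeric
    measurement, non-empty input) are illustrative assumptions.
    """
    seen_ids = set()
    clean = []
    for i, row in enumerate(rows):
        missing = [f for f in REQUIRED_FIELDS if f not in row]
        if missing:
            raise ValueError(f"row {i}: missing fields {missing}")
        if row["subject_id"] in seen_ids:
            raise ValueError(f"row {i}: duplicate subject_id {row['subject_id']!r}")
        seen_ids.add(row["subject_id"])
        age = row["age"]
        if not _is_number(age) or not 0 <= age <= 120:
            raise ValueError(f"row {i}: implausible age {age!r}")
        if not _is_number(row["measurement"]):
            raise ValueError(f"row {i}: non-numeric measurement {row['measurement']!r}")
        clean.append(dict(row))
    if not clean:
        raise ValueError("no rows to analyze")
    return clean


# Usage: a clean batch passes; a batch with an implausible value stops immediately.
good = [
    {"subject_id": "a1", "age": 34, "measurement": 1.7},
    {"subject_id": "a2", "age": 51, "measurement": 2.3},
]
print(len(check_measurements(good)))  # 2

bad = good + [{"subject_id": "a3", "age": 250, "measurement": 0.9}]
try:
    check_measurements(bad)
except ValueError as err:
    print(err)  # row 2: implausible age 250
```

Raising on the first problem, rather than logging and continuing, is the design choice that matches “fail loud & early”: a surprising batch halts the pipeline before it can quietly distort downstream results.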

The future of data analysis lies in developing a coherent theoretical framework that is scalable and allows data analysis to be taught to a larger audience.

No solutions yet; look to science for answers...
