Hi,

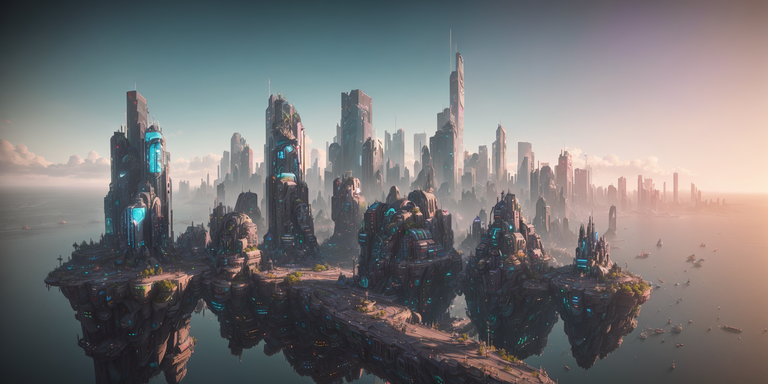

So I was browsing Reddit and came across someone talking about an SD model called alfamix, which has been trained on a lot of cityscapes in different styles. Generating cities for ideas to use in games and fiction is really interesting to me, so I had to have a go at it. I tried a few different weather conditions (clear, overcast and night-time) and was pretty pleased with all the results. I also did some generations with "street level" prompts added. Those all came out with people on the streets, which isn't the look I was after, but a negative prompt might help remove them in future.
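For anyone wanting to try the same kind of sweep, here is a rough sketch of how the prompt variations could be generated in Python. It only builds the prompt strings and doesn't call any model. The base phrase "futuristic cityscape" is the one quoted further down the thread; the exact wording of the weather terms, the `build_prompts` helper and the negative prompt text are all made up for illustration, and whether that negative prompt actually clears the streets is untested.

```python
from itertools import product

# Assumptions for illustration only: the wording of these terms is a guess.
BASE = "futuristic cityscape"
CONDITIONS = ["clear sky", "overcast", "night-time"]
VIEWS = ["", "street level"]  # empty string means no extra viewpoint term
NEGATIVE = "people, crowd, pedestrians"  # hypothetical negative prompt


def build_prompts(base, conditions, views, negative):
    """Return one prompt/negative-prompt pair per condition and viewpoint."""
    jobs = []
    for condition, view in product(conditions, views):
        parts = [base, condition]
        if view:
            parts.append(view)
        jobs.append({
            "prompt": ", ".join(parts),
            # Only the street-level shots produced people, so only those
            # get the negative prompt here.
            "negative_prompt": negative if view else "",
        })
    return jobs


jobs = build_prompts(BASE, CONDITIONS, VIEWS, NEGATIVE)
for job in jobs:
    print(job)
```

Each dictionary could then be passed to whatever front end or pipeline you use, as long as it accepts a prompt and a negative prompt.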

These images are also rendered with a 2x latent upscale, which means they are considerably larger and more detailed than my other images.
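To put rough numbers on the 2x latent upscale, here is a small sketch of the size arithmetic. It assumes a 512x512 base render (the actual base size isn't stated above) and the 8x spatial downsampling of the standard Stable Diffusion VAE; `latent_upscale_sizes` is just a name invented for this example. The extra detail comes from the second denoising pass that usually follows the upscale, which this arithmetic doesn't model.

```python
VAE_FACTOR = 8  # assumed: the SD VAE maps each 8x8 pixel block to one latent cell


def latent_upscale_sizes(width, height, scale=2, vae_factor=VAE_FACTOR):
    """Work out latent and pixel dimensions before and after a latent upscale."""
    base_latent = (width // vae_factor, height // vae_factor)
    upscaled_latent = (base_latent[0] * scale, base_latent[1] * scale)
    output = (upscaled_latent[0] * vae_factor, upscaled_latent[1] * vae_factor)
    # Ratio of output pixel count to base pixel count
    pixel_ratio = (output[0] * output[1]) / (width * height)
    return {
        "base_latent": base_latent,
        "upscaled_latent": upscaled_latent,
        "output": output,
        "pixel_ratio": pixel_ratio,
    }


sizes = latent_upscale_sizes(512, 512)  # 512x512 is an assumed base size
print(sizes)
```

Under those assumptions a 512x512 render goes from a 64x64 latent to a 128x128 one and comes out at 1024x1024, so twice the width and height but four times the pixels.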

Pretty cool fine-tuned model there! Do you think the similar building style and colours across the images come from your prompt(s) or from the training data?

Might have to try it out and see what it does with like architectural terms, for example... 🤔

Definitely prompt-based. I switched "futuristic cityscape" to "medieval town" with some other embellishments and got these out. I wanted something more run-down, but it insists on putting nice-quality buildings there, even on muddy roads.

Heh, wow. There are some hints of texture in the walls of the top image but I see what you mean, it's slightly jarring in the second... unnaturally clean. I suppose if you added 'rundown buildings' it might push them closer to ruins (holes in the roofs, collapsed walls, exposed framework, that sort of thing): structural degradation rather than just a bit of normal wear. Depends what you're after of course, but I guess you're wanting complete buildings 😄

It's surprisingly hard to get a specific result in my experience!

A picture's worth a thousand words, as the old saying goes, but these image models kind of show that the difference between a hundred-word picture and a thousand-word picture isn't really 1:10.

I think the next big step might be further training image models with RLHF, like how ChatGPT is a refinement of GPT-3 guided by human preference. Imagine telling alfamix to focus on making the walls slightly more timeworn without changing the fundamental composition of the image. Would be interesting...

Now with GPT-4 able to respond to multimodal prompts, this could be just over the horizon.