In today's blog, I continue the discussion of my research work of the last couple of years. I focus on a topic slightly different from those considered earlier this month, which lies at the interface of particle physics and cosmology: dark matter. I nevertheless tackle it from a particle collider point of view.

I discuss a study undertaken a few years ago, which has given rise to two open-access scientific publications: a technical one in 2020 and a phenomenological one in 2021. Another publication is expected in the next couple of months, as is a contribution to an upcoming CERN Yellow Report.

Dark matter is very well motivated, as explained in this earlier blog (as well as below). There are consequently hundreds of dark matter models available on the market, and it is our duty to probe them and test them with all the data we have.

In the publications to which this blog is dedicated, we first proposed a framework to look for dark matter at the Large Hadron Collider in a generic way. This makes it possible to study a large fraction of all proposed dark matter models simultaneously, and hopefully to find a signal in future data. Next, we used this framework to investigate a few cases and show how a dark matter signal may be less dark (and thus more visible) than expected.

[Credits: Mario de Leo (CC BY-SA 4.0)]

Why does dark matter matter?

Dark matter is a key topic in high energy physics and cosmology today. This situation does not originate from randomness or the madness of some physicists. There are indeed very good reasons and motivations for dark matter.

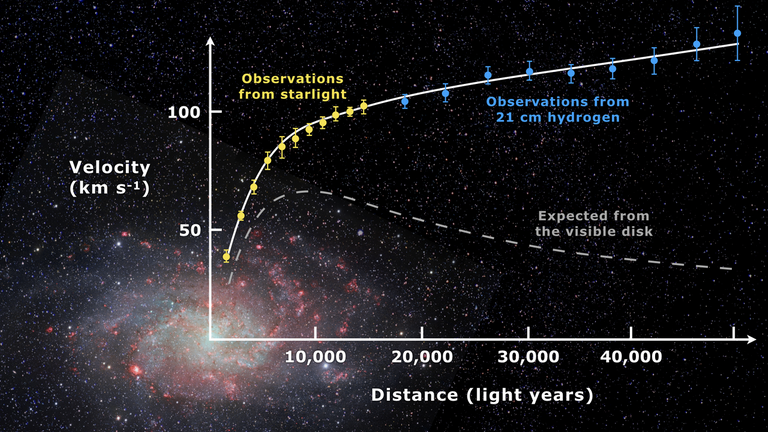

The historical proposal of dark matter dates back to the 1930s, when the motion of galaxies within galaxy clusters revealed more mass than what could be seen. The case was later strongly reinforced by the study of the motion of stars in galaxies. Using our knowledge of how gravity works and the number of stars seen in the sky (through the light they emit), it is possible to predict the orbital velocity of the stars as a function of their distance to the galactic centre.

As an illustrative example, those predictions are shown as a dashed line in the figure below. It concerns one of our neighbours, the galaxy Messier 33 (also known as NGC 598).

[Credits: NASA (CC BY 2.0)]

We can see that stars lying far from the galactic centre are expected to be slower (the dashed line eventually decreases as we move towards the right of the plot). Such a prediction is not a surprise once we postulate that most of the galactic mass is located close to the galactic centre, an assumption that is very reasonable given what we see. A similar phenomenon is in fact observed with the planets of our solar system: planets further away from the Sun orbit it more slowly. This can of course be generalised to planets in any star system.
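The statement about planets can be checked with a few lines of Python. This is a minimal sketch assuming circular orbits around a point-like Sun, for which Newton's law gives v = sqrt(GM/r); the constants and approximate orbital radii are standard values typed in for illustration, not data from the figure.

```python
import math

# Standard values (SI units): gravitational parameter G*M of the Sun, and one
# astronomical unit (the mean Earth-Sun distance).
GM_SUN = 1.32712440018e20  # m^3 s^-2
AU = 1.495978707e11        # m


def circular_speed(gm: float, r: float) -> float:
    """Speed on a circular orbit of radius r around a central mass.

    Equating the centripetal acceleration v^2/r with the gravitational one
    G*M/r^2 gives v = sqrt(G*M / r).
    """
    return math.sqrt(gm / r)


# Approximate mean orbital radii, in astronomical units.
planets = {"Mercury": 0.387, "Earth": 1.0, "Jupiter": 5.203, "Neptune": 30.07}

for name, r_au in planets.items():
    v_km_s = circular_speed(GM_SUN, r_au * AU) / 1e3
    print(f"{name:8s} r = {r_au:6.3f} AU   v = {v_km_s:5.1f} km/s")
```

The speed falls like 1/sqrt(r): this is the "Keplerian" decrease that the dashed line predicts for stars far from the centre of Messier 33.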

However, the measured star velocities in Messier 33 are given by the other curve shown in the above picture: the solid line with the yellow and blue data points. Stars are found to be much faster than what gravity predicts from the visible matter alone.

There are two ways to solve the issue: either we modify gravity, or we add matter that does not reflect, emit or absorb light. The latter is called dark matter.
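To see why extra unseen mass can flatten the curve, here is a toy sketch in arbitrary units. It assumes, purely for illustration, that all visible mass sits at the centre and that a hypothetical dark halo has an enclosed mass growing linearly with radius (the profile that yields a flat rotation curve). The numbers are not a fit to Messier 33.

```python
import math


def circular_speed(enclosed_mass: float, r: float, g: float = 1.0) -> float:
    """v(r) = sqrt(G * M(<r) / r) for a spherically symmetric mass distribution."""
    return math.sqrt(g * enclosed_mass / r)


def visible_mass(r: float) -> float:
    # Toy assumption: one unit of visible mass, all concentrated at the centre.
    return 1.0


def halo_mass(r: float, k: float = 0.5) -> float:
    # Toy assumption: a dark halo whose enclosed mass grows linearly with r.
    return k * r


for r in [1.0, 2.0, 5.0, 10.0, 20.0]:
    v_visible = circular_speed(visible_mass(r), r)
    v_total = circular_speed(visible_mass(r) + halo_mass(r), r)
    print(f"r = {r:5.1f}   visible only: {v_visible:.3f}   with halo: {v_total:.3f}")
```

At large radii the visible-only speed keeps falling like 1/sqrt(r), while the speed with the halo levels off towards sqrt(G k), about 0.707 in these units.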

Fast forward in time: standard cosmology (which includes the dark matter hypothesis) indirectly confirms the presence of dark matter through a variety of pieces of evidence (although modified gravity is not excluded). In my opinion, the most striking example of such evidence comes from the influence of dark matter on the cosmic microwave background (abbreviated as the CMB).

About 380,000 years after the Big Bang, an event called recombination took place. At that time, the first atoms formed from atomic nuclei and free electrons, and matter became electrically neutral. Most photons from that epoch have thus travelled freely ever since (as photons barely interact with electrically neutral matter), and they fill the entire universe.

Those photons are known as the fossil radiation left over from the Big Bang. Very sensitive telescopes can detect them and measure their temperature. The map of the variations of this temperature around the average CMB temperature of 2.73 K (kelvin) then gives a snapshot of the universe at the recombination epoch. The data make sense… only if dark matter is around!
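Why "microwave"? A quick sketch using Wien's displacement law, assuming the CMB spectrum is that of a black body (which it very closely is), converts the 2.73 K temperature quoted above into the wavelength at which the radiation peaks.

```python
# Wien's displacement law: a black body at temperature T emits most
# strongly at the wavelength lambda_max = b / T.
WIEN_B = 2.897771955e-3  # Wien's displacement constant, m K


def wien_peak_wavelength(temperature_k: float) -> float:
    """Peak wavelength (in metres) of a black body at the given temperature."""
    return WIEN_B / temperature_k


lam = wien_peak_wavelength(2.73)  # average CMB temperature, K
print(f"CMB peak wavelength: {lam * 1e3:.2f} mm")
```

The result, about one millimetre, sits at the short-wavelength end of the microwave band.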

As another motivation for dark matter, we can mention gravitational lensing. When something massive (like a galaxy) lies between an observer (us) and a light source, the light bends on its way to us. The mass lying in the middle hence acts as a lens, and we observe a distorted image. Studying such a distortion then provides information on what distorted the light in the first place. In many studies, once again, dark matter must be there to explain the observations.
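The size of the effect can be estimated with the standard point-mass formula for the Einstein radius, the angular scale of the distortion. This is a rough sketch under simplifying assumptions: the galaxy is treated as a point mass of 10^12 solar masses, the distances (1 and 2 gigaparsecs) are illustrative, and the lens-source distance is simply taken as their difference, which ignores how distances combine in an expanding universe.

```python
import math

G = 6.67430e-11      # gravitational constant, m^3 kg^-1 s^-2
C = 2.99792458e8     # speed of light, m/s
M_SUN = 1.98847e30   # solar mass, kg
PARSEC = 3.0857e16   # m
RAD_TO_ARCSEC = 180.0 / math.pi * 3600.0


def einstein_radius(mass_kg: float, d_lens: float, d_source: float, d_lens_source: float) -> float:
    """Angular Einstein radius (radians) of a point-mass lens.

    theta_E = sqrt(4 G M / c^2 * D_ls / (D_l * D_s))
    """
    return math.sqrt(4.0 * G * mass_kg / C**2 * d_lens_source / (d_lens * d_source))


GPC = 1e9 * PARSEC
# Illustrative set-up: lens halfway to the source, D_ls taken as D_s - D_l.
theta = einstein_radius(1e12 * M_SUN, 1.0 * GPC, 2.0 * GPC, 1.0 * GPC)
print(f"Einstein radius: {theta * RAD_TO_ARCSEC:.2f} arcsec")
```

A couple of arcseconds is small, but within reach of modern telescopes. Since the radius grows like the square root of the lensing mass, measuring it tells us how much mass (visible or not) sits in the lens.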

All those reasons (including some reasons not covered above but raised in some of my other blogs) are indirect. In addition, they all converge in the sense that the properties required for dark matter to explain one issue are generally the same as those required to explain other issues. Everything is somewhat consistent.

We however still miss one piece of evidence: a direct detection of dark matter. Such an achievement is actually highly needed to find out which dark matter model(s) among the hundreds of proposed ones are viable. This could come in two ways, provided that dark matter interacts with the particles of the Standard Model on top of its necessary gravitational interactions.

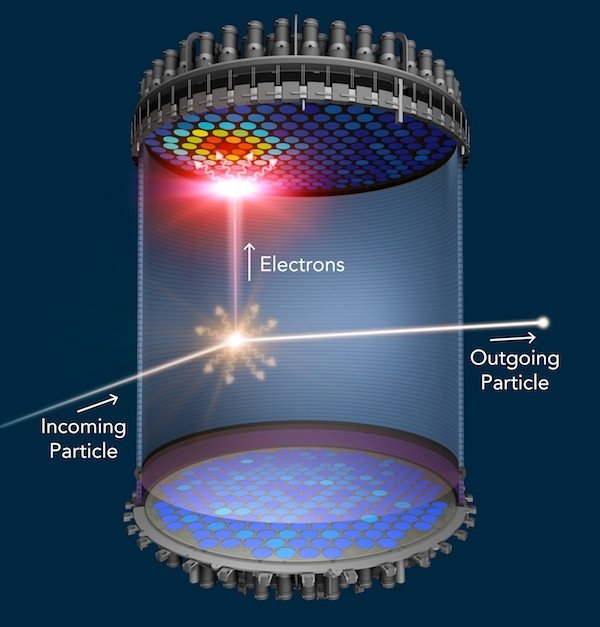

Firstly, we can rely on detectors patiently waiting (actually, the team running the experiment is patiently waiting) for dark matter particles to go through them. Sometimes (very rarely), a dark matter particle could hit one of the atoms making up the detector. By monitoring the resulting recoils (see the image below), we could then see dark matter.
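To get a feeling for the recoils involved, the sketch below computes the largest energy an elastic, head-on collision can transfer to a nucleus. The dark matter mass (100 GeV), the xenon target and the speed (220 km/s) are illustrative assumptions, not properties of any specific experiment or model.

```python
C_KM_S = 299792.458   # speed of light, km/s
GEV_TO_KEV = 1e6


def max_recoil_energy_kev(m_dm_gev: float, m_nucleus_gev: float, v_km_s: float) -> float:
    """Largest nuclear recoil energy (keV) in a non-relativistic elastic collision.

    For a head-on collision, E_max = 2 * mu^2 * v^2 / m_N, where mu is the
    dark matter-nucleus reduced mass (natural units, v in units of c).
    """
    mu = m_dm_gev * m_nucleus_gev / (m_dm_gev + m_nucleus_gev)
    beta = v_km_s / C_KM_S
    return 2.0 * mu**2 * beta**2 / m_nucleus_gev * GEV_TO_KEV


# Assumed inputs: a 100 GeV dark matter particle moving at 220 km/s, hitting a
# xenon-131 nucleus (mass approximated as 131 atomic mass units).
m_xenon = 131 * 0.9315  # GeV
print(f"Maximum recoil energy: {max_recoil_energy_kev(100.0, m_xenon, 220.0):.1f} keV")
```

With these inputs, the recoil carries at most a few tens of keV, which is why such detectors must be extremely sensitive and very well shielded.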

[Credits: SLAC]

Secondly, we can use a particle collider such as the Large Hadron Collider at CERN, also known as the LHC. Here, we make use of highly accelerated protons and the associated large amount of energy available at the point of collision to produce dark matter particles.

The latter manifest themselves as missing energy and missing momentum in the detector (more precisely, as missing momentum in the plane transverse to the beam, since the momenta of the colliding quarks and gluons along the beam are unknown). Dark matter being invisible, and energy and momentum being conserved, dark matter production naturally leads to a momentum and energy imbalance (as the dark particles stealthily carry momentum and energy away). This imbalance then allows us to infer the presence of the invisible dark states trying to fool us.
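The bookkeeping behind the imbalance is simple vector arithmetic. Here is a minimal sketch, assuming each visible object is summarised by its transverse momentum and azimuthal angle. Real experiments use more elaborate definitions (calibrated objects, soft contributions), so this only illustrates the principle.

```python
import math


def missing_transverse_momentum(visible):
    """Magnitude and azimuthal angle of the missing transverse momentum.

    `visible` is a list of (pt, phi) pairs, one per reconstructed visible object.
    Transverse momentum balance means the invisible particles carry minus the
    vector sum of the visible transverse momenta.
    """
    px = -sum(pt * math.cos(phi) for pt, phi in visible)
    py = -sum(pt * math.sin(phi) for pt, phi in visible)
    return math.hypot(px, py), math.atan2(py, px)


# Illustrative event: a single 500 GeV jet and nothing else visible.
met, met_phi = missing_transverse_momentum([(500.0, 0.0)])
print(f"missing pT = {met:.1f} GeV, phi = {met_phi:.3f} rad")
```

A lone jet is thus balanced by an equal amount of missing momentum pointing in the opposite direction, the typical signature of something invisible recoiling against it.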

This second possibility is the one the rest of this blog focuses on…

Modelling a dark matter signal at the Large Hadron Collider

By virtue of the large amount of energy available in a collision at the Large Hadron Collider and the huge associated collision rate, we expect to be able to copiously produce dark matter in LHC collisions (provided it interacts with Standard Model particles). We however have a plethora of interesting dark matter models that should be probed experimentally. Probing them one by one is actually impossible (there are simply too many models). Fortunately, this is in fact not even necessary.

For a few years now, the LHC experimental collaborations have relied on simplified models of dark matter to interpret the results of their analyses. These simplified models have the advantage of reproducing dark matter as it appears in many of the hundreds of proposed models simultaneously. The key point is that they reproduce well enough the part of the full models that is relevant for their LHC phenomenology. In this way, studying a single (simplified) model allows us to constrain plenty of (complete) models in one shot.

The first simplified models for dark matter emerged 6-7 years ago (see here for the associated publication). They are constructed as follows. First, we take the Standard Model and keep it as is. Second, we include a new particle that is a candidate for dark matter. Finally, we connect this particle to the Standard Model.

A direct connection is not possible, as it would allow dark matter to decay into Standard Model particles. Dark matter stability is however needed to explain why we still have dark matter in the universe today (otherwise, it would have decayed long ago). We therefore need to connect the dark matter to the Standard Model via a mediator particle.

In 2015, a scenario was proposed in which this mediator has two independent couplings. The first coupling connects the mediator to a pair of Standard Model particles. This coupling is denoted by Y-SM-SM, where Y is our mediator and SM a Standard Model particle. The second coupling connects the mediator to a pair of dark matter particles and is denoted by Y-DM-DM, where DM stands for our dark matter particle.

Dark matter production at the Large Hadron Collider then proceeds in two steps. First, the mediator is produced in the collision of two Standard Model particles (relying on the existence of the Y-SM-SM interaction). Second, the mediator decays into a pair of dark matter particles (through the Y-DM-DM coupling). In this way, two dark matter particles can be produced in proton-proton collisions at the LHC. In this mechanism, the mediator is produced as an intermediate state from the annihilation of the two incoming particles (the so-called s-channel), which gives these simplified models their name: ‘s-channel dark matter models’.
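The structure of the s-channel model can be encoded in a toy script. As an assumption made for illustration, stability is formalised with a parity under which dark matter is odd and all other fields are even (a common choice, not necessarily the one of every model); a vertex is then allowed only if the product of the parities of its fields is +1. The field labels follow the text, and kinematics is ignored.

```python
# Toy encoding of the s-channel model. Assumed parity: dark matter is odd (-1),
# the mediator Y and the Standard Model particles are even (+1).
PARITY = {"SM": +1, "Y": +1, "DM": -1}
S_CHANNEL_VERTICES = [("Y", "SM", "SM"), ("Y", "DM", "DM")]


def conserves_parity(vertex, parity=PARITY):
    """A vertex is allowed if the product of the parities of its fields is +1."""
    product = 1
    for field in vertex:
        product *= parity[field]
    return product == 1


def decay_modes(particle, vertices):
    """Two-body decays of `particle` allowed by the vertices (masses ignored).

    Channels containing the particle itself (such as DM -> Y DM) are skipped,
    as energy conservation forbids them for a massive mediator.
    """
    modes = []
    for vertex in vertices:
        if particle in vertex:
            rest = list(vertex)
            rest.remove(particle)
            if particle not in rest:
                modes.append(tuple(rest))
    return modes


assert all(conserves_parity(v) for v in S_CHANNEL_VERTICES)
print("direct DM-SM-SM vertex allowed?", conserves_parity(("DM", "SM", "SM")))
print("Y decays to:", decay_modes("Y", S_CHANNEL_VERTICES))
print("DM decays to:", decay_modes("DM", S_CHANNEL_VERTICES))
```

The empty list of dark matter decays is the stability argument in miniature: within this toy, dark matter has nothing to decay into, and it can only be created in pairs through SM SM → Y → DM DM.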

Dark matter particles are studied in cases where they are pair-produced, but where the pair is not produced alone. Otherwise, we would just produce something invisible and nothing else. However, something visible (for instance, a jet radiated from one of the colliding quarks or gluons) must be produced in a collision to trigger the detector to record the event. If nothing visible is produced, the detector behaves as if nothing had happened.

In a second stage, physicists reconstruct what is visible in the collision record, and infer the presence of a momentum and energy imbalance. The latter is always associated with invisible particles, which could be dark matter.

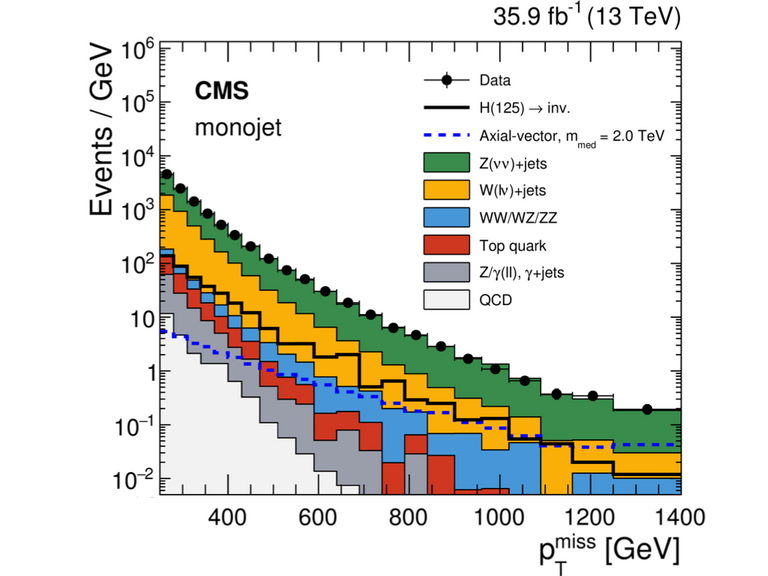

Such a mechanism was extensively studied in the following years, providing a common framework to interpret the results of all dark matter searches at the LHC. An example of such results is shown in the figure below.

[Credits: PRD 97 (2018) 092005 (CC BY 4.0)]

This figure shows the distribution of the momentum imbalance in collisions in which the detector reconstructs one cascade of quarks and gluons, called a jet. The data points are shown in black (with tiny error bars) and agree very well with the Standard Model expectation (the stack of coloured histograms). No (s-channel) dark matter signal thus emerges from the data…

What was already known at that time is that the considered class of simplified models does not apply to many dark matter models. In these models, although a mediator particle connects the dark matter to the Standard Model, there are no Y-DM-DM and Y-SM-SM couplings, as these are forbidden by the theory. This model configuration, called ‘t-channel dark matter’, is the one addressed in the two publications discussed in this blog (here and there).

t-channel dark matter models at the LHC

We focus on the second big class of existing simplified models for dark matter. Here, we still have the Standard Model on the one hand, and the dark matter on the other hand. Again, a mediator particle connects both. This time, however, the mediator couples simultaneously to one dark matter particle and one Standard Model particle (through a Y-SM-DM coupling in the notation introduced above).

In the technical work published in 2020, we implemented this class of simplified models in full generality in high-energy physics simulation packages. This allows anyone (physicists but also non-physicists, as everything is open source) to use the model and simulate the associated signals at the LHC. In the next publication, we investigated the specific features of this class of models.

Naively, a dark matter signal in any simplified model would consist of the production of two dark matter particles (which are always produced in pairs, as a consequence of enforcing dark matter stability), plus something visible. In t-channel simplified models, this is actually not the whole story. More precisely, we have demonstrated in our work that the dark matter signal includes three components: the considered dark matter pair-production signal, plus two additional contributions.

- We can produce two mediators in LHC collisions, each mediator decaying into a dark matter particle and a Standard Model particle by virtue of the Y-SM-DM interaction.

- We can produce, from two colliding protons, an associated pair of particles comprising one mediator (which decays into one dark matter particle and one Standard Model particle) and one dark matter particle.
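A toy sketch makes the three components explicit. It assumes, as in the text, that the mediator always decays into one dark matter particle and one Standard Model particle; the labels are hypothetical placeholders and no cross-sections are computed.

```python
# Toy encoding of the t-channel model: the mediator Y decays through the
# Y-SM-DM coupling into one dark matter particle and one Standard Model particle.
DECAYS = {"Y": ("DM", "SM")}


def final_state(produced):
    """Let every mediator decay and count the resulting particles."""
    particles = []
    for p in produced:
        particles.extend(DECAYS.get(p, (p,)))
    return {"DM": particles.count("DM"), "SM": particles.count("SM")}


components = {
    "dark matter pair": ("DM", "DM"),
    "mediator pair": ("Y", "Y"),
    "mediator + dark matter": ("Y", "DM"),
}

for name, produced in components.items():
    print(f"{name:24s} -> {final_state(produced)}")
```

Every component ends with exactly two dark matter particles, but they differ in the number of accompanying Standard Model particles (none, two or one). In the pair-production case, the visible part must therefore come from extra radiation.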

Depending on the masses of the dark matter and of the mediator, as well as on the strength of the Y-SM-DM coupling, any of the three components could dominate. In this way, each component brings a complementary contribution, and none can be neglected. This finding contrasts with the way t-channel dark matter signals are currently simulated, which does not consistently include the three components of the signal. This is illustrated in the figure below.

[Credits: PLB 813 (2021) 136038 (CC BY 4.0)]

In this figure, we make use of an existing search for dark matter at the LHC conducted by the ATLAS collaboration. We interpret the results of this search in the context of the studied simplified models. We have fixed the Y-SM-DM coupling and then studied the constraints on the mass of the mediator (the x-axis of the plot) and on that of the dark matter (the y-axis of the plot). Each point in the shown plane therefore represents one particular choice of masses for the dark matter and the mediator. Moreover, as the mediator must be heavier than the dark matter (to ensure dark matter stability), any configuration above the black diagonal is forbidden.

If we define the dark matter signal from dark matter pair production only, the resulting constraints are given by the red line. Any mass setup below the red line is excluded by data, which corresponds to the lightest scenarios. This constraint can be seen as originating from a very naive approach to simulating a dark matter signal (only considering pure dark matter production at the LHC).

However, once we account for mediator pair production, it turns out that our signal is also excluded for other (heavier) mass configurations. In the above figure, this corresponds to the blue contour: any point lying either below the top part of the blue contour or to the left of its rightmost part is excluded. In addition, we can include the last signal contribution, which corresponds to the associated production of one mediator and one dark matter particle. This gives rise to the green constraint, any mass configuration below the green line being excluded.

The total excluded region of the model is obtained by combining the green, blue and red exclusion contours. We immediately see that the additional signal components have a non-negligible impact: dark matter masses much larger than naively expected are reachable at the LHC.

I didn’t read anything. What is all of this about?

Dark matter is probably one of the most searched-for substances today. While it is motivated by a wealth of observations, it still escapes direct experimental detection. There are two ways to obtain this last ingredient, CERN’s Large Hadron Collider (the LHC) being one of them.

In this blog, I discuss two of my recent research articles (here and there) that focus on how to probe a variety of dark matter models at the LHC at once. Those models are constructed by having the Standard Model on the one hand, and a dark matter particle on the other hand. Next, a mediator particle is introduced and connects the dark matter and the Standard Model. While this construction may seem very specific, it is in fact representative of many dark matter models currently available on the market.

We provided a general framework allowing for the simulation of the model’s dark matter signals, and used it to understand the corresponding phenomenology at the LHC. We demonstrated that the full signal includes three components, and that none of them can be neglected. Depending on the masses of the new particles and on the strength of their interaction, any component of the signal could indeed be dominant. This finding is quite important, as it contrasts with the naive approach followed in the past.

As a consequence, discussions have been quite active within the LHC Dark Matter Working Group (hosted at CERN), and we will actively contribute to the upcoming CERN Yellow Report. In addition, my collaborators and I are currently finalising a new comprehensive article on this topic, going deeper into all (LHC and cosmology) details.

I hope you enjoyed reading this text. As usual, feel free to provide feedback of any kind, to ask questions or clarifications, and to comment in general. Engagement is fun!

Cheers, and see you on Thursday for a French version of this dark blog, and on Monday for a new topic (which I have not chosen yet).

This was a little tough for me to chew, but the summary section helped me a lot.

In simple language, could you explain what you meant by the above statement? Thank you in anticipation of your reply.

I actually like starting and ending the post with two different summary versions. This is always useful for those who like to get the message(s) of the blog, but have no time to dig into it.

I must also admit I don't much enjoy writing short posts, as this forces me to shorten many explanations for which more space is needed. Sometimes, I even feel that my posts should be more detailed, the clear danger being to scare readers away from tackling them at all...

That's why any form of feedback is always useful! I usually then adapt.

Let me try to rephrase this.

In the first of the two works discussed, we designed some computer code. This code can be used together with widely used high-energy physics software dedicated to the simulation of particle collisions. In this way, the existing software plus our code allows one to simulate a large class of dark matter signals at particle colliders.

In the second work, we made use of the code and the simulation software to actually study the model in detail, and to investigate its consequences for the Large Hadron Collider at CERN.

Is it clearer?

Yes, it is. Thanks for taking the time to put it in comprehensible form for those of us who are not your learned colleagues. Always keep us in mind in your subsequent blogs 😀

Doing that is the fun part, believe me :)

Congrats on your discoveries and publications!

I want to ask something related to a previous article, where you talked about how, in the last few months, you have been finding data showing that the Standard Model isn't the whole picture.

After that convo I remembered a previous discussion of ours, which probably took place some years ago, where you were saying, if I recall correctly, that the Standard Model is the most verified theory in existence, supported by trillions of observations, something like that (I may be misremembering and misexpressing, but I think the point is the same!)

I remember I was thinking at the time, "there's no way the theory of evolution is less supported than any physics theory! we don't know if the physics theories will hold up for another 10 years, but I'm sure every biologist feels pretty certain Darwin's theory will hold, well, forever!"

And now I was thinking, maybe itemizing all those observations is the wrong way to go about it. Maybe they should be grouped! Like, if I have 50 swans, and I'm trying to figure out whether they are all white, I could count their individual feathers and consider every feather as a piece of evidence; OR I would consider the whole swan as just one piece of evidence. Doing the same kind of experiment over and over again at CERN and counting it kinda seems inappropriate. OR maybe every time a new microbe is 'born' we should count it as further evidence for Darwin!

Another thought: Karl Popper insisted on science being about falsification. Confirmation, to him, doesn't count for much. Science's job is to look for instances that falsify the theory. And that seems to be a large part of what's being done at CERN if I understand correctly.

Thanks for this interesting comment. There are various points which I should answer in there.

I would not say trillions but a few :-) The point is however the same, as you said.

For the rest, the picture of the iceberg answers it pretty well, in my opinion. Whatever the true theory of nature is, at the energy scale of the Standard Model it reduces to the Standard Model. This is why we talk about extensions of the Standard Model.

Therefore, depending on what you want to do, the Standard Model may be good enough. As an analogy, let's assume you want to calculate the speed of a car. There is no need to use special relativity here: it would just make calculations way harder for a barely different numerical result.
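The car example can be quantified with the Lorentz factor, which measures how much special relativity departs from everyday mechanics. This is a small sketch assuming a speed of 30 m/s (about 108 km/h); it uses `expm1` and `log1p` so that the tiny relativistic correction is not lost to floating-point rounding.

```python
import math

C = 299792458.0  # speed of light, m/s


def gamma_minus_one(v: float) -> float:
    """Lorentz factor minus one, gamma - 1 = 1/sqrt(1 - (v/c)^2) - 1.

    Written as expm1(-0.5 * log1p(-beta^2)) to stay accurate when v << c.
    """
    beta2 = (v / C) ** 2
    return math.expm1(-0.5 * math.log1p(-beta2))


v_car = 30.0  # m/s, roughly 108 km/h
print(f"gamma - 1 = {gamma_minus_one(v_car):.2e}")
```

The correction is of order 10^-15, far below anything a speedometer could ever measure.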

Does it clarify?

Cheers!

Another adventure, full of information that may not be the direct purpose of your blog, and yet delightful.

First of all let me congratulate you on your light touch:

😆 Nothing like a chuckle to keep the reader interested.

Then the gems of information that are new to me.

And, in our own solar system, planets further from the sun move more slowly around it.

Also, the event you call recombination (380,000 years after the Big Bang) and the fact that photons from that time are still around (!)

Just some information I picked up along the way to your dark matter discussion.

Now to Dark Matter: I understand that you need a mediator particle to interact (connect) the dark matter particle with the standard model. The result of this coupling will not be directly observable (the dark matter particle), but the consequence will be.

Physics 101: thank you @lemouth.

By the way, I think you are correct to offer a long blog with a great deal of information. There are those, (like me) who are new to this and cannot possibly challenge your observations or statements. But you want the blog to hold up under scrutiny of people who do know a great deal about the material. You manage to straddle two worlds with the blog: a professional reader, and a lay person who is wandering around wide-eyed.

PS: That title is perfect. I have watched the movie *The Hitchhiker's Guide to the Galaxy* at least 3 times.

Thanks a lot for this valuable feedback. As authors, we can never be sure the blog is written well enough for the audience to understand it (although I do my best).

The above sentence is a bit confusing to me. I can understand it in two ways and will try to comment on both of them (hopefully one of the two will be the right one).

PS: I smiled a lot when I chose that title too, and precisely for the reason you pointed out ;)

This I did remember from your blog (yes, the message came through) but I didn't know how to say it. Pretty good writing when someone with my background can come away understanding the basics of the 'lesson'.

Thank you!

That's the greatest success for the author :D

Really wish I had been there with you guys during the meet-up at CERN. Seeing some of the things you discussed here would have been awesome and would have helped me relate more to your blogs.

So, let's assume dark matter is detected today, beyond just the models. What do you think would be the likely multiplier effects as far as research in particle physics is concerned?

I am confident that a new meeting at CERN could happen in the next few years. There is a long shutdown period in 2025-2027, and two planned technical stops in Jan-Feb 2023 and Dec-Feb 2024. If there are options, I will see what I can do. This obviously strongly depends on the evolution of the pandemic, which may challenge the opening of the machine and of the site to the general public. Note that I also promised to bring my older son and @gtg inside (at some point).

After the detection of a dark matter signal, the obvious next step will be to measure its properties (many models can explain a single signal). This will allow us to corner dark matter better, and to build experiments (possibly relying on new or at least more appropriate technologies) to get dark matter produced at a copious rate.

At the end of the day, this will have a strong impact on our understanding of cosmology and particle physics as we will have to include dark matter in the Standard Model.

Does it answer the question?

Perfectly. Now I see why so much time and so many resources are being dedicated to trying to detect dark matter.

Excellent! Thanks for passing by!
